Chapter 7 Individual Differences in Cognitive Strategies (Multilevel modeling)

7.1 Introduction

Our exploration of decision-making models has so far focused on single agents or on behavior averaged across many agents. However, cognitive science consistently reveals that individuals differ systematically in how they approach tasks and process information. Some people may be more risk-averse, have better memory, learn faster, or employ entirely different strategies than others. This chapter introduces multilevel modeling as a framework for capturing these individual differences while still identifying population-level patterns.

Multilevel modeling (also called hierarchical modeling) addresses this challenge. It allows us to simultaneously:

- Capture individual differences across participants

- Identify population-level patterns that generalize across individuals

- Improve estimates for individuals with limited data by leveraging information from the group

Consider our matching pennies game: players might vary in their strategic sophistication, memory capacity, or learning rates. Some may show strong biases toward particular choices while others adapt more flexibly to their opponents. Multilevel modeling allows us to quantify these variations while still understanding what patterns hold across the population.

7.2 Learning Objectives

After completing this chapter, you will be able to:

- Understand how multilevel modeling balances individual and group-level information

- Distinguish between complete pooling, no pooling, and partial pooling approaches to modeling group and individual variation

- Use different parameterizations to improve model efficiency

- Evaluate model quality through systematic parameter recovery studies

- Apply multilevel modeling techniques to cognitive science questions

7.3 The Value of Multilevel Modeling

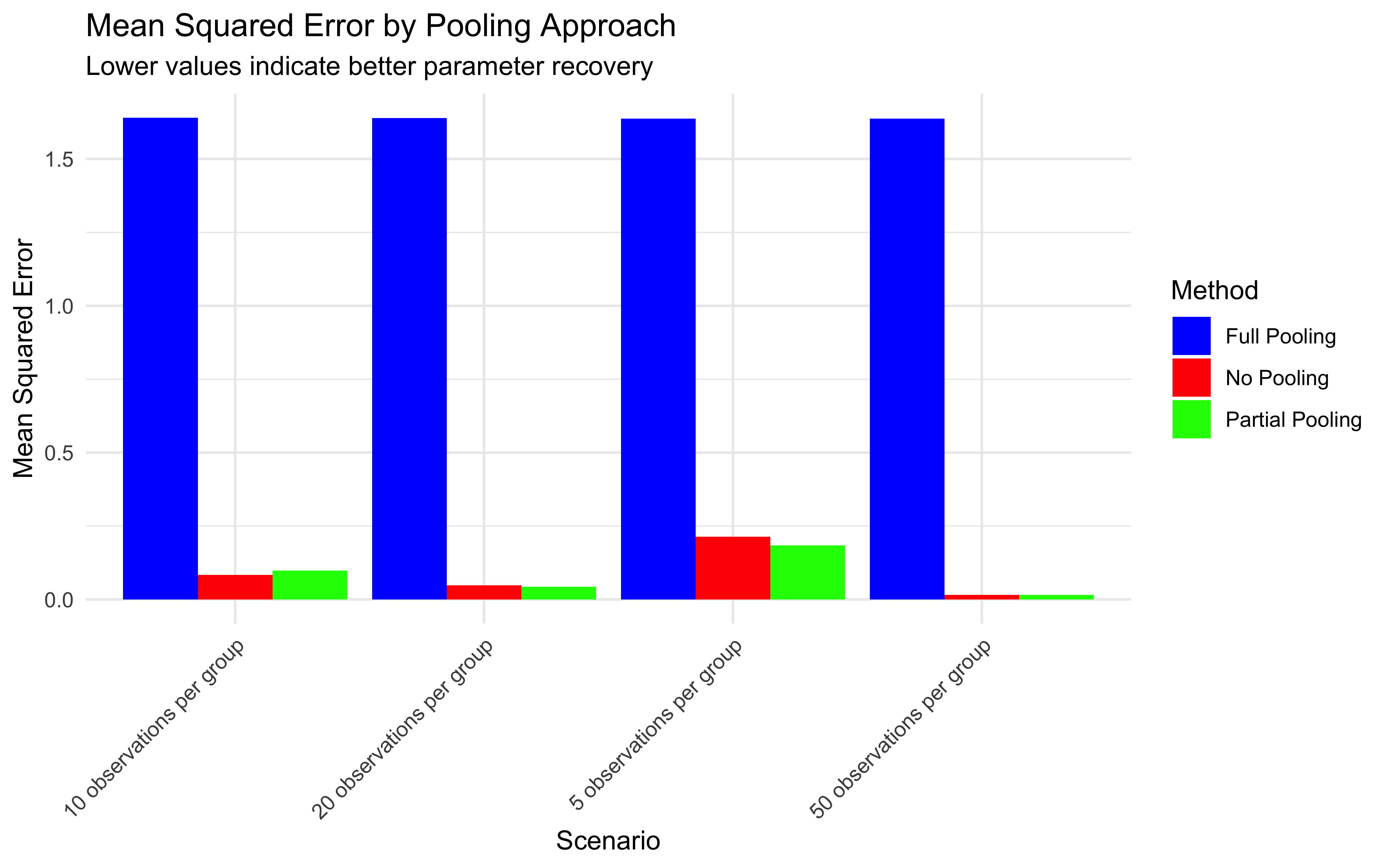

Traditional approaches to handling individual differences often force a choice between two extremes:

7.3.1 Complete Pooling

- Treats all participants as identical by averaging or combining their data

- Estimates a single set of parameters for the entire group

- Ignores individual differences entirely

- Example: Fitting a single model to all participants’ data combined

7.3.2 No Pooling

- Analyzes each participant completely separately

- Estimates separate parameters for each individual

- Fails to leverage information shared across participants and can lead to unstable estimates

- Example: Fitting separate models to each participant’s data

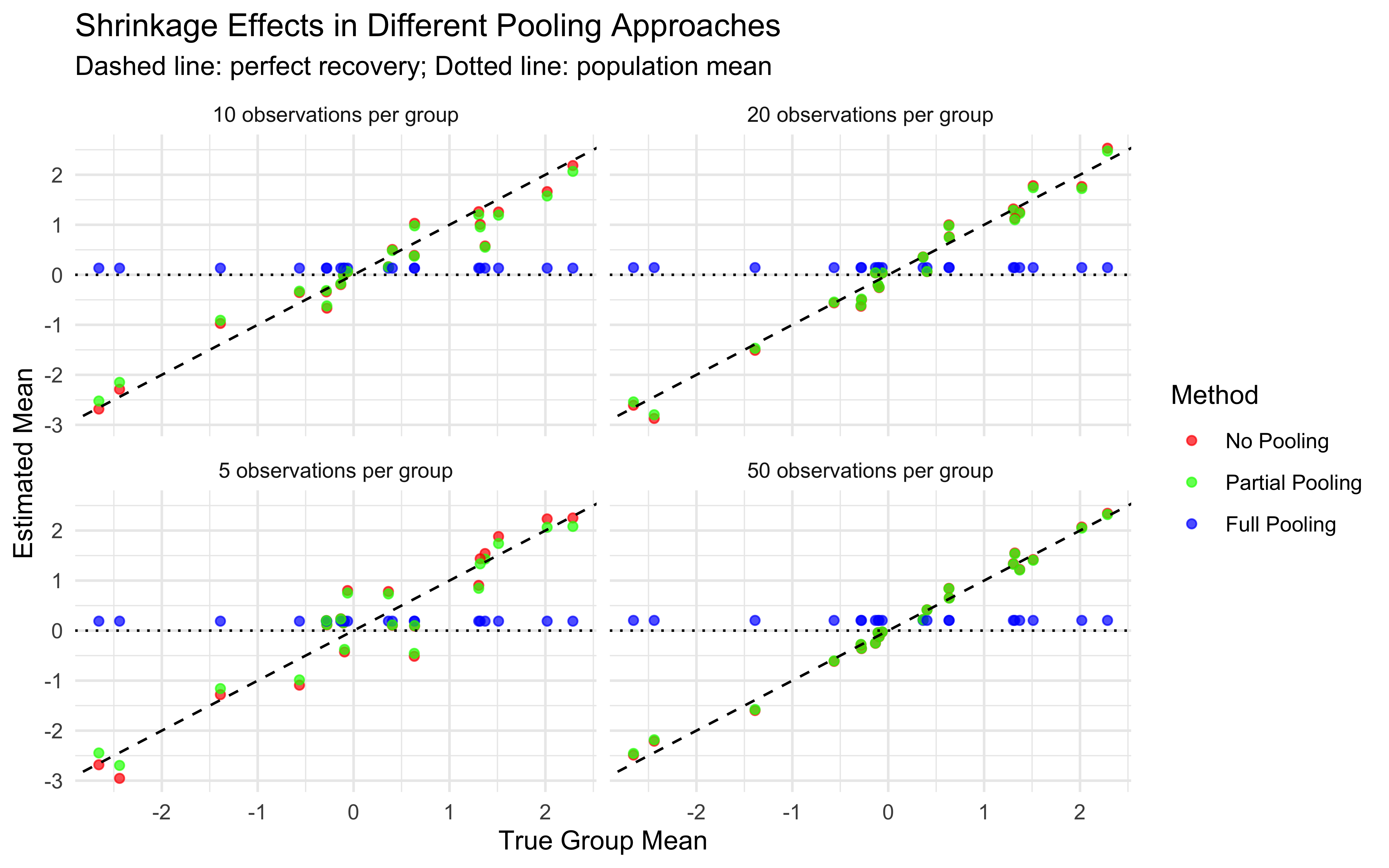

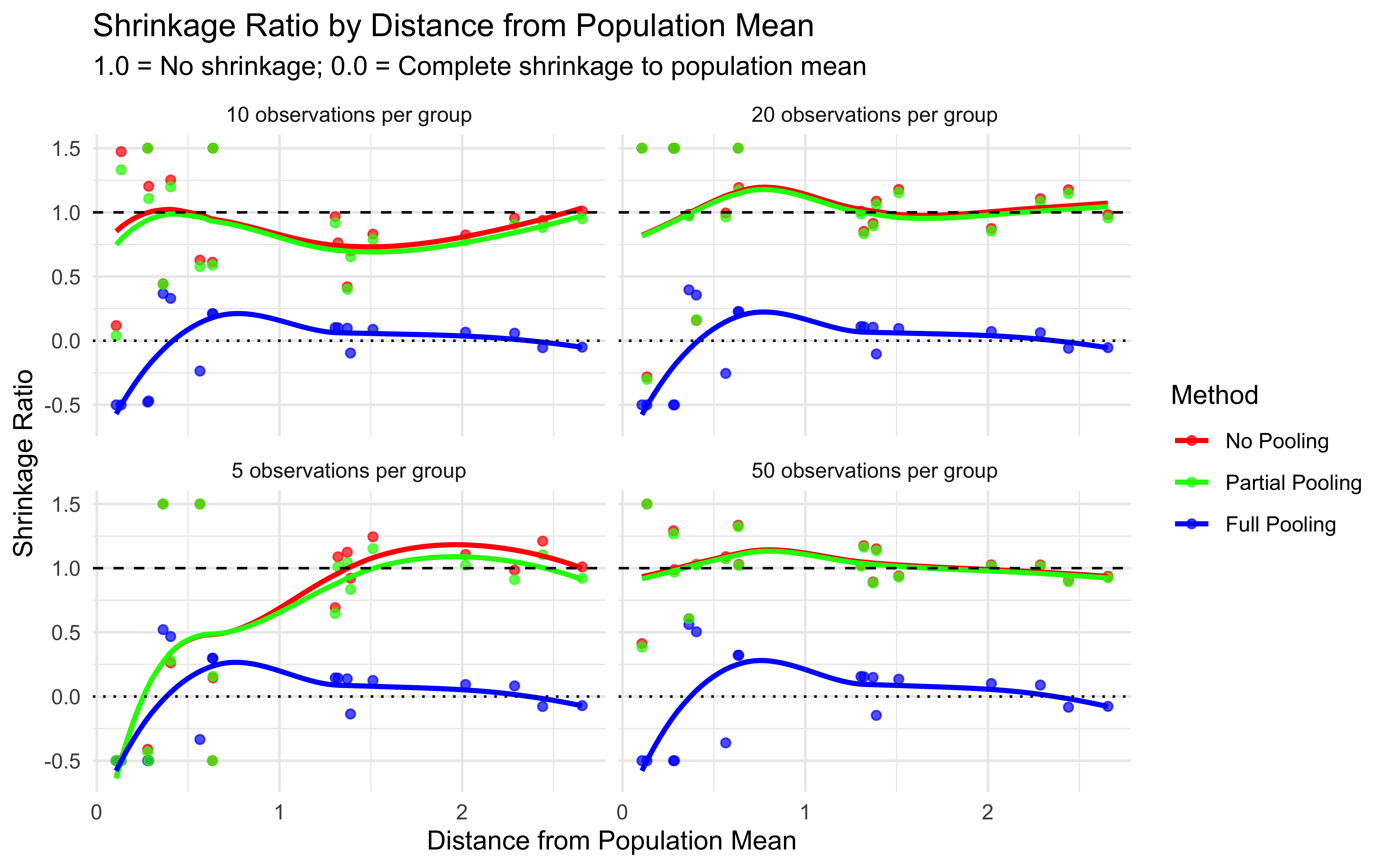

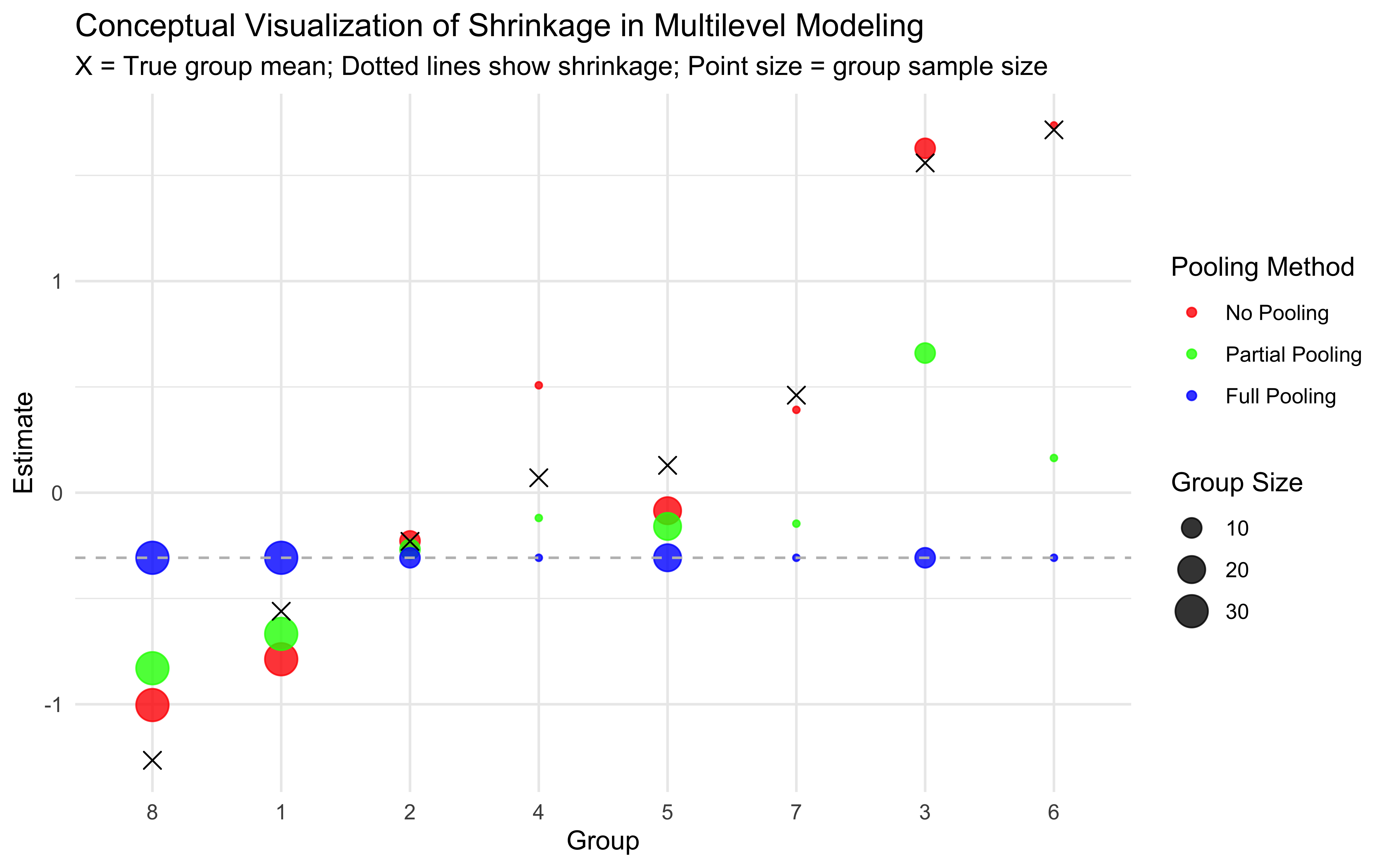

Multilevel modeling offers a middle ground through partial pooling. Individual estimates are informed by both individual-level data and the overall population distribution.

7.3.3 Partial Pooling (Multilevel Modeling)

- Individual parameters are treated as coming from a group-level distribution

- Estimates are informed by both individual data and the population distribution

- Creates a balance between individual and group information

- Example: Hierarchical Bayesian model with parameters at both individual and group levels

This partial pooling approach is particularly valuable when:

- Data per individual is limited (e.g., few trials per participant)

- Individual differences are meaningful but not completely independent

- We want to make predictions about new individuals from the same population
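To make the three pooling strategies concrete, here is a minimal base-R sketch on toy data. It is an illustration under stated assumptions, not the chapter's Stan model: the number of agents and trials are made up, and the partial-pooling estimate uses a fixed, hand-picked shrinkage weight (`prior_strength`, a hypothetical name) rather than one learned from the data as a multilevel model would do.

```r
# Toy comparison of complete, no, and partial pooling (illustrative only).
set.seed(1)

n_agents <- 8     # hypothetical number of participants
n_trials <- 10    # few trials each, which is where pooling matters most

# Each agent's true rate is drawn from a population on the log-odds scale
true_theta <- plogis(rnorm(n_agents, mean = 1.4, sd = 0.65))
successes  <- rbinom(n_agents, size = n_trials, prob = true_theta)

# Complete pooling: a single rate for everyone
complete <- sum(successes) / (n_agents * n_trials)

# No pooling: each agent's own observed proportion
no_pool <- successes / n_trials

# Partial pooling with an ASSUMED shrinkage weight (not estimated):
# posterior mean under a Beta prior centred on the pooled rate and
# worth `prior_strength` pseudo-trials
prior_strength <- 10
partial <- (successes + prior_strength * complete) / (n_trials + prior_strength)

round(data.frame(true_theta, no_pool, partial, complete), 2)
```

Each partial-pooling estimate is a weighted average of the agent's own proportion and the pooled rate, so it always lies between the two. A full multilevel model learns the weight from the data instead of fixing it in advance.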

7.4 Graphical Model Visualization

Before diving into code, let’s understand the structure of our multilevel models using graphical model notation. These diagrams help visualize how parameters relate to each other and to the observed data.

7.4.1 Biased Agent Model

In this model, each agent has an individual bias parameter (θ) that determines their probability of choosing “right” (1) versus “left” (0). We are now conceptualizing our agents as being part of (sampled from) a more general population. This general population is characterized by a population level average parameter value (e.g. a general bias of 0.8 as we all like right hands more) and a certain variation in the population (e.g. a standard deviation of 0.1, as we are all a bit different from each other). Each biased agent’s bias is then sampled from that distribution.

The key elements are:

- Population parameters: μ_θ (mean bias) and σ_θ (standard deviation of bias)

- Individual parameters: θ_i (bias for agent i)

- Observed data: y_it (choice for agent i on trial t)

7.4.2 Memory Agent Model

This model is more complex, with each agent having two parameters: a baseline bias (α) and a memory sensitivity parameter (β).

The key elements are:

- Population parameters: μ_α, σ_α, μ_β, σ_β (means and standard deviations)

- Individual parameters: α_i (bias for agent i), β_i (memory sensitivity for agent i)

- Transformed variables: m_it (memory state for agent i on trial t)

- Observed data: y_it (choice for agent i on trial t)

These graphical models help us understand how information flows in our models and guide our implementation in Stan.
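As a small illustration of how α and β combine with the memory state, the sketch below evaluates the memory agent's decision rule for a few values of β. It assumes the rule used in the simulation code of this chapter (inverse logit of bias plus beta times the logit of the opponent's observed rate), and uses base R's `plogis()` and `qlogis()` in place of the `brms` helpers. The function name `memory_choice_prob` is hypothetical.

```r
# Memory agent decision rule on the log-odds scale (base R only).
# plogis() is the inverse logit and qlogis() the logit.
memory_choice_prob <- function(bias, beta, memory) {
  plogis(bias + beta * qlogis(memory))
}

memory <- c(0.2, 0.5, 0.8)  # opponent's observed rate of choosing "right"

memory_choice_prob(bias = 0, beta = 0, memory)    # ignores memory: always 0.5
memory_choice_prob(bias = 0, beta = 1, memory)    # reproduces the memory exactly
memory_choice_prob(bias = 0, beta = 1.5, memory)  # exaggerates the opponent's tendency
```

With β = 0 the agent ignores the opponent's history, with β = 1 it probability-matches, and with β > 1 it pushes its choices further toward the opponent's dominant option.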

Again, it’s practical to work in log odds. Why? It’s not inconceivable that an agent would be 3 SD from the mean. A biased agent could then have a rate of 0.8 + 3 * 0.1, which gives a rate of 1.1, and it’s impossible to choose the right hand 110% of the time. We want an easy way to avoid these situations without tweaking our parameters too carefully or adding exception statements (e.g. if rate > 1, then rate = 1). Converting to log odds again lets us work in an unbounded space and, in the last step, squeeze everything back into the 0-1 probability space.
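The argument can be checked with a few lines of base R. This is a minimal sketch: the log-odds SD of 0.65 is the value used for the biased agents in this chapter, while the sample size of 100,000 is an arbitrary choice for illustration.

```r
# Why sample on the log-odds scale: probabilities can never leave (0, 1).
mean_prob <- 0.8
sd_prob   <- 0.1

# Sampling directly on the probability scale can produce impossible rates
mean_prob + 3 * sd_prob                 # above 1, not a valid probability

# On the log-odds scale the same kind of extreme agent stays valid
mean_logodds <- qlogis(mean_prob)       # about 1.386
sd_logodds   <- 0.65                    # population SD used in this chapter
plogis(mean_logodds + 3 * sd_logodds)   # close to, but below, 1

# Simulated check of the population spread back on the probability scale
set.seed(123)
rates <- plogis(rnorm(1e5, mean_logodds, sd_logodds))
range(rates)   # strictly inside (0, 1)
sd(rates)      # roughly 0.1
```

Because the inverse logit is nonlinear, the mean of the simulated rates is not exactly 0.8. The log-odds mean maps to the population median on the probability scale.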

N.B. we model all agents with some added noise as we assume it cannot be eliminated from empirical studies.

pacman::p_load(tidyverse,

here,

posterior,

cmdstanr,

brms,

tidybayes,

patchwork,

bayesplot,

furrr,

LaplacesDemon)

# Population-level parameters

agents <- 100 # Number of agents to simulate

trials <- 120 # Number of trials per agent

noise <- 0 # Base noise level (probability of random choice)

# Biased agent population parameters

rateM <- 1.386 # Population mean of bias (log-odds scale, ~0.8 in probability)

rateSD <- 0.65 # Population SD of bias (log-odds scale, ~0.1 in probability)

# Memory agent population parameters

biasM <- 0 # Population mean of baseline bias (log-odds scale)

biasSD <- 0.1 # Population SD of baseline bias (log-odds scale)

betaM <- 1.5 # Population mean of memory sensitivity (log-odds scale)

betaSD <- 0.3 # Population SD of memory sensitivity (log-odds scale)

# For reference, convert log-odds parameters to probability scale

cat("Biased agent population mean (probability scale):",

round(plogis(rateM), 2), "\n")

## Biased agent population mean (probability scale): 0.8

## Approximate biased agent population SD (probability scale): 0.1

# Random agent function: makes choices based on bias parameter

# Parameters:

# rate: log-odds of choosing option 1 ("right")

# noise: probability of making a random choice

# Returns: binary choice (0 or 1)

RandomAgentNoise_f <- function(rate, noise) {

# Generate choice based on agent's bias parameter (on log-odds scale)

choice <- rbinom(1, 1, plogis(rate))

# With probability 'noise', override choice with random 50/50 selection

if (rbinom(1, 1, noise) == 1) {

choice = rbinom(1, 1, 0.5)

}

return(choice)

}

# Memory agent function: makes choices based on opponent's historical choices

# Parameters:

# bias: baseline tendency to choose option 1 (log-odds scale)

# beta: sensitivity to memory (how strongly past choices affect decisions)

# otherRate: opponent's observed rate of choosing option 1 (probability scale)

# noise: probability of making a random choice

# Returns: binary choice (0 or 1)

MemoryAgentNoise_f <- function(bias, beta, otherRate, noise) {

# Calculate choice probability based on memory of opponent's choices

# Higher beta means agent responds more strongly to opponent's pattern

choice_prob <- inv_logit_scaled(bias + beta * logit_scaled(otherRate))

# Generate choice

choice <- rbinom(1, 1, choice_prob)

# With probability 'noise', override choice with random 50/50 selection

if (rbinom(1, 1, noise) == 1) {

choice = rbinom(1, 1, 0.5)

}

return(choice)

}

7.5 Generating the agents

[MISSING: PARALLELIZE]

# Function to simulate one agent's behavior

simulate_agent <- function(agent_id, population_params, n_trials, noise_level) {

# Sample agent-specific parameters from population distributions

rate <- rnorm(1, population_params$rateM, population_params$rateSD)

bias <- rnorm(1, population_params$biasM, population_params$biasSD)

beta <- rnorm(1, population_params$betaM, population_params$betaSD)

# Initialize choice vectors

randomChoice <- rep(NA, n_trials)

memoryChoice <- rep(NA, n_trials)

# Generate choices for each trial

for (trial in 1:n_trials) {

# Random agent makes choice based on bias parameter

randomChoice[trial] <- RandomAgentNoise_f(rate, noise_level)

# Memory agent uses history of random agent's choices

if (trial == 1) {

# First trial: no history, so use 50/50 chance

memoryChoice[trial] <- rbinom(1, 1, 0.5)

} else {

# Later trials: use memory of previous random agent choices

memoryChoice[trial] <- MemoryAgentNoise_f(

bias,

beta,

mean(randomChoice[1:trial], na.rm = TRUE), # Current memory

noise_level

)

}

}

# Create tibble with all agent data

return(tibble(

agent = agent_id,

trial = seq(n_trials),

randomChoice,

trueRate = rate, # Store true parameter values for later validation

memoryChoice,

noise = noise_level,

rateM = population_params$rateM,

rateSD = population_params$rateSD,

bias = bias,

beta = beta,

biasM = population_params$biasM,

biasSD = population_params$biasSD,

betaM = population_params$betaM,

betaSD = population_params$betaSD

))

}

# Population parameters bundled in a list

population_params <- list(

rateM = rateM,

rateSD = rateSD,

biasM = biasM,

biasSD = biasSD,

betaM = betaM,

betaSD = betaSD

)

# Simulate all agents (in a real application, consider using purrr::map functions)

d <- NULL

for (agent_id in 1:agents) {

agent_data <- simulate_agent(agent_id, population_params, trials, noise)

if (agent_id == 1) {

d <- agent_data

} else {

d <- rbind(d, agent_data)

}

}

# Calculate running statistics for each agent

d <- d %>% group_by(agent) %>% mutate(

# Cumulative proportions of choices (shows learning/strategy over time)

randomRate = cumsum(randomChoice) / seq_along(randomChoice),

memoryRate = cumsum(memoryChoice) / seq_along(memoryChoice)

)

# Display information about the simulated dataset

cat("Generated data for", agents, "agents with", trials, "trials each\n")

## Generated data for 100 agents with 120 trials each

## Total observations: 12000

## # A tibble: 5 × 16

## # Groups: agent [1]

## agent trial randomChoice trueRate memoryChoice noise rateM rateSD bias beta biasM

## <int> <int> <int> <dbl> <int> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 1 1 1 1.69 1 0 1.39 0.65 -0.0129 1.47 0

## 2 1 2 1 1.69 1 0 1.39 0.65 -0.0129 1.47 0

## 3 1 3 1 1.69 1 0 1.39 0.65 -0.0129 1.47 0

## 4 1 4 1 1.69 1 0 1.39 0.65 -0.0129 1.47 0

## 5 1 5 1 1.69 1 0 1.39 0.65 -0.0129 1.47 0

## # ℹ 5 more variables: biasSD <dbl>, betaM <dbl>, betaSD <dbl>, randomRate <dbl>,

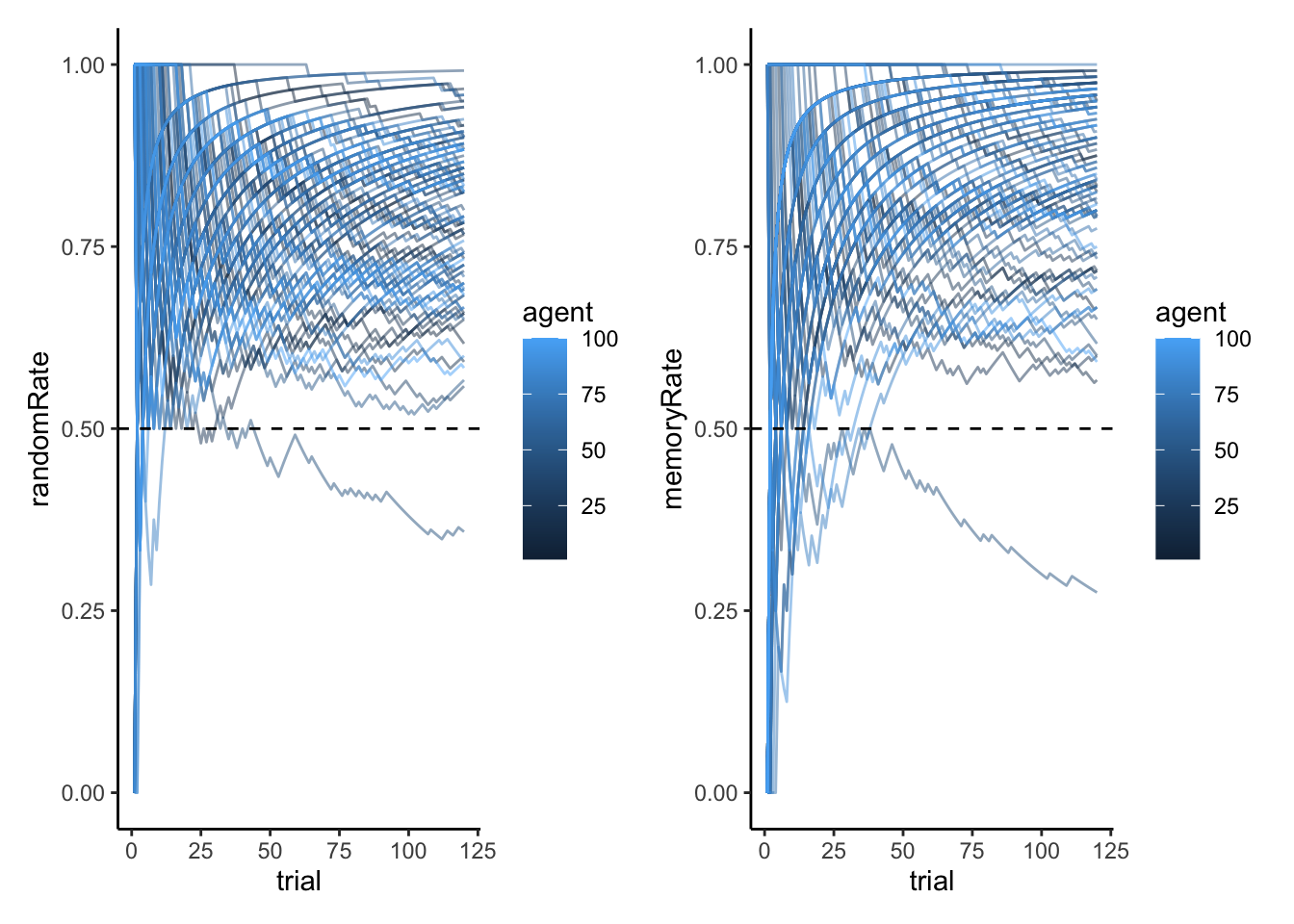

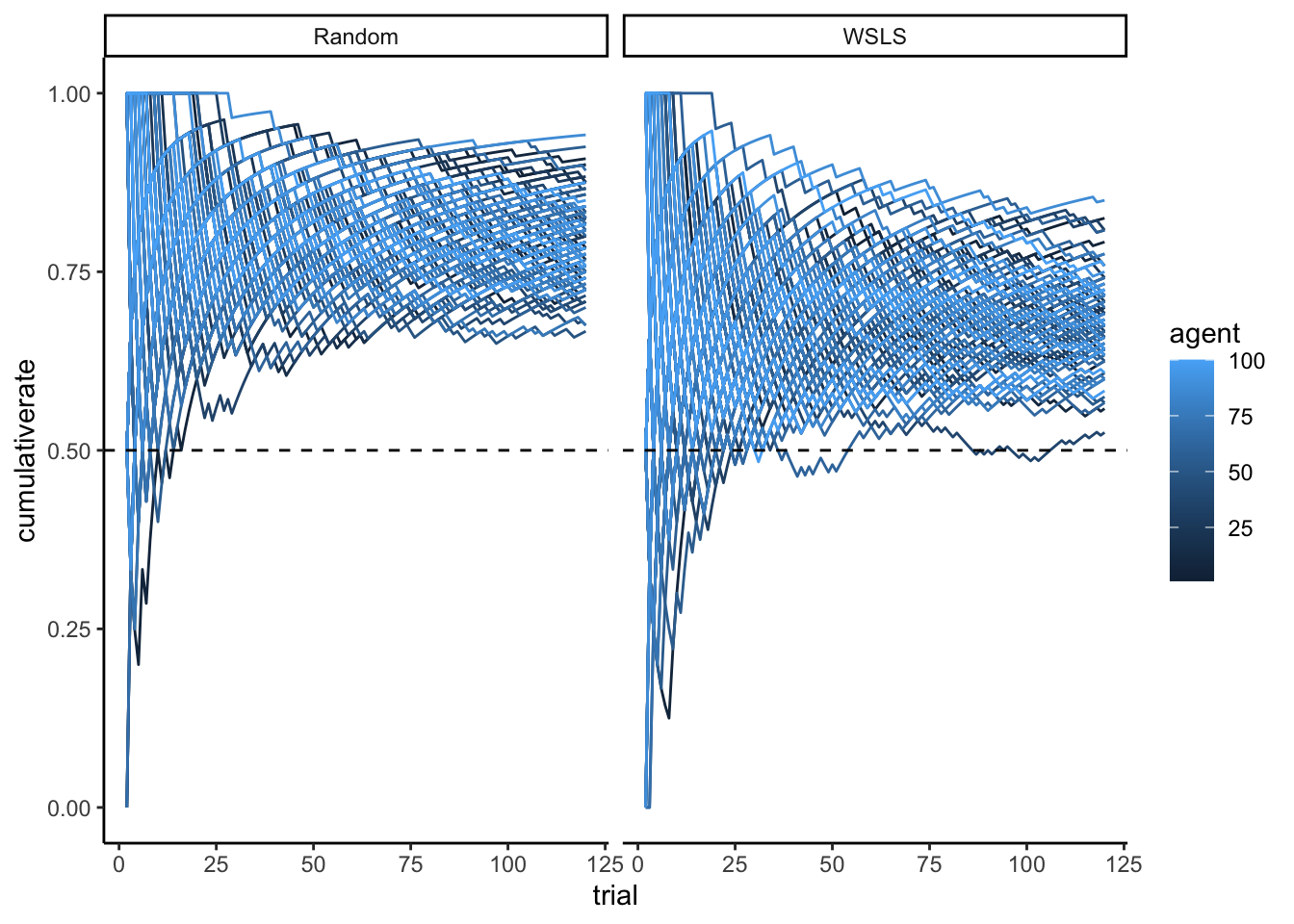

## # memoryRate <dbl>7.6 Plotting the agents

# Create plot themes that we'll reuse

custom_theme <- theme_classic() +

theme(

legend.position = "top",

plot.title = element_text(hjust = 0.5),

plot.subtitle = element_text(hjust = 0.5, size = 9)

)

# Plot 1: Trajectories of randomRate for all agents

p1 <- ggplot(d, aes(x = trial, y = randomRate)) +

geom_line(aes(group = agent, color = "Individual Agents"), alpha = 0.25) + # Individual agents

geom_smooth(aes(color = "Average"), se = TRUE, linewidth = 1.2) + # Group average (linewidth replaces the deprecated size for lines)

geom_hline(yintercept = 0.5, linetype = "dashed") +

ylim(0, 1) +

labs(

title = "Random Agent Behavior",

subtitle = "Cumulative proportion of 'right' choices over trials",

x = "Trial Number",

y = "Proportion of Right Choices",

color = NULL

) +

scale_color_manual(values = c("Individual Agents" = "gray50", "Average" = "blue")) +

custom_theme

# Plot 2: Trajectories of memoryRate for all agents

p2 <- ggplot(d, aes(x = trial, y = memoryRate)) +

geom_line(alpha = 0.15, aes(color = "Individual Agents", group = agent)) + # Individual agents

geom_smooth(aes(color = "Average"), se = TRUE, linewidth = 1.2) + # Group average (linewidth replaces the deprecated size for lines)

geom_hline(yintercept = 0.5, linetype = "dashed") +

ylim(0, 1) +

labs(

title = "Memory Agent Behavior",

subtitle = "Cumulative proportion of 'right' choices over trials",

x = "Trial Number",

y = "Proportion of Right Choices",

color = NULL

) +

scale_color_manual(values = c("Individual Agents" = "gray50", "Average" = "darkred")) +

custom_theme

# Display plots side by side

p1 + p2

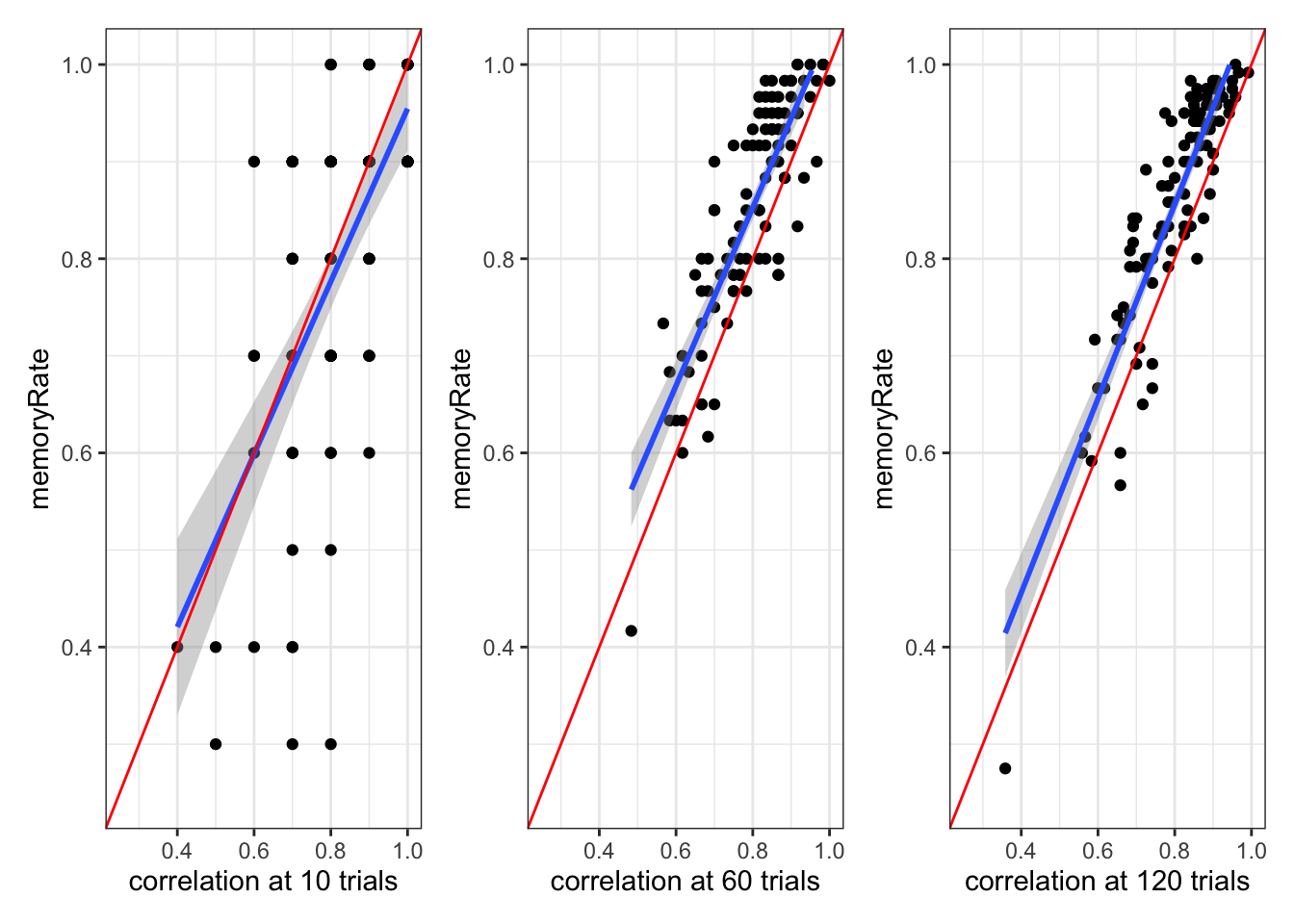

# Plot 3-5: Correlation between random and memory agent behavior at different timepoints

# These show how well memory agents track random agents' behavior over time

p3 <- d %>%

filter(trial == 10) %>%

ggplot(aes(randomRate, memoryRate)) +

geom_point(alpha = 0.6) +

geom_smooth(method = "lm", color = "blue") +

geom_abline(intercept = 0, slope = 1, color = "red", linetype = "dashed") +

coord_fixed(xlim = c(0, 1), ylim = c(0, 1)) +

labs(

title = "After 10 Trials",

x = "Random Agent Rate",

y = "Memory Agent Rate"

) +

custom_theme

p4 <- d %>%

filter(trial == 60) %>%

ggplot(aes(randomRate, memoryRate)) +

geom_point(alpha = 0.6) +

geom_smooth(method = "lm", color = "blue") +

geom_abline(intercept = 0, slope = 1, color = "red", linetype = "dashed") +

coord_fixed(xlim = c(0, 1), ylim = c(0, 1)) +

labs(

title = "After 60 Trials",

x = "Random Agent Rate",

y = "Memory Agent Rate"

) +

custom_theme

p5 <- d %>%

filter(trial == 120) %>%

ggplot(aes(randomRate, memoryRate)) +

geom_point(alpha = 0.6) +

geom_smooth(method = "lm", color = "blue") +

geom_abline(intercept = 0, slope = 1, color = "red", linetype = "dashed") +

coord_fixed(xlim = c(0, 1), ylim = c(0, 1)) +

labs(

title = "After 120 Trials",

x = "Random Agent Rate",

y = "Memory Agent Rate"

) +

custom_theme

# Display plots in a single row

p3 + p4 + p5 + plot_layout(guides = "collect") +

plot_annotation(

title = "Memory Agents' Adaptation to Random Agents Over Time",

subtitle = "Red line: perfect tracking; Blue line: actual relationship",

theme = theme(plot.title = element_text(hjust = 0.5),

plot.subtitle = element_text(hjust = 0.5))

)

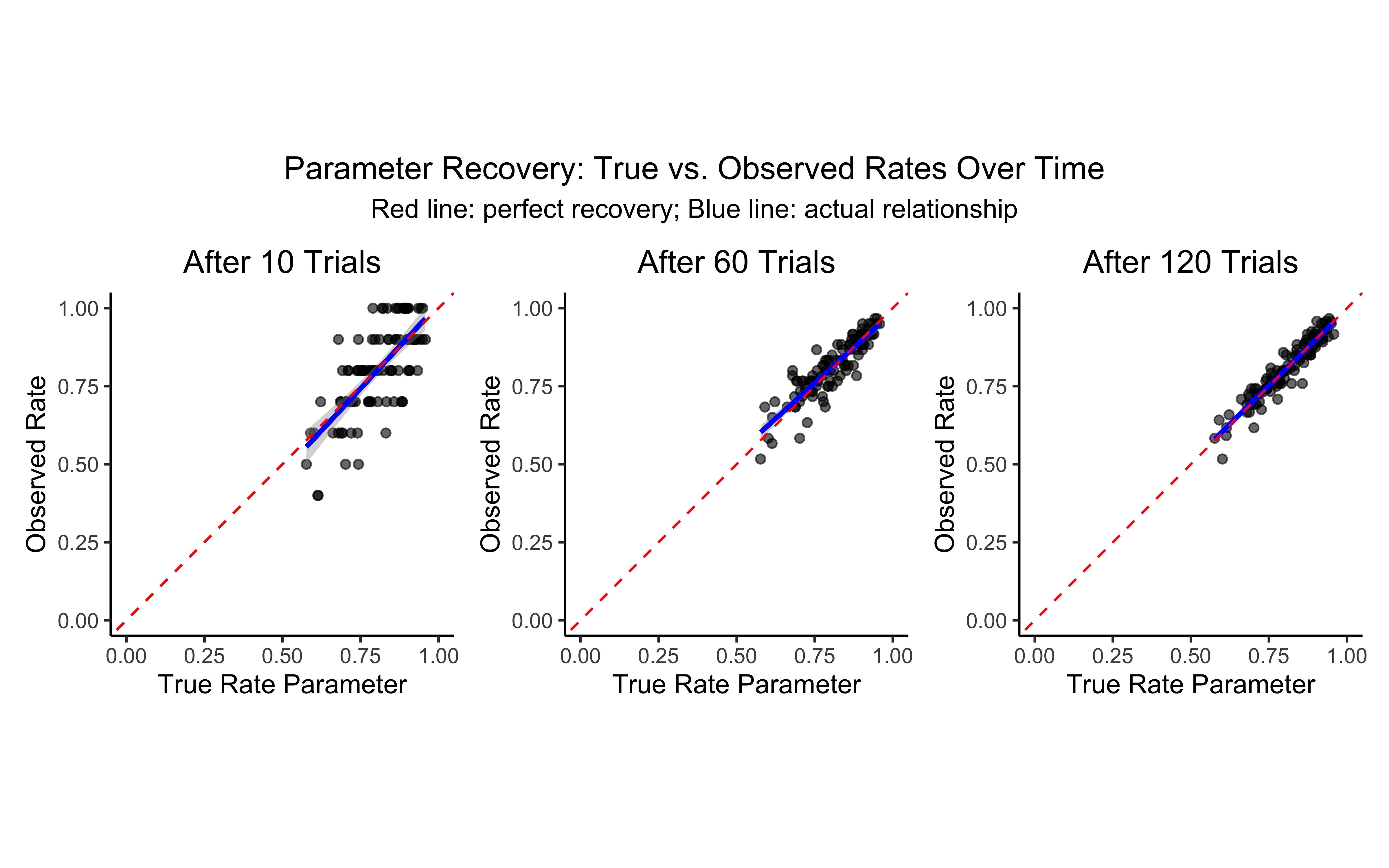

# Plot 6-8: Correlation between true rate parameter and observed rate

# These show how well we can recover the underlying rate parameter

p6 <- d %>%

filter(trial == 10) %>%

ggplot(aes(inv_logit_scaled(trueRate), randomRate)) +

geom_point(alpha = 0.6) +

geom_smooth(method = "lm", color = "blue") +

geom_abline(intercept = 0, slope = 1, color = "red", linetype = "dashed") +

coord_fixed(xlim = c(0, 1), ylim = c(0, 1)) +

labs(

title = "After 10 Trials",

x = "True Rate Parameter",

y = "Observed Rate"

) +

custom_theme

p7 <- d %>%

filter(trial == 60) %>%

ggplot(aes(inv_logit_scaled(trueRate), randomRate)) +

geom_point(alpha = 0.6) +

geom_smooth(method = "lm", color = "blue") +

geom_abline(intercept = 0, slope = 1, color = "red", linetype = "dashed") +

coord_fixed(xlim = c(0, 1), ylim = c(0, 1)) +

labs(

title = "After 60 Trials",

x = "True Rate Parameter",

y = "Observed Rate"

) +

custom_theme

p8 <- d %>%

filter(trial == 120) %>%

ggplot(aes(inv_logit_scaled(trueRate), randomRate)) +

geom_point(alpha = 0.6) +

geom_smooth(method = "lm", color = "blue") +

geom_abline(intercept = 0, slope = 1, color = "red", linetype = "dashed") +

coord_fixed(xlim = c(0, 1), ylim = c(0, 1)) +

labs(

title = "After 120 Trials",

x = "True Rate Parameter",

y = "Observed Rate"

) +

custom_theme

# Display plots in a single row

p6 + p7 + p8 + plot_layout(guides = "collect") +

plot_annotation(

title = "Parameter Recovery: True vs. Observed Rates Over Time",

subtitle = "Red line: perfect recovery; Blue line: actual relationship",

theme = theme(plot.title = element_text(hjust = 0.5),

plot.subtitle = element_text(hjust = 0.5))

)

Note that as the number of trials increases, the memory agents’ choice rates track the random agents’ rates more and more closely.

7.7 Coding the multilevel agents

7.7.1 Multilevel random

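Remember that the simulated population parameters for the biased (random) agents are:

- rateM <- 1.386

- rateSD <- 0.65

and for the memory agents:

- biasM <- 0

- biasSD <- 0.1

- betaM <- 1.5

- betaSD <- 0.3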

Prep the data

# For multilevel models, we need to reshape our data into matrices

# where rows are trials and columns are agents

# Function to create matrices from our long-format data

create_stan_data <- function(data, agent_type) {

# Select relevant choice column based on agent type

choice_col <- ifelse(agent_type == "random", "randomChoice", "memoryChoice")

other_col <- ifelse(agent_type == "random", "memoryChoice", "randomChoice")

# Create choice matrix

choice_data <- data %>%

dplyr::select(agent, trial, all_of(choice_col)) %>%

pivot_wider(

names_from = agent,

values_from = all_of(choice_col),

names_prefix = "agent_"

) %>%

dplyr::select(-trial) %>%

as.matrix()

# Create other-choice matrix (used for memory agent)

other_data <- data %>%

dplyr::select(agent, trial, all_of(other_col)) %>%

pivot_wider(

names_from = agent,

values_from = all_of(other_col),

names_prefix = "agent_"

) %>%

dplyr::select(-trial) %>%

as.matrix()

# Return data as a list ready for Stan

return(list(

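trials = nrow(choice_data), # Number of trials, taken from the matrix rather than a global variable

agents = ncol(choice_data), # Number of agents, taken from the matrix rather than a global variable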

h = choice_data,

other = other_data

))

}

# Create data for random agent model

data_random <- create_stan_data(d, "random")

# Create data for memory agent model

data_memory <- create_stan_data(d, "memory")

# Display dimensions of our data matrices

cat("Random agent matrix dimensions:", dim(data_random$h), "\n")

## Random agent matrix dimensions: 120 100

## Memory agent matrix dimensions: 120 100

7.8 Multilevel Random Agent Model

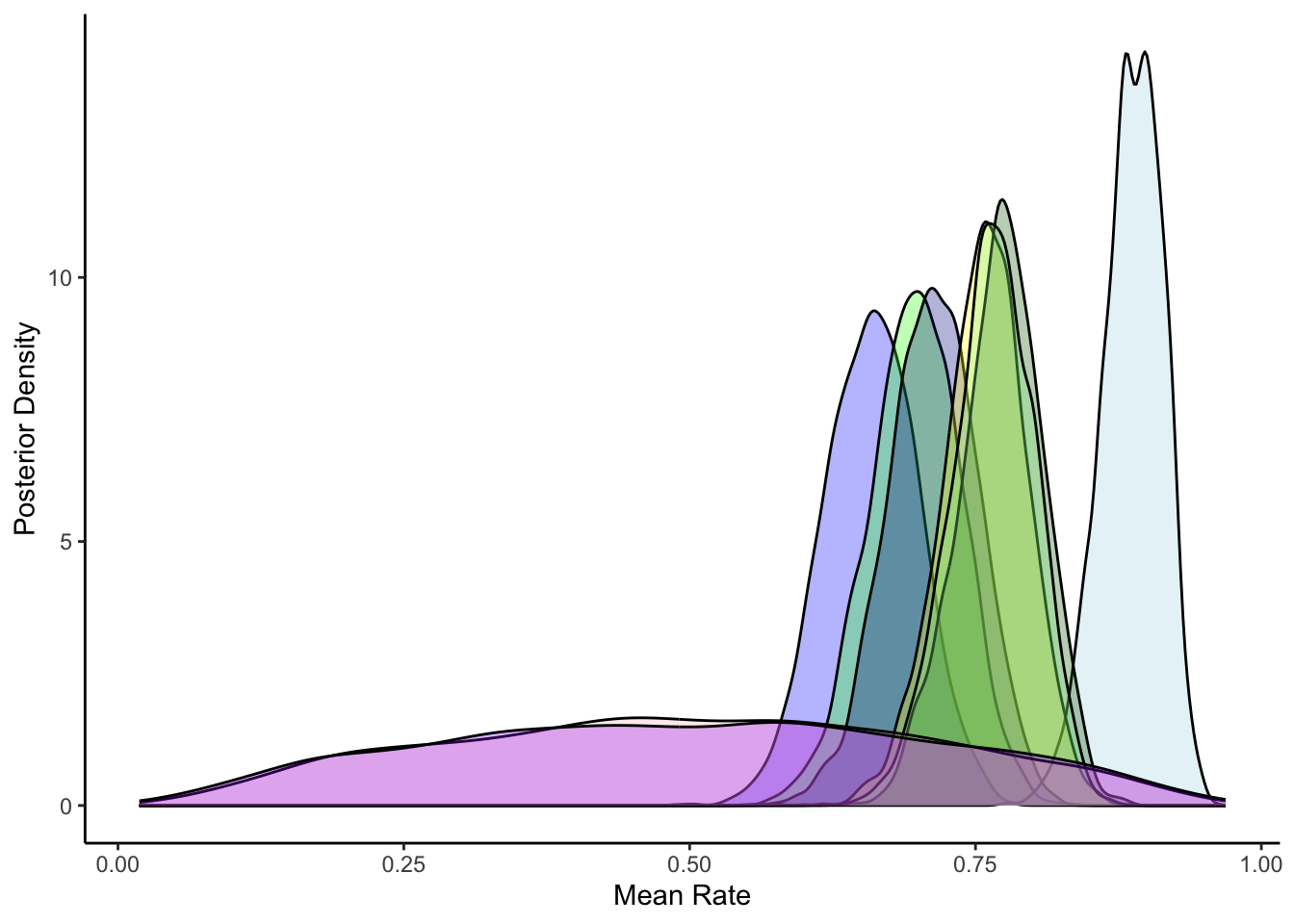

Our first multilevel model focuses on the biased random agent. For each agent, we’ll estimate an individual bias parameter (theta) that determines their probability of choosing “right” versus “left”. These individual parameters will be modeled as coming from a population distribution with mean thetaM and standard deviation thetaSD.

This approach balances two sources of information:

1. The agent’s individual choice patterns

2. The overall population distribution of bias parameters

The model implements the following hierarchical structure:

Population level: θᵐ ~ Normal(0, 1), θˢᵈ ~ Normal⁺(0, 0.3)

Individual level: θᵢ ~ Normal(θᵐ, θˢᵈ)

Data level: yᵢₜ ~ Bernoulli(logit⁻¹(θᵢ))
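Read from top to bottom, this structure is also a recipe for simulating data. The following base-R sketch draws one dataset from the priors. The population size and number of trials are hypothetical, and the truncated-normal draw uses the same inverse-CDF idea as the `normal_lb_rng` helper in the Stan code.

```r
# Prior predictive sketch of the hierarchical structure (base R only).
set.seed(42)
n_agents <- 5    # hypothetical small population
n_trials <- 20   # hypothetical number of trials per agent

# Population level: thetaM ~ Normal(0, 1), thetaSD ~ Normal+(0, 0.3)
thetaM <- rnorm(1, 0, 1)
# Inverse-CDF draw from a normal distribution truncated below at 0
u <- runif(1, pnorm(0, 0, 0.3), 1)
thetaSD <- qnorm(u, 0, 0.3)

# Individual level: theta_i ~ Normal(thetaM, thetaSD), on the log-odds scale
theta <- rnorm(n_agents, thetaM, thetaSD)

# Data level: y_it ~ Bernoulli(inv_logit(theta_i))
# Rows are trials and columns are agents, matching the layout passed to Stan
y <- sapply(theta, function(th) rbinom(n_trials, 1, plogis(th)))

dim(y)                     # n_trials x n_agents
round(plogis(theta), 2)    # each agent's rate on the probability scale
```

Fitting the model runs this recipe in reverse: given the observed matrix of choices, it infers the individual and population parameters that plausibly generated it.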

Let’s implement this in Stan:

# Stan model for multilevel random agent

stan_model <- "

/* Multilevel Bernoulli Model

* This model infers agent-specific choice biases from sequences of binary choices (0/1)

* The model assumes each agent has their own bias (theta) drawn from a population distribution

*/

functions {

// Generate random numbers from truncated normal distribution

real normal_lb_rng(real mu, real sigma, real lb) {

real p = normal_cdf(lb | mu, sigma); // cdf for bounds

real u = uniform_rng(p, 1);

return (sigma * inv_Phi(u)) + mu; // inverse cdf for value

}

}

data {

int<lower=1> trials; // Number of trials per agent

int<lower=1> agents; // Number of agents

array[trials, agents] int<lower=0, upper=1> h; // Choice data: 0 or 1 for each trial/agent

}

parameters {

real thetaM; // Population-level mean bias (log-odds scale)

real<lower=0> thetaSD; // Population-level SD of bias

array[agents] real theta; // Agent-specific biases (log-odds scale)

}

model {

// Population-level priors

target += normal_lpdf(thetaM | 0, 1); // Prior for population mean

target += normal_lpdf(thetaSD | 0, 0.3) // Half-normal prior for population SD

- normal_lccdf(0 | 0, 0.3); // Adjustment for truncation at 0

// Agent-level model

target += normal_lpdf(theta | thetaM, thetaSD); // Agent biases drawn from population

// Likelihood for observed choices

for (i in 1:agents) {

target += bernoulli_logit_lpmf(h[,i] | theta[i]); // Choice likelihood

}

}

generated quantities {

// Prior predictive samples

real thetaM_prior = normal_rng(0, 1);

real<lower=0> thetaSD_prior = normal_lb_rng(0, 0.3, 0);

real<lower=0, upper=1> theta_prior = inv_logit(normal_rng(thetaM_prior, thetaSD_prior));

// Posterior predictive samples

real<lower=0, upper=1> theta_posterior = inv_logit(normal_rng(thetaM, thetaSD));

// Predictive simulations

int<lower=0, upper=trials> prior_preds = binomial_rng(trials, inv_logit(thetaM_prior));

int<lower=0, upper=trials> posterior_preds = binomial_rng(trials, inv_logit(thetaM));

// Convert parameters to probability scale for easier interpretation

real<lower=0, upper=1> thetaM_prob = inv_logit(thetaM);

}

"

write_stan_file(

stan_model,

dir = "stan/",

basename = "W6_MultilevelBias.stan")

## [1] "/Users/au209589/Dropbox/Teaching/AdvancedCognitiveModeling23_book/stan/W6_MultilevelBias.stan"

# File path for saved model

model_file <- "simmodels/W6_MultilevelBias.RDS"

# Check if we need to rerun the simulation

if (regenerate_simulations || !file.exists(model_file)) {

file <- file.path("stan/W6_MultilevelBias.stan")

mod <- cmdstan_model(file,

cpp_options = list(stan_threads = TRUE),

stanc_options = list("O1"))

# Sample from the posterior

samples <- mod$sample(

data = data_random,

seed = 123,

chains = 2,

parallel_chains = 2,

threads_per_chain = 2,

iter_warmup = 2000,

iter_sampling = 2000,

refresh = 500,

max_treedepth = 20,

adapt_delta = 0.99

)

samples$save_object(file = model_file)

cat("Generated new model fit and saved to", model_file, "\n")

} else {

# Load existing results

samples <- readRDS(model_file)

cat("Loaded existing model fit from", model_file, "\n")

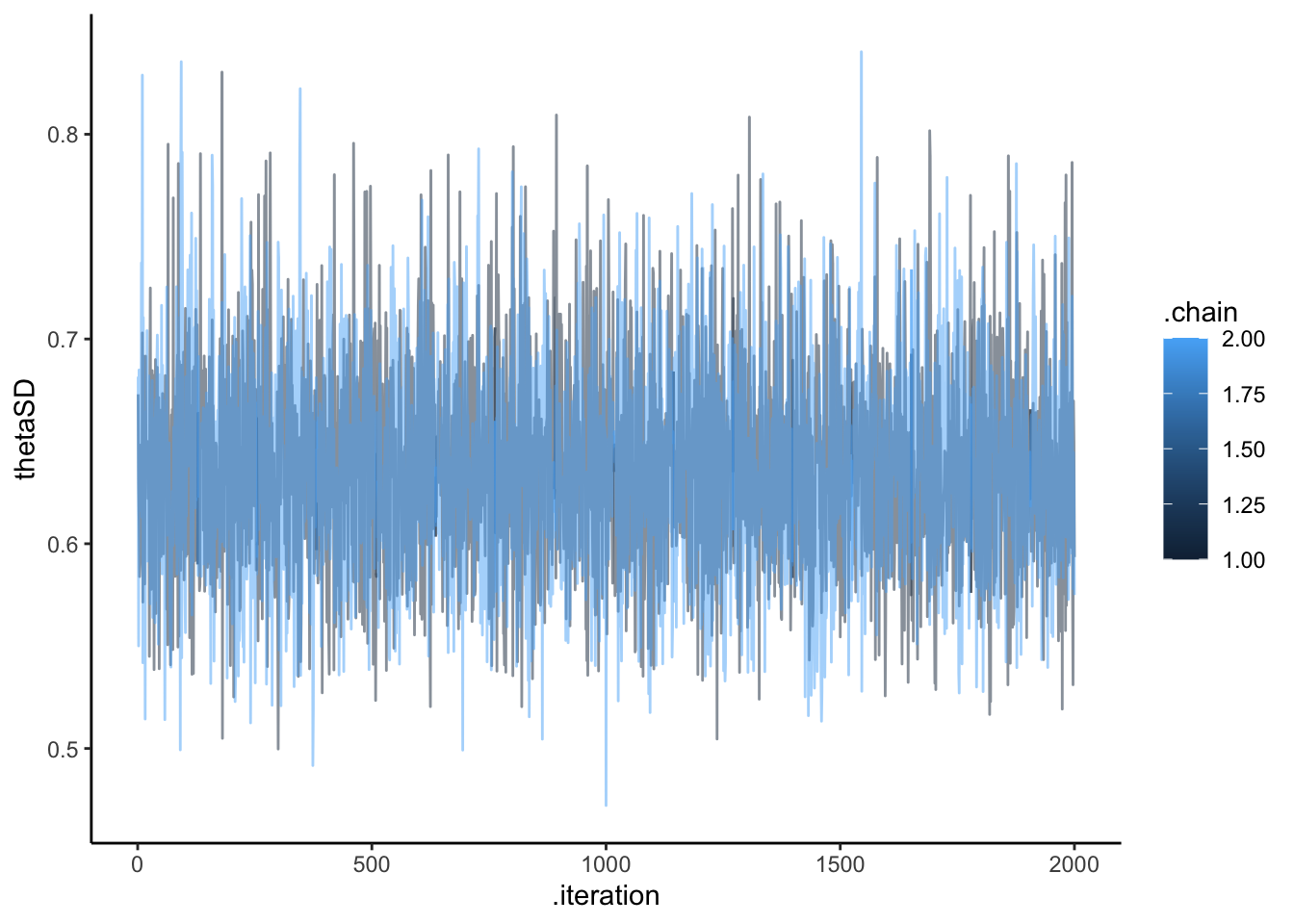

}## Loaded existing model fit from simmodels/W6_MultilevelBias.RDS7.8.1 Assessing multilevel random agents

Besides the usual prior predictive checks, prior-posterior update checks, and posterior predictive checks based on the population-level estimates, we also want to plot at least a few individual agents to assess how well the model does for them.

[MISSING: PLOT MODEL ESTIMATES AGAINST N OF HEADS BY PARTICIPANT]

# Load the model results

samples <- readRDS("simmodels/W6_MultilevelBias.RDS")

# Display summary statistics for key parameters

samples$summary(c("thetaM", "thetaSD", "thetaM_prob"))

## # A tibble: 3 × 10

## variable mean median sd mad q5 q95 rhat ess_bulk ess_tail

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 thetaM 1.35 1.35 0.0677 0.0673 1.24 1.46 1.00 5172. 3042.

## 2 thetaSD 0.638 0.635 0.0516 0.0510 0.559 0.727 1.00 3636. 2796.

## 3 thetaM_prob 0.793 0.794 0.0111 0.0110 0.775 0.811 1.00 5172. 3042.

# Extract posterior draws for analysis

draws_df <- as_draws_df(samples$draws())

# Create a function for standard diagnostic plots

plot_diagnostics <- function(parameter_name, true_value = NULL,

prior_name = paste0(parameter_name, "_prior")) {

# Trace plot to check mixing and convergence

p1 <- ggplot(draws_df, aes(.iteration, .data[[parameter_name]],

group = .chain, color = as.factor(.chain))) +

geom_line(alpha = 0.5) +

labs(title = paste("Trace Plot for", parameter_name),

x = "Iteration", y = parameter_name, color = "Chain") +

theme_classic()

# Prior-posterior update plot

p2 <- ggplot(draws_df) +

geom_histogram(aes(.data[[parameter_name]]), fill = "blue", alpha = 0.3) +

geom_histogram(aes(.data[[prior_name]]), fill = "red", alpha = 0.3)

# Add true value if provided

if (!is.null(true_value)) {

p2 <- p2 + geom_vline(xintercept = true_value, linetype = "dashed", linewidth = 1)

}

p2 <- p2 +

labs(title = paste("Prior-Posterior Update for", parameter_name),

subtitle = "Blue: posterior, Red: prior, Dashed: true value",

x = parameter_name, y = "Density") +

theme_classic()

# Return both plots

return(p1 + p2)

}

# Plot diagnostics for population mean

pop_mean_plots <- plot_diagnostics("thetaM", rateM)

# Plot diagnostics for population SD

pop_sd_plots <- plot_diagnostics("thetaSD", rateSD)

# Display diagnostic plots

pop_mean_plots

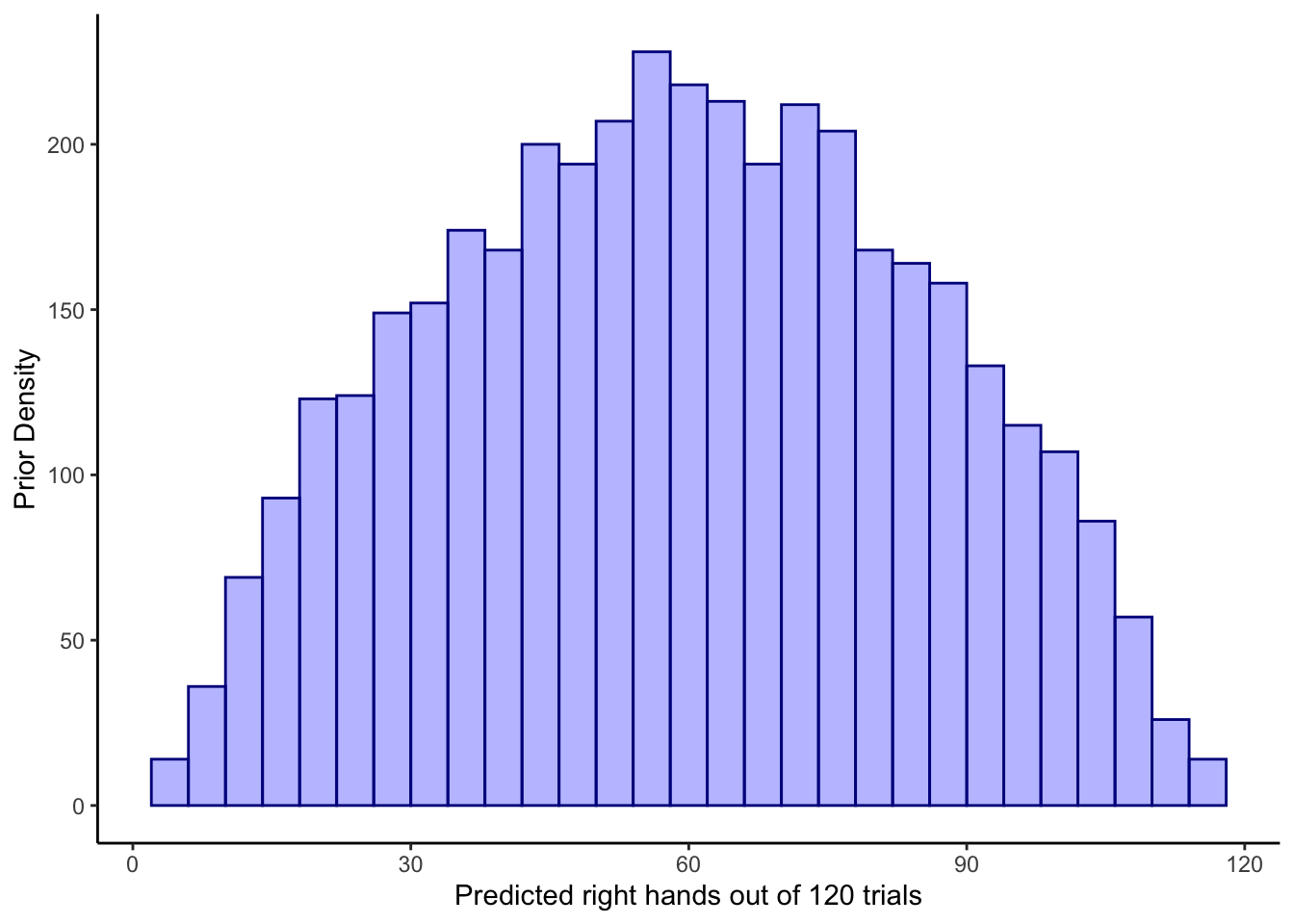

# Create predictive check plots

# Prior predictive check

p1 <- ggplot(draws_df) +

geom_histogram(aes(prior_preds),

bins = 30, fill = "blue", alpha = 0.3,

color = "darkblue") +

labs(title = "Prior Predictive Check",

subtitle = "Distribution of predicted 'right' choices out of 120 trials",

x = "Number of Right Choices", y = "Count") +

theme_classic()

# Posterior predictive check

p2 <- ggplot(draws_df) +

geom_histogram(aes(posterior_preds),

bins = 30, fill = "green", alpha = 0.3,

color = "darkgreen") +

geom_histogram(aes(prior_preds),

bins = 30, fill = "blue", alpha = 0.1,

color = "darkblue") +

labs(title = "Prior vs Posterior Predictive Check",

subtitle = "Green: posterior predictions, Blue: prior predictions",

x = "Number of Right Choices", y = "Count") +

theme_classic()

# Average observed choices per agent

agent_means <- colMeans(data_random$h)

observed_counts <- agent_means * trials

# Add observed counts to posterior predictive plot

p3 <- ggplot(draws_df) +

geom_histogram(aes(posterior_preds),

bins = 30, fill = "green", alpha = 0.3,

color = "darkgreen") +

geom_histogram(data = tibble(observed = observed_counts),

aes(observed), bins = 30, fill = "red", alpha = 0.3,

color = "darkred") +

labs(title = "Posterior Predictions vs Observed Data",

subtitle = "Green: posterior predictions, Red: actual observed counts",

x = "Number of Right Choices", y = "Count") +

theme_classic()

# Display predictive check plots

p1 + p2 + p3

7.9 Let’s look at individuals

# Extract individual parameter estimates

# Sample 10 random agents to examine more closely

sample_agents <- sample(1:agents, 10)

# Extract posterior samples for each agent's theta parameter

theta_samples <- matrix(NA, nrow = nrow(draws_df), ncol = agents)

for (a in 1:agents) {

# Convert from log-odds to probability scale for ease of interpretation

theta_samples[, a] <- inv_logit_scaled(draws_df[[paste0("theta[", a, "]")]])

}

# Calculate true rates

dddd <- unique(d[,c("agent", "trueRate")]) %>%

mutate(trueRate = inv_logit_scaled(trueRate))

# Create a dataframe with the true rates and empirical rates

agent_comparison <- tibble(

agent = 1:agents,

true_rate = dddd$trueRate, # True rate used in simulation

empirical_rate = colMeans(data_random$h) # Observed proportion of right choices

)

# Calculate summary statistics for posterior estimates

theta_summaries <- tibble(

agent = 1:agents,

mean = colMeans(theta_samples),

lower_95 = apply(theta_samples, 2, quantile, 0.025),

upper_95 = apply(theta_samples, 2, quantile, 0.975)

) %>%

# Join with true values for comparison

left_join(agent_comparison, by = "agent") %>%

# Calculate error metrics

mutate(

abs_error = abs(mean - true_rate),

in_interval = true_rate >= lower_95 & true_rate <= upper_95,

rel_error = abs_error / true_rate

)

# Plot 1: Posterior distributions for sample agents

posterior_samples <- tibble(

agent = rep(sample_agents, each = nrow(draws_df)),

sample_idx = rep(1:nrow(draws_df), times = length(sample_agents)),

estimated_rate = as.vector(theta_samples[, sample_agents])

)

p1 <- ggplot() +

# Add density plot for posterior distribution of rates

geom_density(data = posterior_samples,

aes(x = estimated_rate, group = agent),

fill = "skyblue", alpha = 0.5) +

# Add vertical line for true rate

geom_vline(data = agent_comparison %>% filter(agent %in% sample_agents),

aes(xintercept = true_rate),

color = "red", size = 1) +

# Add vertical line for empirical rate

geom_vline(data = agent_comparison %>% filter(agent %in% sample_agents),

aes(xintercept = empirical_rate),

color = "green4", size = 1, linetype = "dashed") +

# Facet by agent

facet_wrap(~agent, scales = "free_y") +

# Add formatting

labs(title = "Individual Agent Parameter Estimation",

subtitle = "Blue density: Posterior distribution\nRed line: True rate\nGreen dashed line: Empirical rate",

x = "Rate Parameter (Probability Scale)",

y = "Density") +

theme_classic() +

theme(strip.background = element_rect(fill = "white"),

strip.text = element_text(face = "bold")) +

xlim(0, 1)

# Plot 2: Parameter recovery for all agents

p2 <- ggplot(theta_summaries, aes(x = true_rate, y = mean)) +

geom_point(aes(color = in_interval), size = 3, alpha = 0.7) +

geom_errorbar(aes(ymin = lower_95, ymax = upper_95, color = in_interval),

width = 0.01, alpha = 0.3) +

geom_abline(intercept = 0, slope = 1, linetype = "dashed", color = "black") +

geom_smooth(method = "lm", color = "blue", se = FALSE, linetype = "dotted") +

scale_color_manual(values = c("TRUE" = "darkgreen", "FALSE" = "red")) +

labs(title = "Parameter Recovery Performance",

subtitle = "Each point represents one agent; error bars show 95% credible intervals",

x = "True Rate",

y = "Estimated Rate",

color = "True Value in\n95% Interval") +

theme_classic() +

xlim(0, 1) + ylim(0, 1)

# Calculate parameter estimation metrics

error_metrics <- tibble(

agent = 1:agents,

true_rate = dddd$trueRate,

empirical_rate = colMeans(data_random$h),

estimated_rate = colMeans(theta_samples),

lower_95 = apply(theta_samples, 2, quantile, 0.025),

upper_95 = apply(theta_samples, 2, quantile, 0.975),

absolute_error = abs(estimated_rate - true_rate),

relative_error = absolute_error / true_rate,

in_interval = true_rate >= lower_95 & true_rate <= upper_95

)

# Create direct comparison plot

p3 <- ggplot(error_metrics, aes(x = agent)) +

geom_errorbar(aes(ymin = lower_95, ymax = upper_95), width = 0.2, color = "blue", alpha = 0.5) +

geom_point(aes(y = estimated_rate), color = "blue", size = 3) +

geom_point(aes(y = true_rate), color = "red", shape = 4, size = 3, stroke = 2, alpha = 0.3) +

geom_point(aes(y = empirical_rate), color = "green4", shape = 1, size = 3, stroke = 2, alpha = 0.3) +

labs(title = "Parameter Estimation Accuracy by Agent",

subtitle = "Blue points and bars: Estimated rate with 95% credible interval\nRed X: True rate used in simulation\nGreen circle: Empirical rate from observed data",

x = "Agent ID",

y = "Rate") +

theme_classic()

# Plot 4: Shrinkage visualization

# Calculate population mean estimate

pop_mean <- mean(draws_df$thetaM_prob)

# Add shrinkage information to the data

shrinkage_data <- theta_summaries %>%

mutate(

# Distance from empirical to population mean (shows shrinkage direction)

empirical_to_pop = empirical_rate - pop_mean,

# Distance from estimate to empirical (shows amount of shrinkage)

estimate_to_empirical = mean - empirical_rate,

# Ratio of distances (shrinkage proportion)

shrinkage_ratio = 1 - (abs(mean - pop_mean) / abs(empirical_rate - pop_mean)),

# Define number of trials for sizing points

n_trials = trials

)

p4 <- ggplot(shrinkage_data, aes(x = empirical_rate, y = mean)) +

# Reference line for no shrinkage

geom_abline(intercept = 0, slope = 1, linetype = "dashed", color = "gray50") +

# Horizontal line for population mean

geom_hline(yintercept = pop_mean, linetype = "dotted", color = "blue") +

# Points for each agent

geom_point(aes(size = n_trials, color = abs(shrinkage_ratio)), alpha = 0.7) +

# Connect points to their empirical values to show shrinkage

geom_segment(aes(xend = empirical_rate, yend = empirical_rate,

color = abs(shrinkage_ratio)), alpha = 0.3) +

scale_color_gradient(low = "yellow", high = "red") +

labs(title = "Shrinkage Effects in Multilevel Modeling",

subtitle = "Points above the diagonal were pulled up toward the population mean, points below were pulled down;\nBlue dotted line: population mean estimate",

x = "Empirical Rate (Observed Proportion)",

y = "Posterior Mean Estimate",

color = "Shrinkage\nMagnitude",

size = "Number of\nTrials") +

theme_classic() +

xlim(0, 1) + ylim(0, 1)

# Display plots

p1
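To make the shrinkage ratio used in the plot above concrete, the following sketch applies the same formula to three made-up agents. All numbers here (`pop_mean_toy`, `empirical_toy`, `posterior_toy`) are hypothetical and are not taken from the fitted model.

```r
# Hypothetical values for three agents (not output from the fitted model)
pop_mean_toy  <- 0.80                  # assumed population mean estimate
empirical_toy <- c(0.95, 0.60, 0.82)   # assumed observed proportions
posterior_toy <- c(0.90, 0.70, 0.81)   # assumed posterior means

# Same formula as shrinkage_ratio in the plotting code:
# 0 = the estimate stays at the empirical rate,
# 1 = the estimate sits exactly on the population mean
shrinkage_toy <- 1 - abs(posterior_toy - pop_mean_toy) /
  abs(empirical_toy - pop_mean_toy)

round(shrinkage_toy, 2)
```

In this toy case the first agent moves a third of the way toward the population mean and the other two move halfway. Note that the ratio is undefined when an agent's empirical rate equals the population mean exactly, because the denominator is then zero.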

7.10 Prior sensitivity checks

# File path for saved sensitivity results

sensitivity_results_file <- "simdata/W6_sensitivity_results.csv"

# Check if we need to rerun the analysis

if (regenerate_simulations || !file.exists(sensitivity_results_file)) {

# Define a range of prior specifications to test

prior_settings <- expand_grid(

prior_mean_theta = c(-1, 0, 1), # Different prior means for population rate

prior_sd_theta = c(0.5, 1, 2), # Different prior SDs for population rate

prior_scale_theta_sd = c(0.1, 0.3, 0.5, 1) # Different scales for the SD hyperprior

)

# First, create a single Stan model that accepts prior hyperparameters as data

stan_code <- "

functions {

real normal_lb_rng(real mu, real sigma, real lb) {

real p = normal_cdf(lb | mu, sigma);

real u = uniform_rng(p, 1);

return (sigma * inv_Phi(u)) + mu;

}

}

data {

int<lower=1> trials;

int<lower=1> agents;

array[trials, agents] int<lower=0, upper=1> h;

real prior_mean_theta;

real<lower=0> prior_sd_theta;

real<lower=0> prior_scale_theta_sd;

}

parameters {

real thetaM;

real<lower=0> thetaSD;

array[agents] real theta;

}

model {

// Population-level priors with specified hyperparameters

target += normal_lpdf(thetaM | prior_mean_theta, prior_sd_theta);

target += normal_lpdf(thetaSD | 0, prior_scale_theta_sd) - normal_lccdf(0 | 0, prior_scale_theta_sd);

// Agent-level model

target += normal_lpdf(theta | thetaM, thetaSD);

// Likelihood

for (i in 1:agents) {

target += bernoulli_logit_lpmf(h[,i] | theta[i]);

}

}

generated quantities {

real thetaM_prob = inv_logit(thetaM);

}

"

# Write model to file

writeLines(stan_code, "stan/sensitivity_model.stan")

# Compile the model once

mod_sensitivity <- cmdstan_model("stan/sensitivity_model.stan",

cpp_options = list(stan_threads = TRUE),

stanc_options = list("O1"))

# Function to fit model with specified priors

fit_with_priors <- function(prior_mean_theta, prior_sd_theta, prior_scale_theta_sd) {

# Create data with prior specifications

data_with_priors <- c(data_random, list(

prior_mean_theta = prior_mean_theta,

prior_sd_theta = prior_sd_theta,

prior_scale_theta_sd = prior_scale_theta_sd

))

# Fit model

fit <- mod_sensitivity$sample(

data = data_with_priors,

seed = 123,

chains = 1,

parallel_chains = 1,

threads_per_chain = 1,

iter_warmup = 1000,

iter_sampling = 1000,

refresh = 0

)

# Extract posterior summaries

summary_df <- fit$summary(c("thetaM", "thetaSD", "thetaM_prob"))

# Return results

return(tibble(

prior_mean_theta = prior_mean_theta,

prior_sd_theta = prior_sd_theta,

prior_scale_theta_sd = prior_scale_theta_sd,

est_thetaM = summary_df$mean[summary_df$variable == "thetaM"],

est_thetaSD = summary_df$mean[summary_df$variable == "thetaSD"],

est_thetaM_prob = summary_df$mean[summary_df$variable == "thetaM_prob"]

))

}

# Run parallel analysis

library(furrr)

plan(multisession, workers = 4) # Using fewer workers to reduce resource use

sensitivity_results <- future_pmap_dfr(

prior_settings,

function(prior_mean_theta, prior_sd_theta, prior_scale_theta_sd) {

fit_with_priors(prior_mean_theta, prior_sd_theta, prior_scale_theta_sd)

},

.options = furrr_options(seed = TRUE)

)

# Save results for future use

write_csv(sensitivity_results, sensitivity_results_file)

cat("Generated new sensitivity analysis results and saved to", sensitivity_results_file, "\n")

} else {

# Load existing results

sensitivity_results <- read_csv(sensitivity_results_file)

cat("Loaded existing sensitivity analysis results from", sensitivity_results_file, "\n")

}

## Loaded existing sensitivity analysis results from simdata/W6_sensitivity_results.csv

# Plot for population mean estimate

p1 <- ggplot(sensitivity_results,

aes(x = prior_mean_theta,

y = est_thetaM_prob,

color = factor(prior_scale_theta_sd))) +

geom_point(size = 3) +

geom_hline(yintercept = inv_logit_scaled(d$rateM), linetype = "dashed") +

labs(title = "Sensitivity of Population Mean Parameter",

subtitle = "Dashed line shows average empirical rate across participants",

x = "Prior Mean, SD for Population Parameter",

y = "Estimated Population Mean (probability scale)",

color = "Prior Scale for\nPopulation SD") +

theme_classic() +

ylim(0.7, 0.9) +

facet_wrap(.~prior_sd_theta) +

theme(axis.text.x = element_text(angle = 45, hjust = 1))

# Plot for population SD parameter

p2 <- ggplot(sensitivity_results,

aes(x = prior_mean_theta,

y = est_thetaSD,

color = factor(prior_scale_theta_sd))) +

geom_point(size = 3) +

geom_hline(yintercept = d$rateSD, linetype = "dashed") +

labs(title = "Sensitivity of Population SD Parameter",

x = "Prior Mean, SD for Population Parameter",

y = "Estimated Population SD",

color = "Prior Scale for\nPopulation SD") +

theme_classic() +

ylim(0.5, 0.7) +

facet_wrap(.~prior_sd_theta) +

theme(axis.text.x = element_text(angle = 45, hjust = 1))

# Display plots

p1 / p2
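The sensitivity model writes the half-normal prior on `thetaSD` as `normal_lpdf(thetaSD | 0, s) - normal_lccdf(0 | 0, s)`. The sketch below checks in base R what that subtraction does: it renormalizes a normal density truncated at zero, which amounts to adding `log(2)`. The scale `s` and the evaluation points `x` are arbitrary illustration values.

```r
s <- 0.3               # prior scale (one of the values in prior_settings)
x <- c(0.05, 0.3, 1)   # arbitrary positive values standing in for thetaSD

# R translation of: normal_lpdf(x | 0, s) - normal_lccdf(0 | 0, s)
log_half_normal <- dnorm(x, mean = 0, sd = s, log = TRUE) -
  pnorm(0, mean = 0, sd = s, lower.tail = FALSE, log.p = TRUE)

# Truncating at zero removes half of the probability mass,
# so the density on the positive side doubles
log_closed_form <- log(2) + dnorm(x, mean = 0, sd = s, log = TRUE)

all.equal(log_half_normal, log_closed_form)

# The resulting density integrates to 1 over [0, Inf)
half_normal_mass <- integrate(function(v) 2 * dnorm(v, 0, s),
                              lower = 0, upper = Inf)$value
half_normal_mass
```

Because the correction does not depend on `thetaSD`, it only shifts the log density by a constant for a given prior scale. Keeping it makes the prior a properly normalized density.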

7.11 Parameter recovery

# File path for saved recovery results

recovery_results_file <- "simdata/W6_recovery_results.csv"

# Check if we need to rerun the analysis

if (regenerate_simulations || !file.exists(recovery_results_file)) {

# Function to simulate data and recover parameters

recover_parameters <- function(true_thetaM, true_thetaSD, n_agents, n_trials) {

# Generate agent-specific true rates

agent_thetas <- rnorm(n_agents, true_thetaM, true_thetaSD)

# Generate choice data

sim_data <- matrix(NA, nrow = n_trials, ncol = n_agents)

for (a in 1:n_agents) {

sim_data[,a] <- rbinom(n_trials, 1, inv_logit_scaled(agent_thetas[a]))

}

# Prepare data for Stan

stan_data <- list(

trials = n_trials,

agents = n_agents,

h = sim_data,

prior_mean_theta = 0, # Using neutral priors

prior_sd_theta = 1,

prior_scale_theta_sd = 0.3

)

# Load the model (cmdstanr reuses the compiled executable when the Stan file is unchanged)

mod_sensitivity <- cmdstan_model("stan/sensitivity_model.stan",

cpp_options = list(stan_threads = TRUE),

stanc_options = list("O1"))

# Fit model

fit <- mod_sensitivity$sample(

data = stan_data,

seed = 123,

chains = 1,

parallel_chains = 1,

threads_per_chain = 1,

iter_warmup = 1000,

iter_sampling = 1000,

refresh = 0

)

# Extract estimates

summary_df <- fit$summary(c("thetaM", "thetaSD"))

# Return comparison of true vs. estimated parameters

return(tibble(

true_thetaM = true_thetaM,

true_thetaSD = true_thetaSD,

n_agents = n_agents,

n_trials = n_trials,

est_thetaM = summary_df$mean[summary_df$variable == "thetaM"],

est_thetaSD = summary_df$mean[summary_df$variable == "thetaSD"]

))

}

# Create parameter grid for recovery study

recovery_settings <- expand_grid(

true_thetaM = c(-1, 0, 1),

true_thetaSD = c(0.1, 0.3, 0.5, 0.7),

n_agents = c(20, 50),

n_trials = c(60, 120)

)

# Run parameter recovery study (this can be very time-consuming)

recovery_results <- pmap_dfr(

recovery_settings,

function(true_thetaM, true_thetaSD, n_agents, n_trials) {

recover_parameters(true_thetaM, true_thetaSD, n_agents, n_trials)

}

)

# Save results for future use

write_csv(recovery_results, recovery_results_file)

cat("Generated new parameter recovery results and saved to", recovery_results_file, "\n")

} else {

# Load existing results

recovery_results <- read_csv(recovery_results_file)

cat("Loaded existing parameter recovery results from", recovery_results_file, "\n")

}

## Loaded existing parameter recovery results from simdata/W6_recovery_results.csv

# Visualize parameter recovery results

p1 <- ggplot(recovery_results,

aes(x = true_thetaM, y = est_thetaM,

color = factor(true_thetaSD))) +

geom_point(size = 3) +

geom_abline(intercept = 0, slope = 1, linetype = "dashed") +

facet_grid(n_agents ~ n_trials,

labeller = labeller(

n_agents = function(x) paste0("Agents: ", x),

n_trials = function(x) paste0("Trials: ", x)

)) +

labs(title = "Recovery of Population Mean Parameter",

x = "True Value",

y = "Estimated Value",

color = "True Population SD") +

theme_classic()

p2 <- ggplot(recovery_results,

aes(x = true_thetaSD, y = est_thetaSD,

color = factor(true_thetaM))) +

geom_point(size = 3) +

geom_abline(intercept = 0, slope = 1, linetype = "dashed") +

facet_grid(n_agents ~ n_trials,

labeller = labeller(

n_agents = function(x) paste0("Agents: ", x),

n_trials = function(x) paste0("Trials: ", x)

)) +

labs(title = "Recovery of Population SD Parameter",

x = "True Value",

y = "Estimated Value",

color = "True Population Mean") +

theme_classic()

# Display parameter recovery plots

p1 / p2
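The recovery plots can be complemented with a numeric summary of estimation error. The sketch below computes signed bias and root mean squared error per sample size. The data frame `toy_recovery` is a made-up stand-in that only borrows column names from `recovery_results`; its numbers are not results from the study.

```r
# Hypothetical recovery results (made-up numbers for illustration only)
toy_recovery <- data.frame(
  n_agents     = c(20, 20, 50, 50),
  true_thetaSD = c(0.3, 0.7, 0.3, 0.7),
  est_thetaSD  = c(0.36, 0.58, 0.32, 0.66)
)

# Signed estimation error for each simulated setting
toy_recovery$error <- toy_recovery$est_thetaSD - toy_recovery$true_thetaSD

# Mean error (bias) and root mean squared error (RMSE)
summarise_error <- function(e) c(bias = mean(e), rmse = sqrt(mean(e^2)))

# One row per number of agents, columns "bias" and "rmse"
recovery_summary <- t(sapply(split(toy_recovery$error, toy_recovery$n_agents),
                             summarise_error))
recovery_summary
```

Applied to the real `recovery_results`, the same split could be done by `n_trials` or by `true_thetaSD` to see which design factor matters most for recovery.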

7.12 Multilevel Memory Agent Model

Now we’ll implement a more complex model for the memory agent. This model has two parameters per agent:

- bias: baseline tendency to choose “right” (log-odds scale)

- beta: sensitivity to the memory of opponent’s past choices

Like the random agent model, we’ll use a multilevel structure where individual parameters come from population distributions. However, this model presents some additional challenges:

- We need to handle two parameters per agent

- We need to track and update memory states during the model

- The hierarchical structure is more complex

The hierarchical structure for this model is:

Population level:

μ_bias ~ Normal(0, 1), σ_bias ~ Normal⁺(0, 0.3)

μ_beta ~ Normal(0, 0.3), σ_beta ~ Normal⁺(0, 0.3)

Individual level:

bias_i ~ Normal(μ_bias, σ_bias)

beta_i ~ Normal(μ_beta, σ_beta)

Transformed variables:

- memory_it = running average of the opponent’s choices before trial t (initialized at 0.5 on the first trial)

Data level:

- y_it ~ Bernoulli(logit⁻¹(bias_i + beta_i * logit(memory_it)))
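The hierarchy above can be read as a recipe for simulating data. The following base R sketch walks through it top down for a handful of agents. The population values, the opponent's rate of 0.7, and all variable names are assumptions chosen for illustration; the memory update and the clamping at 0.01 and 0.99 mirror the Stan code that follows.

```r
set.seed(1)      # arbitrary seed for reproducibility
n_agents <- 5
n_trials <- 120

# Population level (hypothetical values, chosen only for illustration)
mu_bias <- 0; sigma_bias <- 0.3
mu_beta <- 1; sigma_beta <- 0.3

# Individual level: each agent draws its own bias and beta
bias_i <- rnorm(n_agents, mu_bias, sigma_bias)
beta_i <- rnorm(n_agents, mu_beta, sigma_beta)

# Opponent choices (assumption: a random agent choosing 1 with probability 0.7)
other <- matrix(rbinom(n_trials * n_agents, 1, 0.7), nrow = n_trials)

memory <- matrix(NA_real_, nrow = n_trials, ncol = n_agents)
y      <- matrix(NA_integer_, nrow = n_trials, ncol = n_agents)

for (i in seq_len(n_agents)) {
  memory[1, i] <- 0.5   # no information before the first trial
  for (t in seq_len(n_trials)) {
    # Data level: choice probability from bias and logit(memory)
    p_right <- plogis(bias_i[i] + beta_i[i] * qlogis(memory[t, i]))
    y[t, i] <- rbinom(1, 1, p_right)
    if (t < n_trials) {
      # Running-average update of the memory of the opponent's choices
      m <- memory[t, i] + (other[t, i] - memory[t, i]) / t
      if (m == 0) m <- 0.01   # keep logit(memory) finite
      if (m == 1) m <- 0.99
      memory[t + 1, i] <- m
    }
  }
}

colMeans(y)   # proportion of "right" choices per simulated agent
```

Apart from the clamping, the memory on trial t + 1 is simply the mean of the opponent's first t choices, so the initial value of 0.5 only affects the first trial.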

Let’s implement this model.

[MISSING: DAGS]

Code, compile and fit the model

# Stan model for multilevel memory agent with centered parameterization

stan_model <- "

// Multilevel Memory Agent Model (Centered Parameterization)

//

functions{

real normal_lb_rng(real mu, real sigma, real lb) {

real p = normal_cdf(lb | mu, sigma); // cdf for bounds

real u = uniform_rng(p, 1);

return (sigma * inv_Phi(u)) + mu; // inverse cdf for value

}

}

// The input data for the model

data {

int<lower = 1> trials; // Number of trials per agent

int<lower = 1> agents; // Number of agents

array[trials, agents] int h; // Memory agent choices

array[trials, agents] int other; // Opponent (random agent) choices

}

// Parameters to be estimated

parameters {

// Population-level parameters

real biasM; // Mean of baseline bias

real<lower = 0> biasSD; // SD of baseline bias

real betaM; // Mean of memory sensitivity

real<lower = 0> betaSD; // SD of memory sensitivity

// Individual-level parameters

array[agents] real bias; // Individual baseline bias parameters

array[agents] real beta; // Individual memory sensitivity parameters

}

// Transformed parameters (derived quantities)

transformed parameters {

// Memory state for each agent and trial

array[trials, agents] real memory;

// Calculate memory states based on opponent's choices

for (agent in 1:agents){

// Initial memory state (no prior information)

memory[1, agent] = 0.5;

for (trial in 1:trials){

// Update memory based on opponent's choices

if (trial < trials){

// Simple averaging memory update

memory[trial + 1, agent] = memory[trial, agent] +

((other[trial, agent] - memory[trial, agent]) / trial);

// Handle edge cases to avoid numerical issues

if (memory[trial + 1, agent] == 0){memory[trial + 1, agent] = 0.01;}

if (memory[trial + 1, agent] == 1){memory[trial + 1, agent] = 0.99;}

}

}

}

}

// Model definition

model {

// Population-level priors

target += normal_lpdf(biasM | 0, 1);

target += normal_lpdf(biasSD | 0, .3) - normal_lccdf(0 | 0, .3); // Half-normal

target += normal_lpdf(betaM | 0, .3);

target += normal_lpdf(betaSD | 0, .3) - normal_lccdf(0 | 0, .3); // Half-normal

// Individual-level priors

target += normal_lpdf(bias | biasM, biasSD);

target += normal_lpdf(beta | betaM, betaSD);

// Likelihood

for (agent in 1:agents) {

for (trial in 1:trials) {

target += bernoulli_logit_lpmf(h[trial,agent] |

bias[agent] + logit(memory[trial, agent]) * beta[agent]);

}

}

}

// Generated quantities for model checking and predictions

generated quantities{

// Prior samples for checking

real biasM_prior;

real<lower=0> biasSD_prior;

real betaM_prior;

real<lower=0> betaSD_prior;

real bias_prior;

real beta_prior;

// Predictive simulations at fixed values of logit(memory)

int<lower=0, upper = trials> prior_preds0; // logit(memory) = 0, i.e. memory = 0.5 (memory term drops out)

int<lower=0, upper = trials> prior_preds1; // logit(memory) = 1, i.e. memory of about 0.73

int<lower=0, upper = trials> prior_preds2; // logit(memory) = 2, i.e. memory of about 0.88

int<lower=0, upper = trials> posterior_preds0;

int<lower=0, upper = trials> posterior_preds1;

int<lower=0, upper = trials> posterior_preds2;

// Individual-level predictions (for each agent)

array[agents] int<lower=0, upper = trials> posterior_predsID0;

array[agents] int<lower=0, upper = trials> posterior_predsID1;

array[agents] int<lower=0, upper = trials> posterior_predsID2;

// Generate prior samples

biasM_prior = normal_rng(0,1);

biasSD_prior = normal_lb_rng(0,0.3,0);

betaM_prior = normal_rng(0,0.3); // matches the normal(0, .3) prior on betaM in the model block

betaSD_prior = normal_lb_rng(0,0.3,0);

bias_prior = normal_rng(biasM_prior, biasSD_prior);

beta_prior = normal_rng(betaM_prior, betaSD_prior);

// Prior predictive checks with different memory values

prior_preds0 = binomial_rng(trials, inv_logit(bias_prior + 0 * beta_prior));

prior_preds1 = binomial_rng(trials, inv_logit(bias_prior + 1 * beta_prior));

prior_preds2 = binomial_rng(trials, inv_logit(bias_prior + 2 * beta_prior));

// Posterior predictive checks with different memory values

posterior_preds0 = binomial_rng(trials, inv_logit(biasM + 0 * betaM));

posterior_preds1 = binomial_rng(trials, inv_logit(biasM + 1 * betaM));

posterior_preds2 = binomial_rng(trials, inv_logit(biasM + 2 * betaM));

// Individual-level predictions

for (agent in 1:agents){

posterior_predsID0[agent] = binomial_rng(trials,

inv_logit(bias[agent] + 0 * beta[agent]));

posterior_predsID1[agent] = binomial_rng(trials,

inv_logit(bias[agent] + 1 * beta[agent]));

posterior_predsID2[agent] = binomial_rng(trials,

inv_logit(bias[agent] + 2 * beta[agent]));

}

}

"

write_stan_file(

stan_model,

dir = "stan/",

basename = "W6_MultilevelMemory.stan")

# File path for saved model

model_file <- "simmodels/W6_MultilevelMemory_centered.RDS"

# Check if we need to rerun the simulation

if (regenerate_simulations || !file.exists(model_file)) {

file <- file.path("stan/W6_MultilevelMemory.stan")

mod <- cmdstan_model(file,

cpp_options = list(stan_threads = TRUE),

stanc_options = list("O1"))

# Sample from the posterior distribution

samples_mlvl <- mod$sample(

data = data_memory,

seed = 123,

chains = 2,

parallel_chains = 2,

threads_per_chain = 1,

iter_warmup = 2000,

iter_sampling = 2000,

refresh = 500,

max_treedepth = 20,

adapt_delta = 0.99

)

# Save the model results

samples_mlvl$save_object(file = model_file)

cat("Generated new model fit and saved to", model_file, "\n")

} else {

# Load existing results

samples_mlvl <- readRDS(model_file)

cat("Loaded existing model fit from", model_file, "\n")

}

7.12.1 Assessing multilevel memory

# Check if samples_mlvl exists

if (!exists("samples_mlvl")) {

cat("Loading multilevel model samples...\n")

samples_mlvl <- readRDS("simmodels/W6_MultilevelMemory_centered.RDS")

cat("Available parameters:", paste(colnames(as_draws_df(samples_mlvl$draws())), collapse = ", "), "\n")

}

# Show summary statistics for key parameters

print(samples_mlvl$summary(c("biasM", "betaM", "biasSD", "betaSD")))## # A tibble: 4 × 10

## variable mean median sd mad q5 q95 rhat ess_bulk ess_tail

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 biasM 0.416 0.414 0.0808 0.0800 0.285 0.552 1.01 241. 484.

## 2 betaM 1.16 1.16 0.0711 0.0727 1.04 1.27 1.00 347. 833.

## 3 biasSD 0.241 0.240 0.0611 0.0599 0.141 0.343 1.00 205. 242.

## 4 betaSD 0.381 0.379 0.0465 0.0457 0.308 0.461 1.00 1155. 1764.# Extract posterior draws for analysis

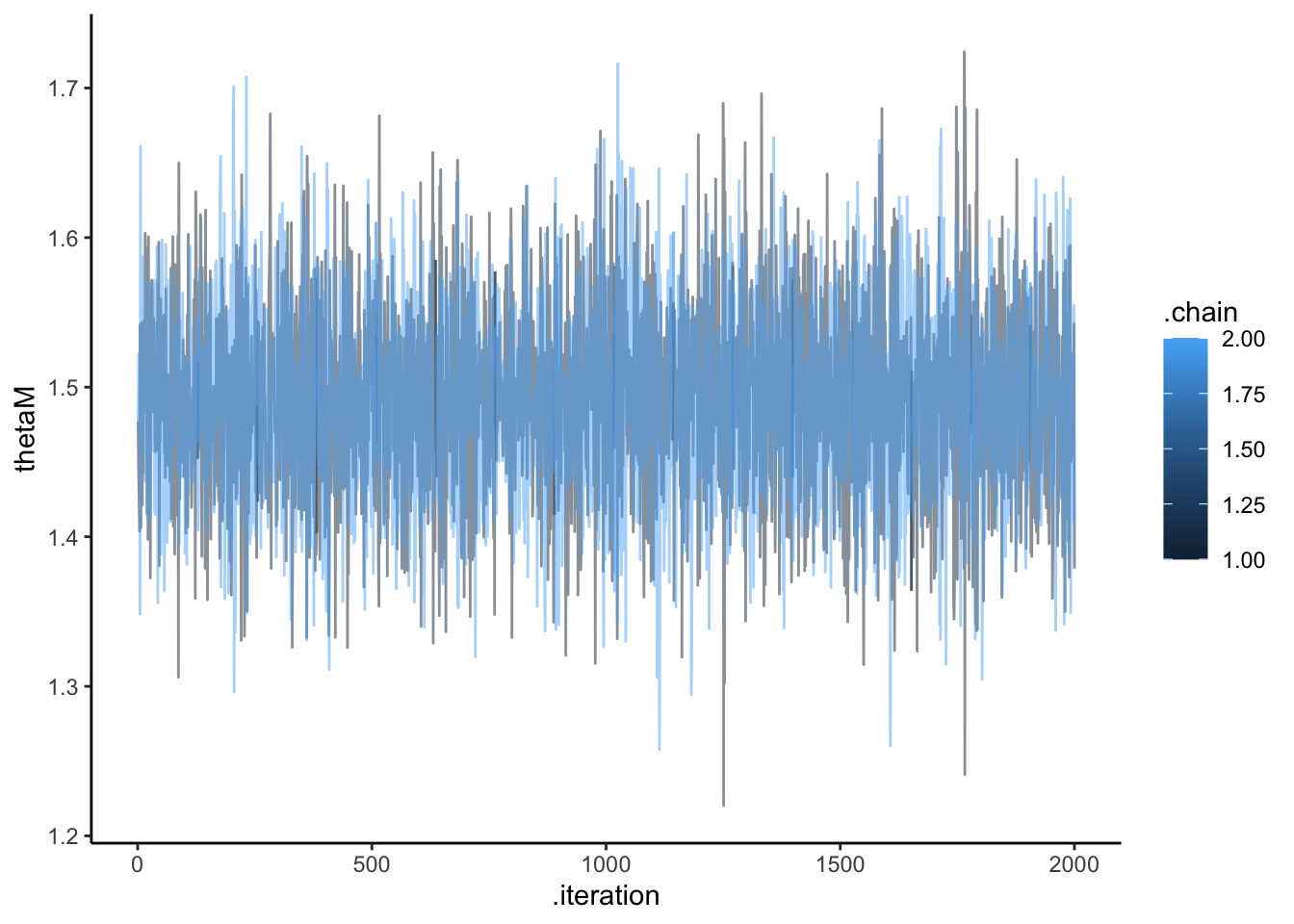

draws_df <- as_draws_df(samples_mlvl$draws())

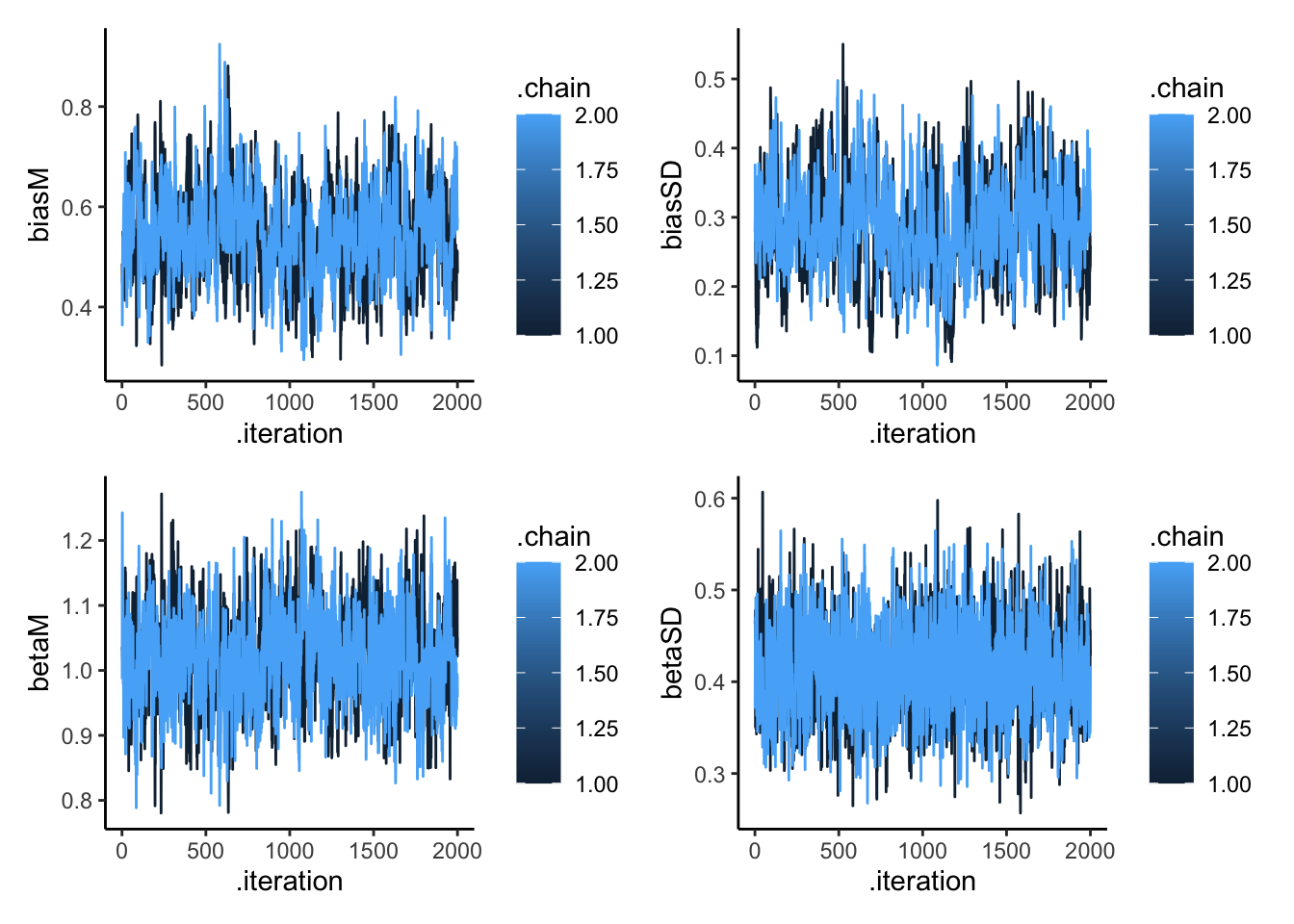

# Create trace plots to check convergence

p1 <- mcmc_trace(draws_df, pars = c("biasM", "biasSD", "betaM", "betaSD")) +

theme_classic() +

ggtitle("Trace Plots for Population Parameters")

# Show trace plots

p1

# Create prior-posterior update plots

create_density_plot <- function(param, true_value, title) {

prior_name <- paste0(param, "_prior")

ggplot(draws_df) +

geom_histogram(aes(get(param)), fill = "blue", alpha = 0.3) +

geom_histogram(aes(get(prior_name)), fill = "red", alpha = 0.3) +

geom_vline(xintercept = true_value, linetype = "dashed") +

labs(title = title,

subtitle = "Blue: posterior, Red: prior, Dashed: true value",

x = param, y = "Density") +

theme_classic()

}

# Create individual plots

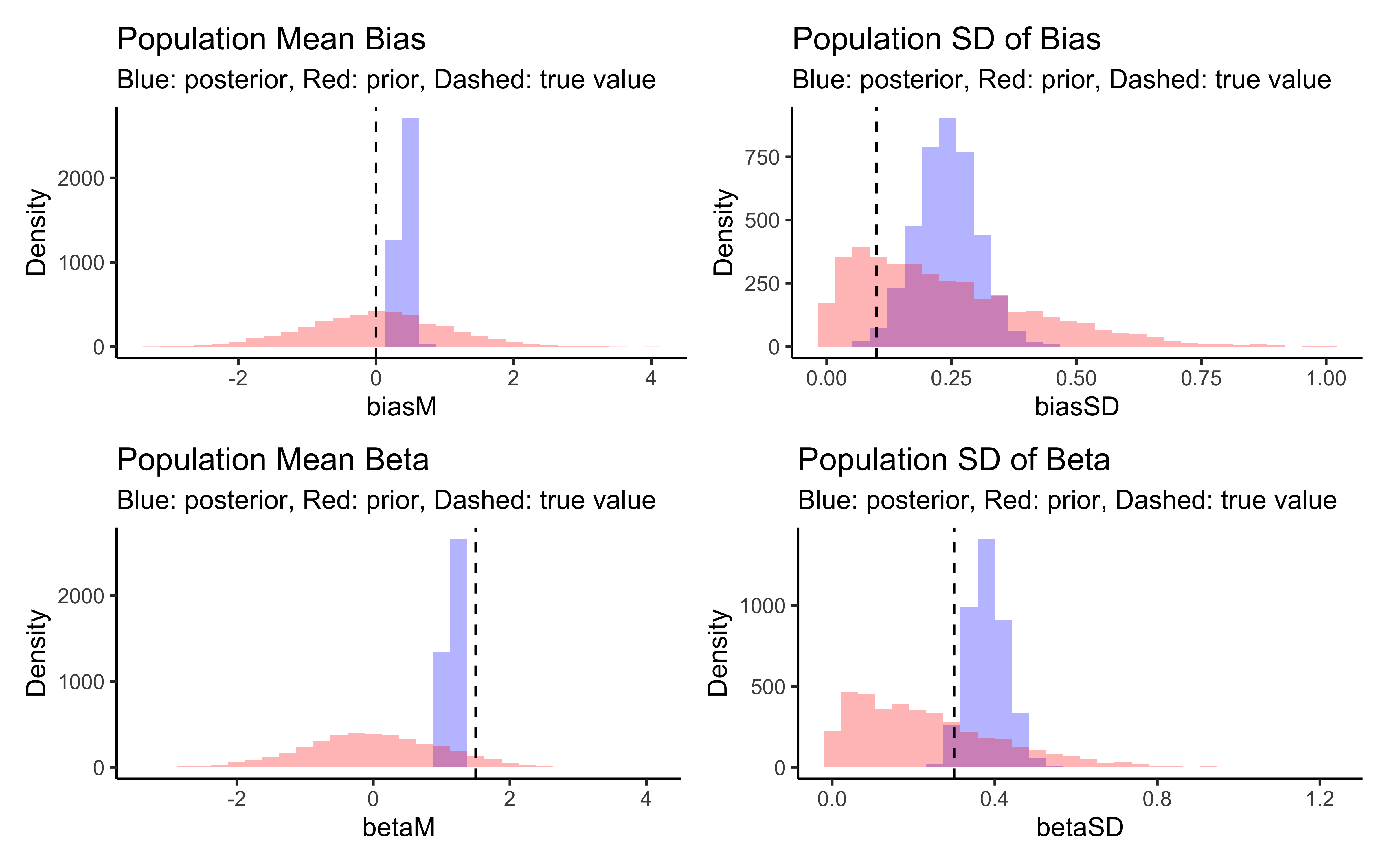

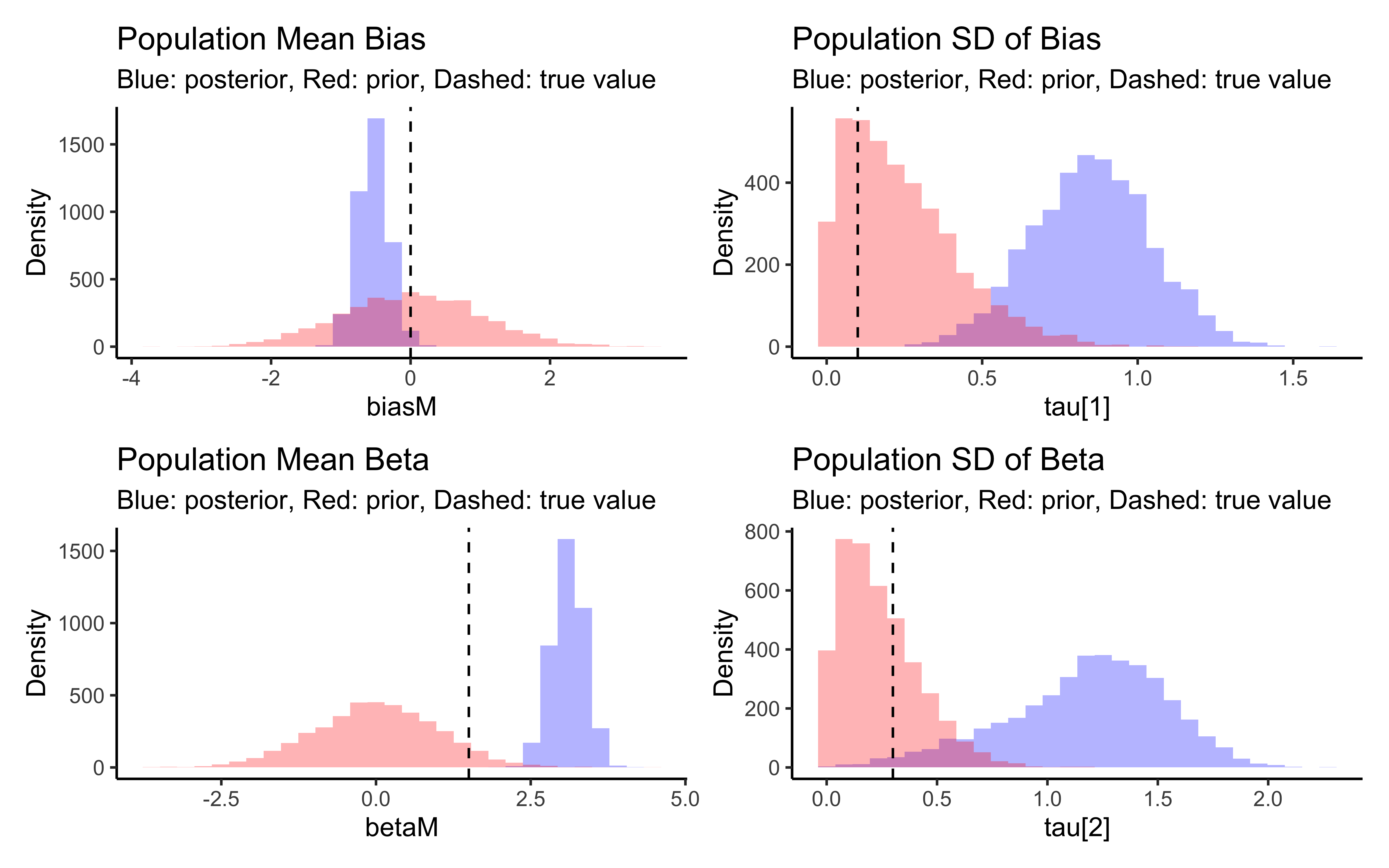

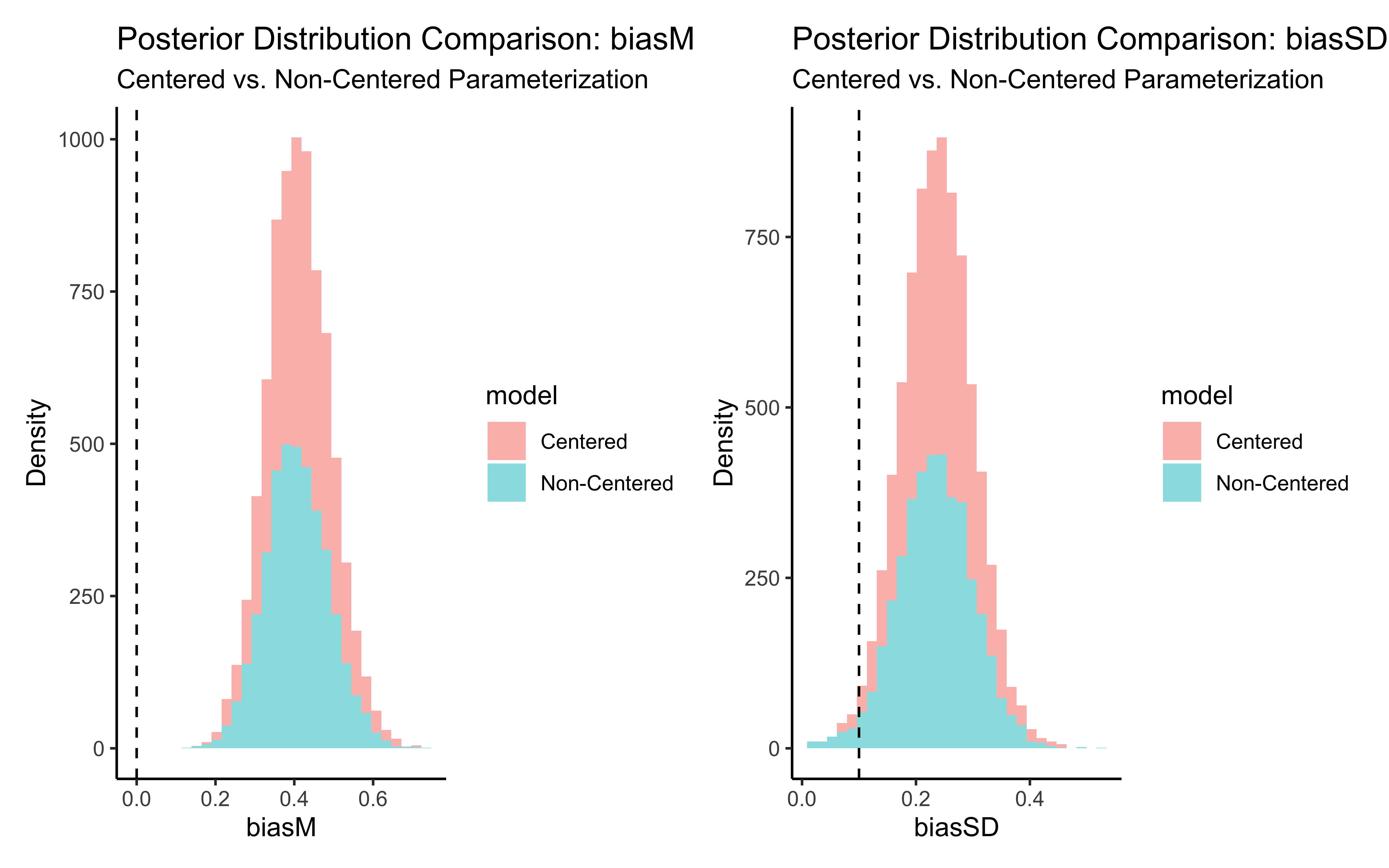

p_biasM <- create_density_plot("biasM", biasM, "Population Mean Bias")

p_biasSD <- create_density_plot("biasSD", biasSD, "Population SD of Bias")

p_betaM <- create_density_plot("betaM", betaM, "Population Mean Beta")

p_betaSD <- create_density_plot("betaSD", betaSD, "Population SD of Beta")

# Show them in a grid

(p_biasM + p_biasSD) / (p_betaM + p_betaSD)

# Show correlations

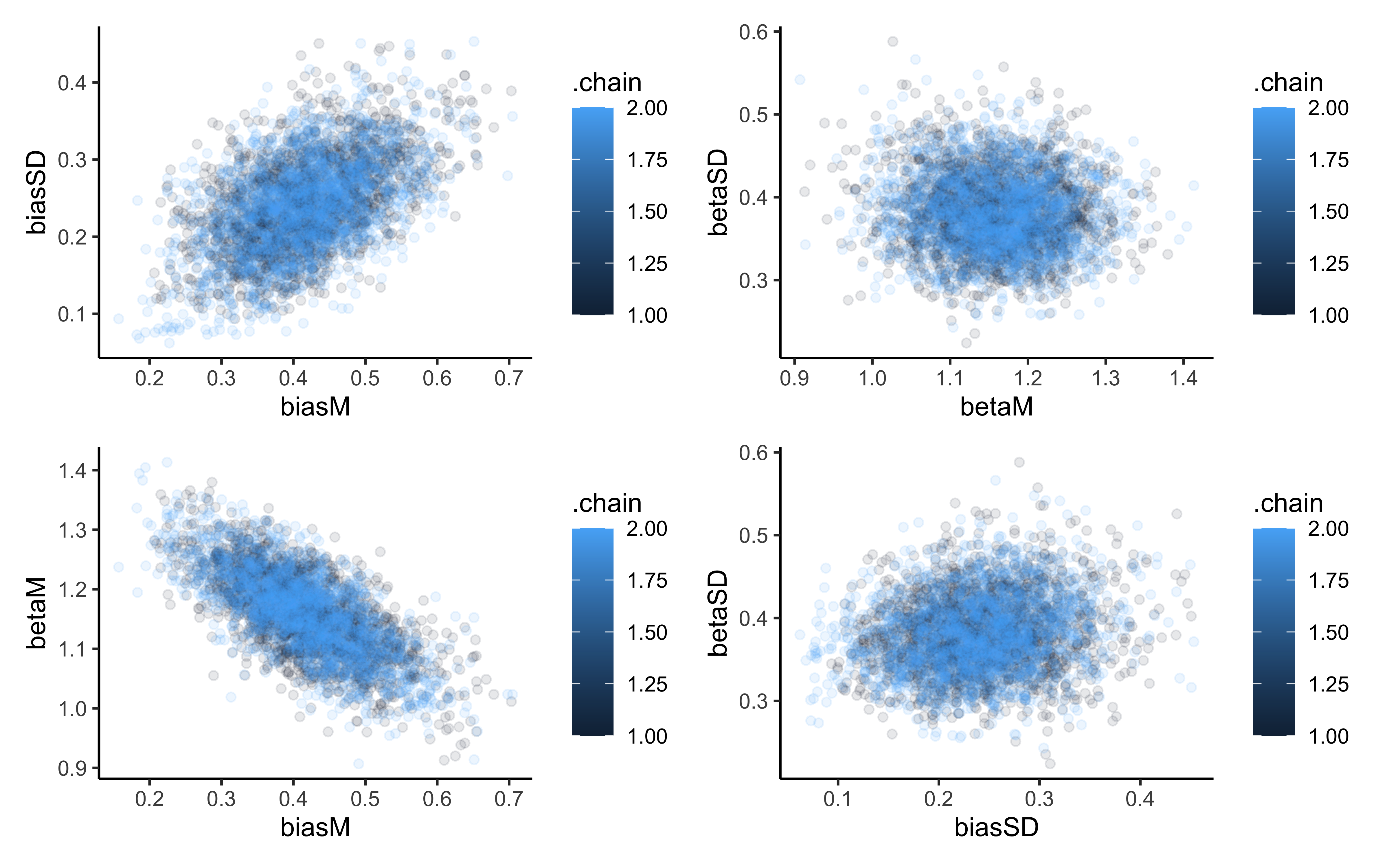

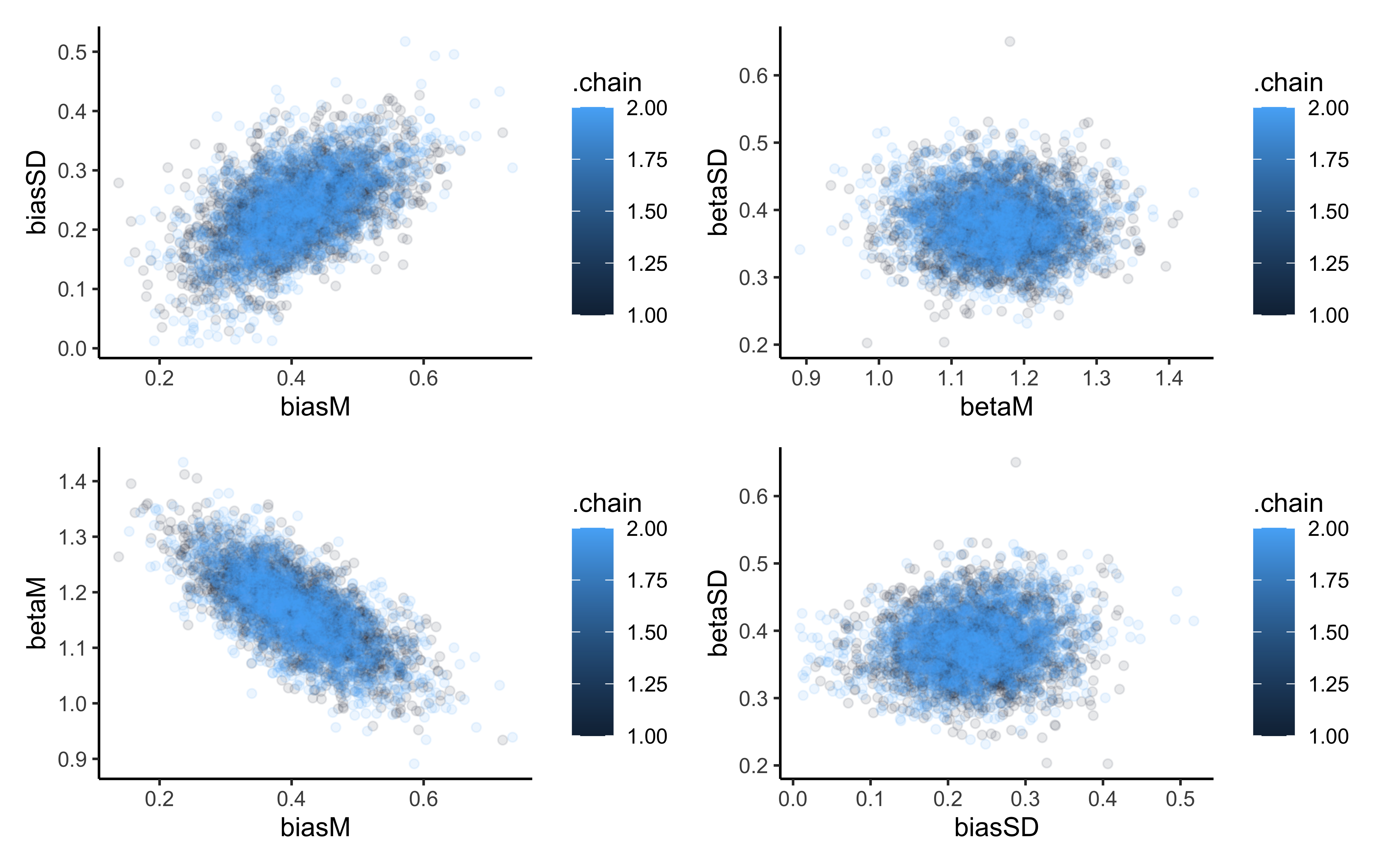

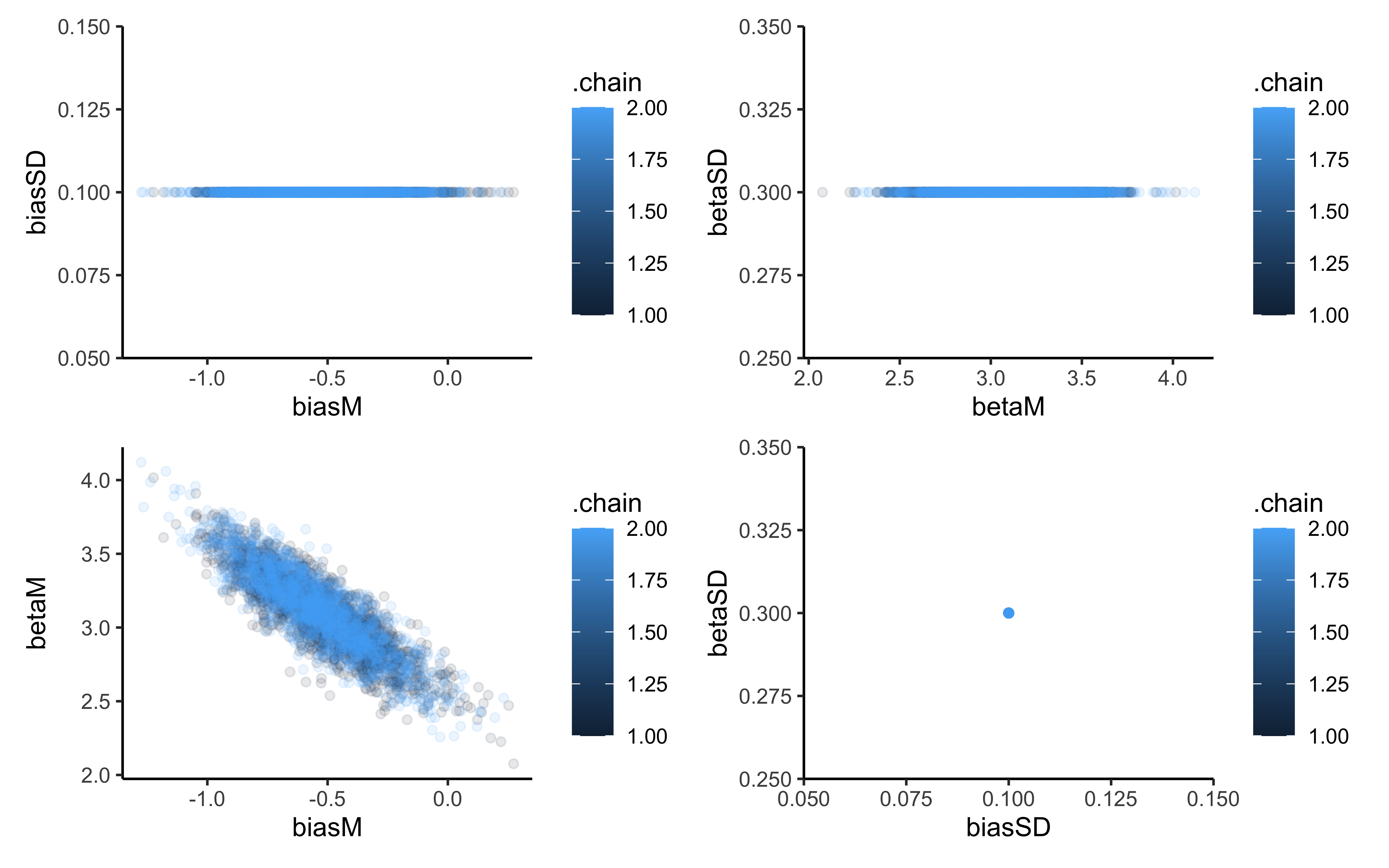

p1 <- ggplot(draws_df, aes(biasM, biasSD, group = .chain, color = .chain)) +

geom_point(alpha = 0.1) +

theme_classic()

p2 <- ggplot(draws_df, aes(betaM, betaSD, group = .chain, color = .chain)) +

geom_point(alpha = 0.1) +

theme_classic()

p3 <- ggplot(draws_df, aes(biasM, betaM, group = .chain, color = .chain)) +

geom_point(alpha = 0.1) +

theme_classic()

p4 <- ggplot(draws_df, aes(biasSD, betaSD, group = .chain, color = .chain)) +

geom_point(alpha = 0.1) +

theme_classic()

p1 + p2 + p3 + p4

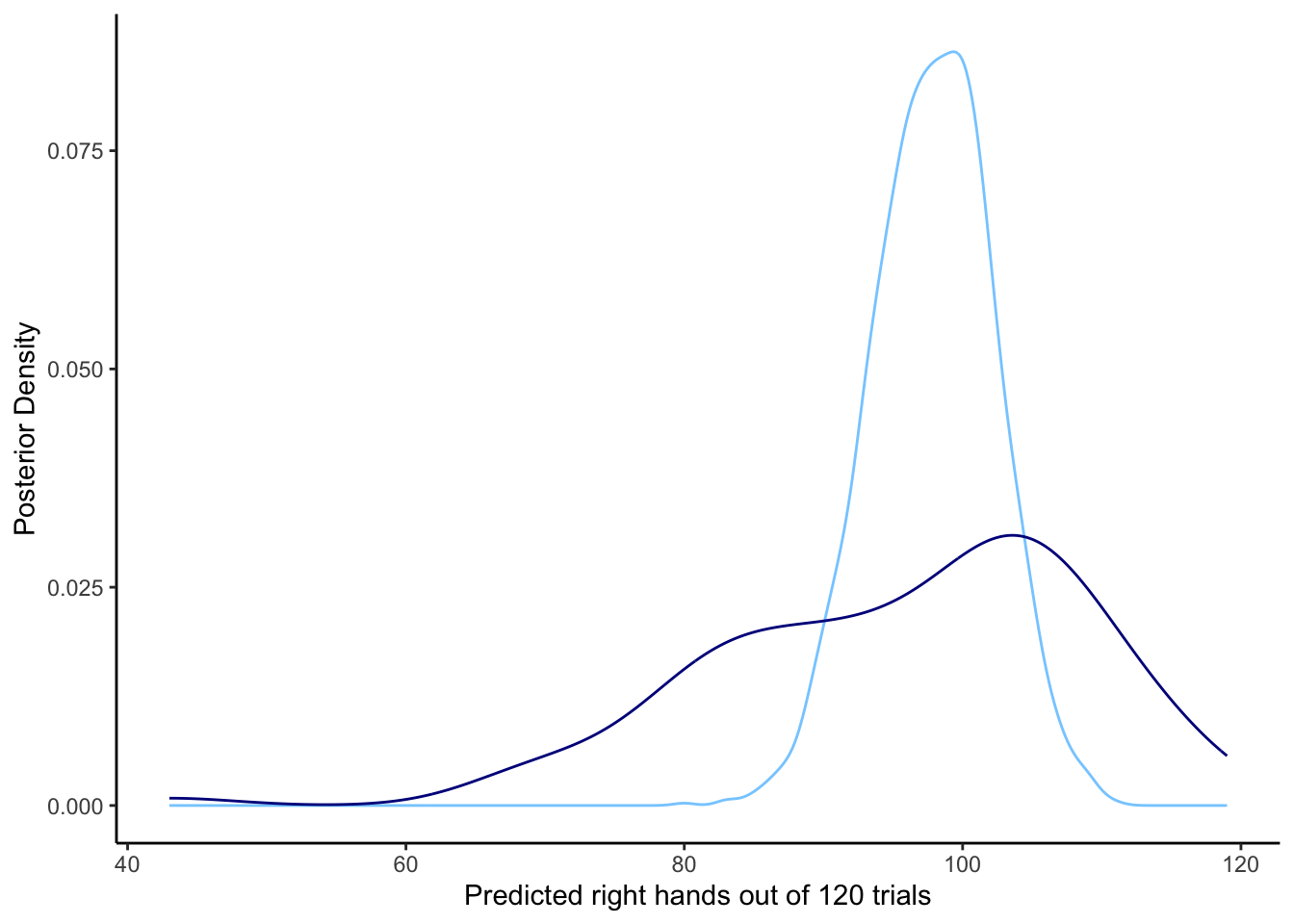

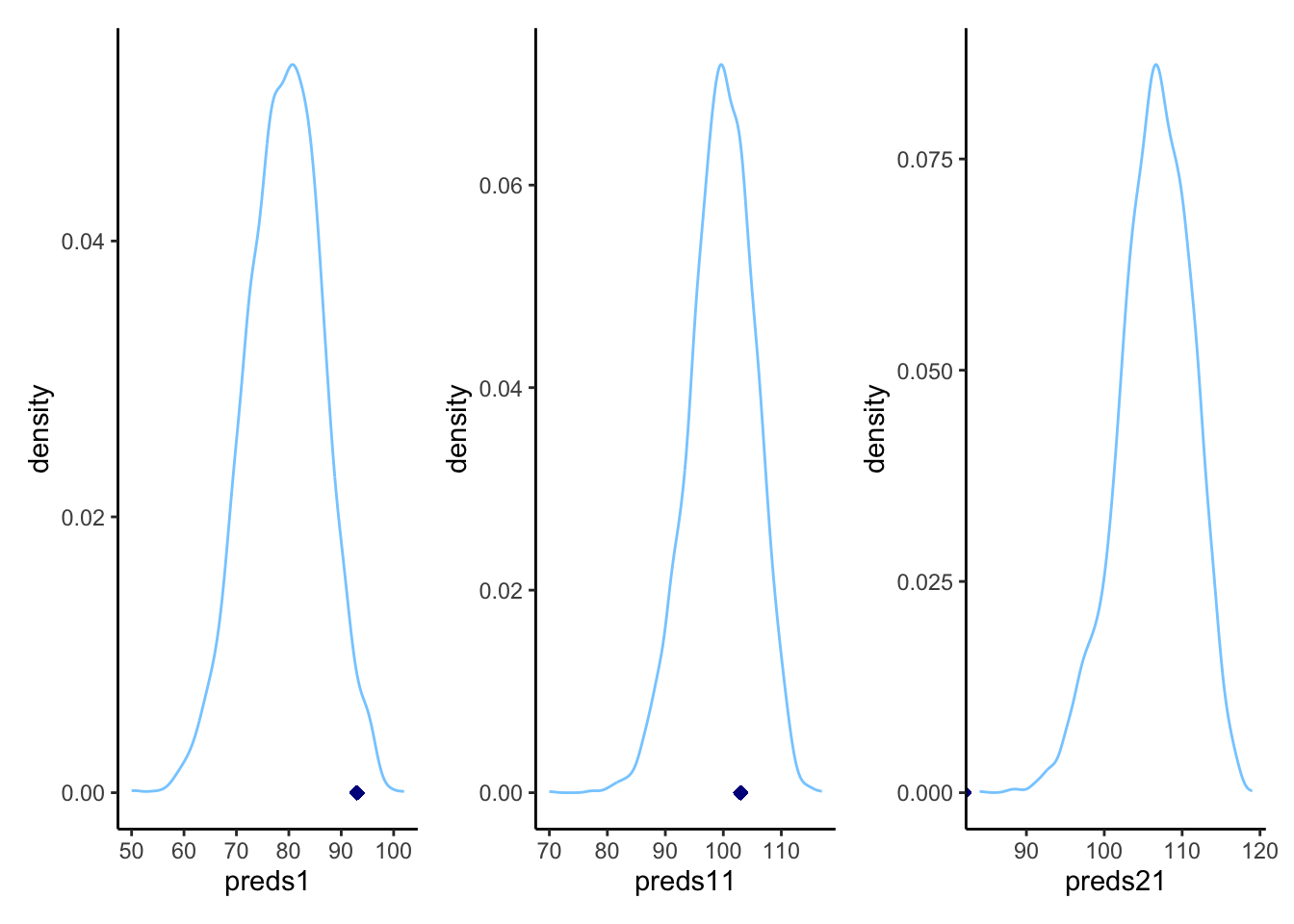

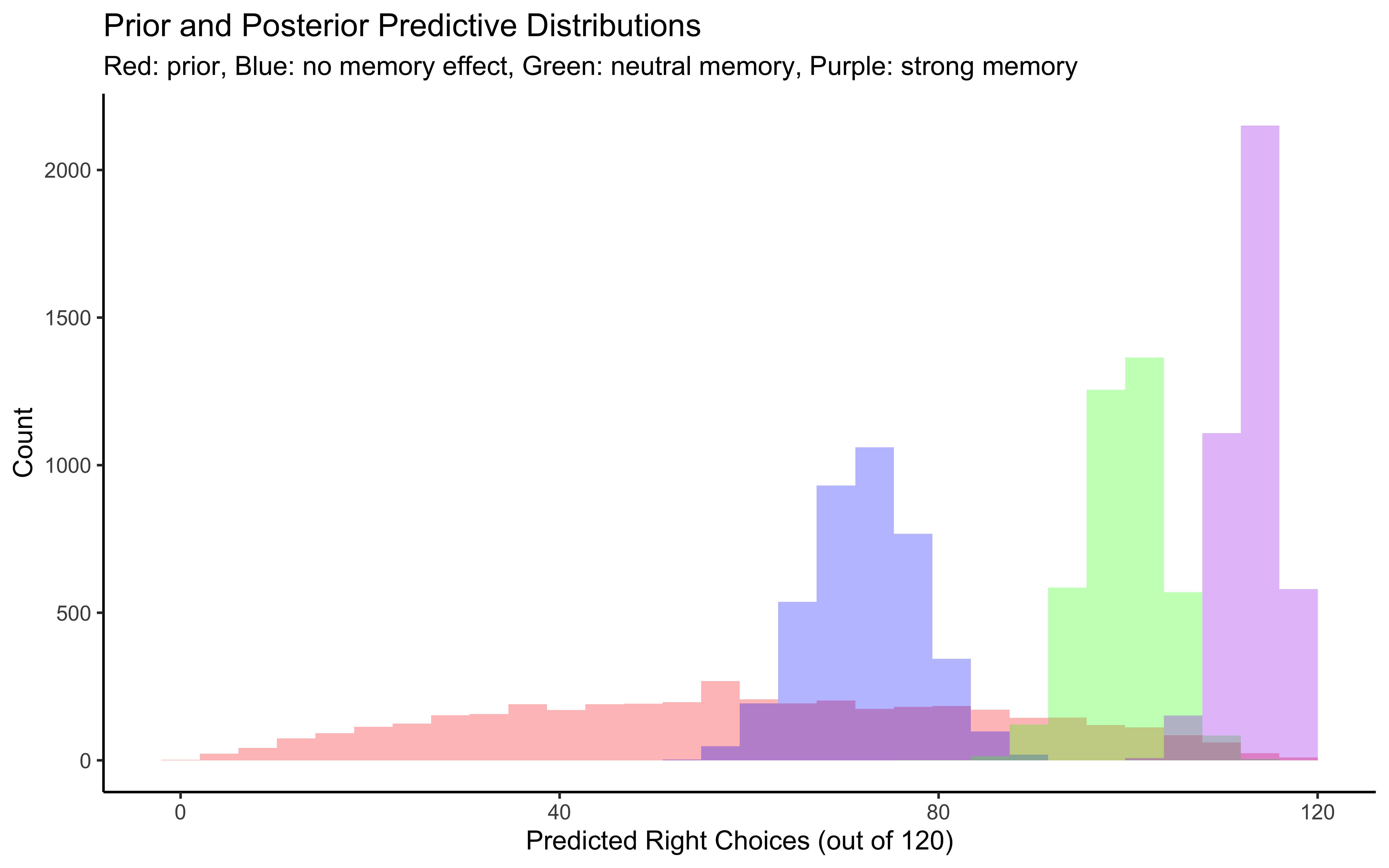

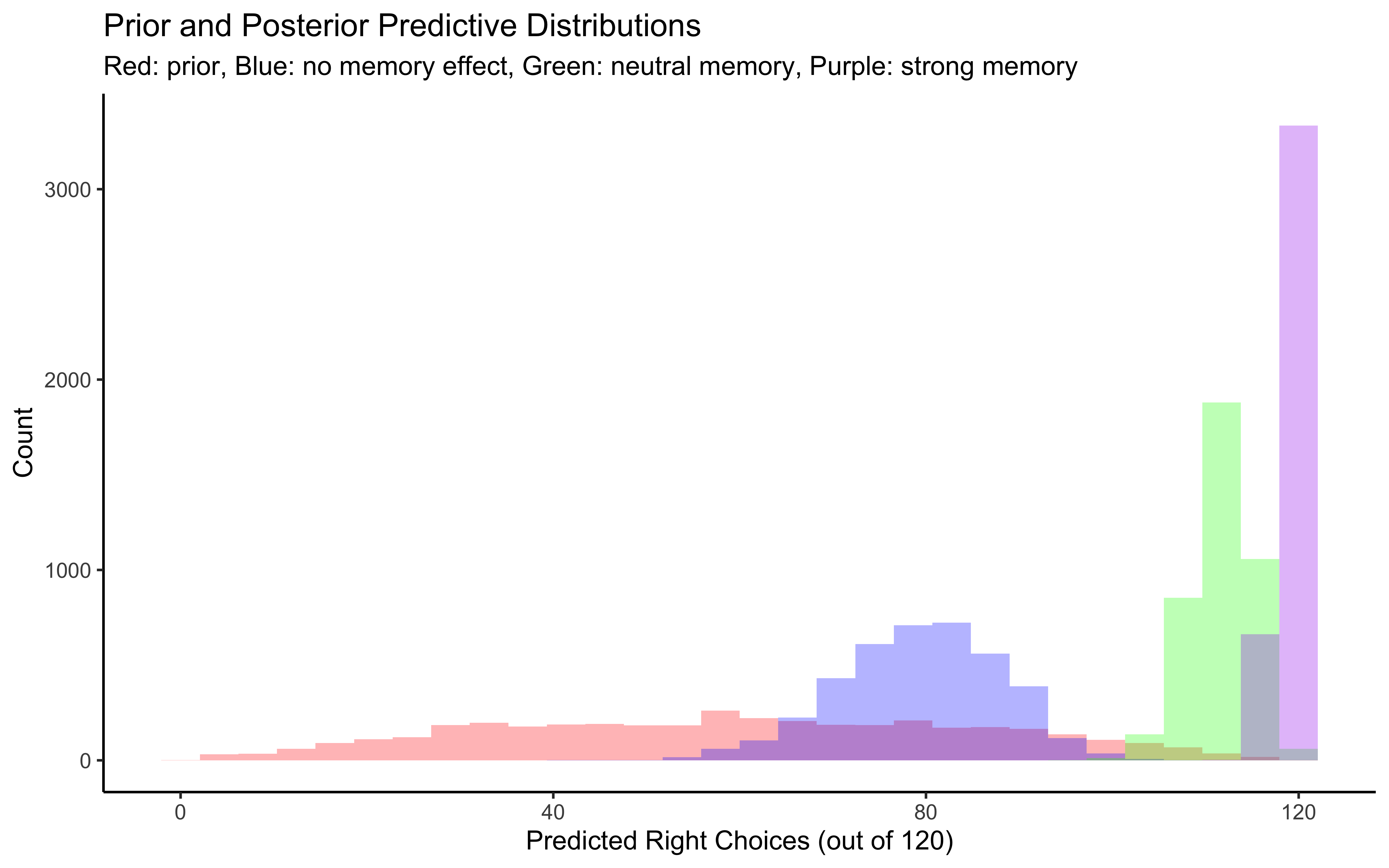

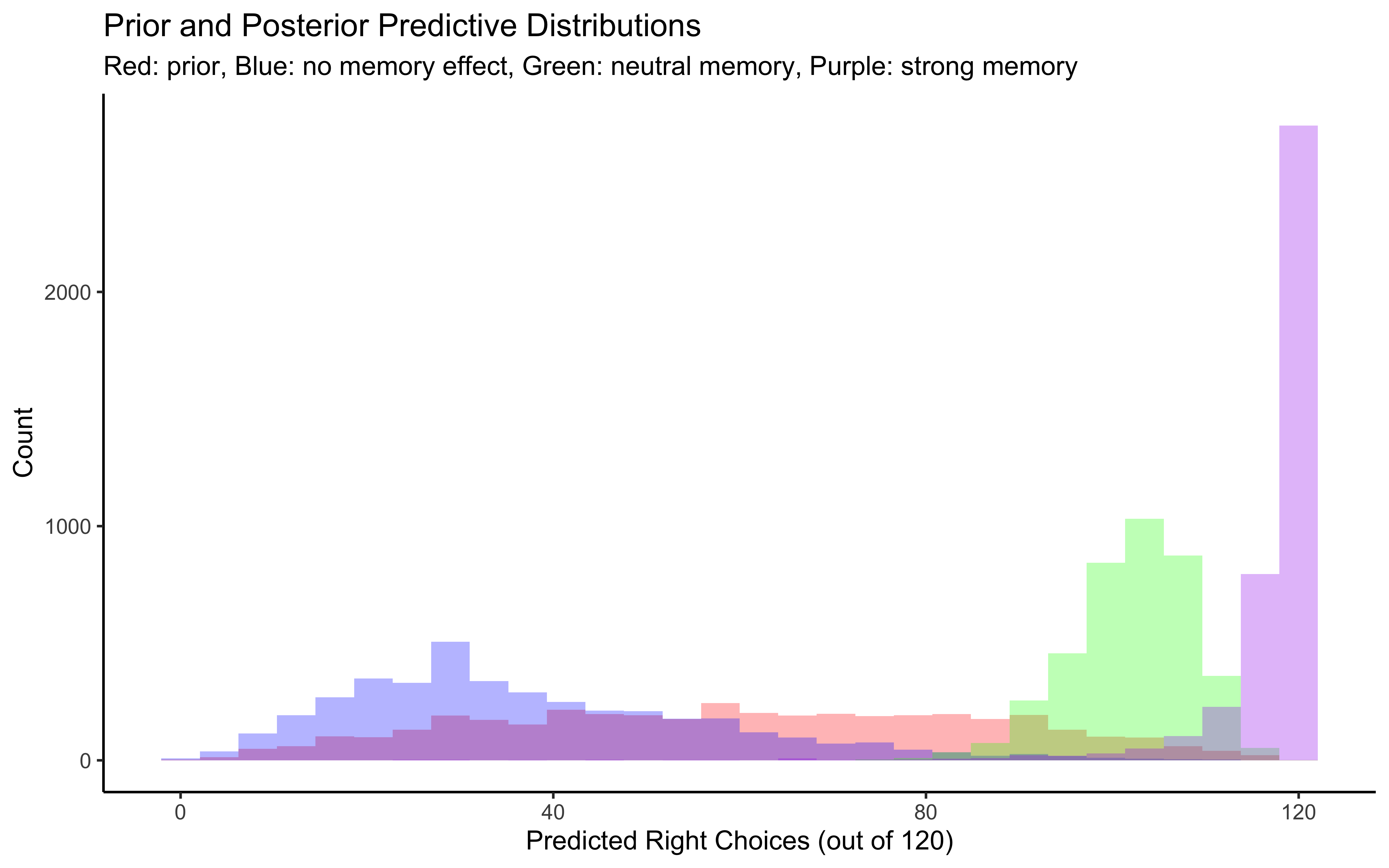

# Create posterior predictive check plots

p1 <- ggplot(draws_df) +

geom_histogram(aes(prior_preds0), fill = "red", alpha = 0.3, bins = 30) +

geom_histogram(aes(posterior_preds0), fill = "blue", alpha = 0.3, bins = 30) +

geom_histogram(aes(posterior_preds1), fill = "green", alpha = 0.3, bins = 30) +

geom_histogram(aes(posterior_preds2), fill = "purple", alpha = 0.3, bins = 30) +

labs(title = "Prior and Posterior Predictive Distributions",

subtitle = "Red: prior, Blue: logit(memory) = 0, Green: logit(memory) = 1, Purple: logit(memory) = 2",

x = "Predicted Right Choices (out of 120)",

y = "Count") +

theme_classic()

# Display plots

p1

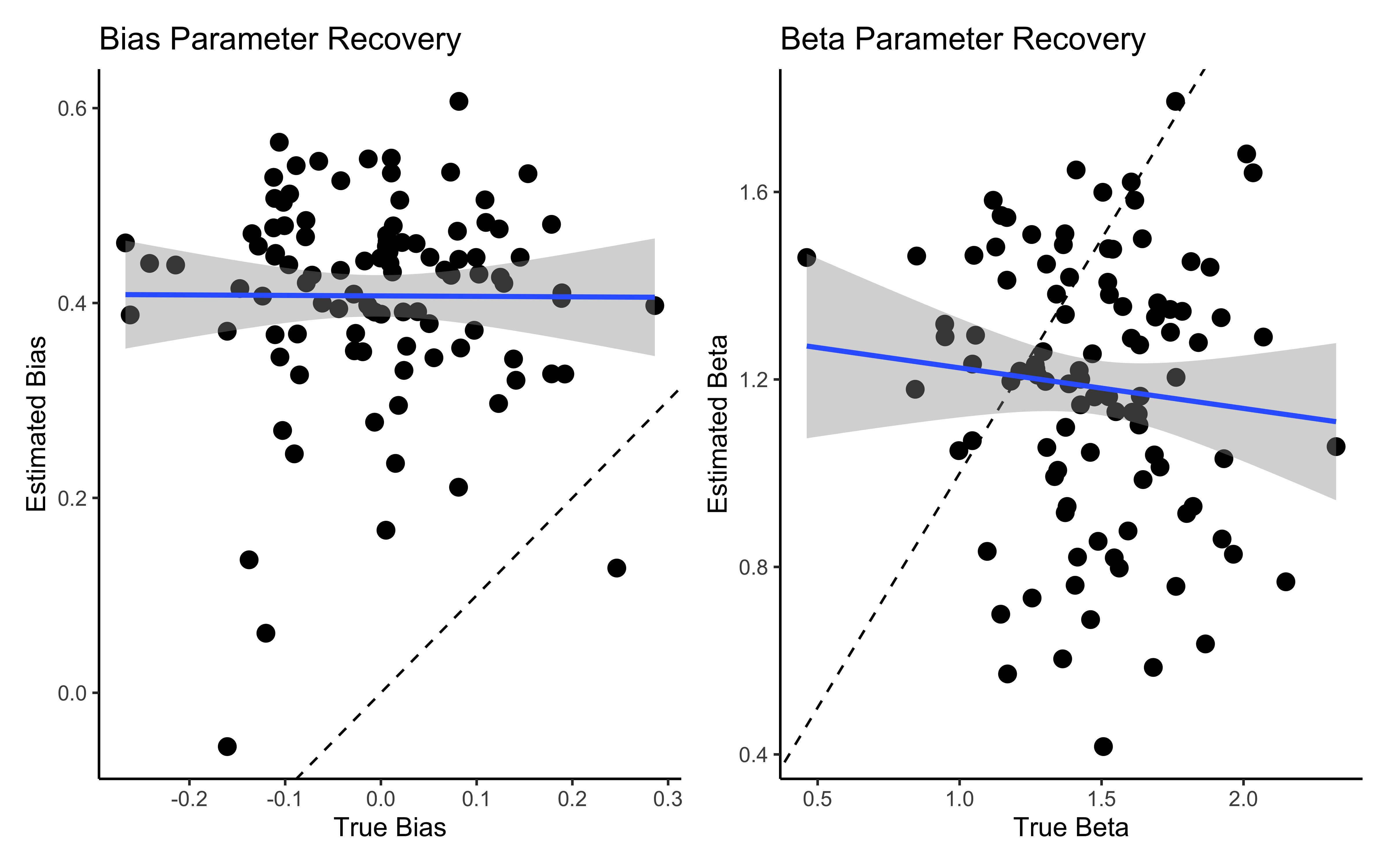

# Individual-level parameter recovery

# Extract individual parameters for a sample of agents

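# Sort the sampled IDs so that true values and estimates are paired agent by agent

sample_agents <- sort(sample(1:agents, min(agents, 100)))

sample_data <- d %>%

filter(agent %in% sample_agents, trial == 1) %>%

arrange(agent) %>%

dplyr::select(agent, bias, beta)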

# Extract posterior means for individual agents

bias_means <- c()

beta_means <- c()

for (i in sample_agents) {

bias_means[i] <- mean(draws_df[[paste0("bias[", i, "]")]])

beta_means[i] <- mean(draws_df[[paste0("beta[", i, "]")]])

}

# Create comparison data

comparison_data <- tibble(

agent = sample_agents,

true_bias = sample_data$bias,

est_bias = bias_means[sample_agents],

true_beta = sample_data$beta,

est_beta = beta_means[sample_agents]

)

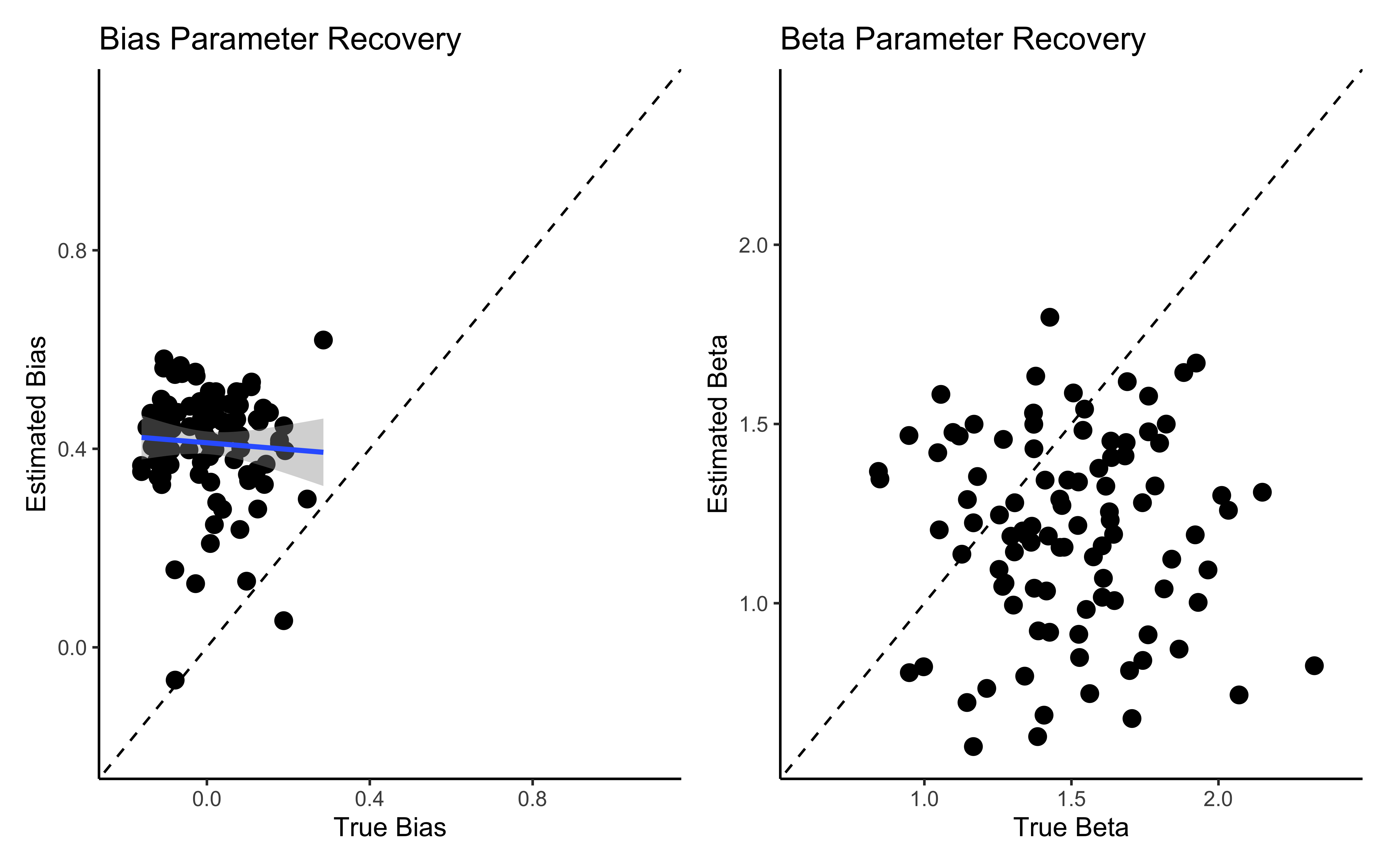

# Plot comparison

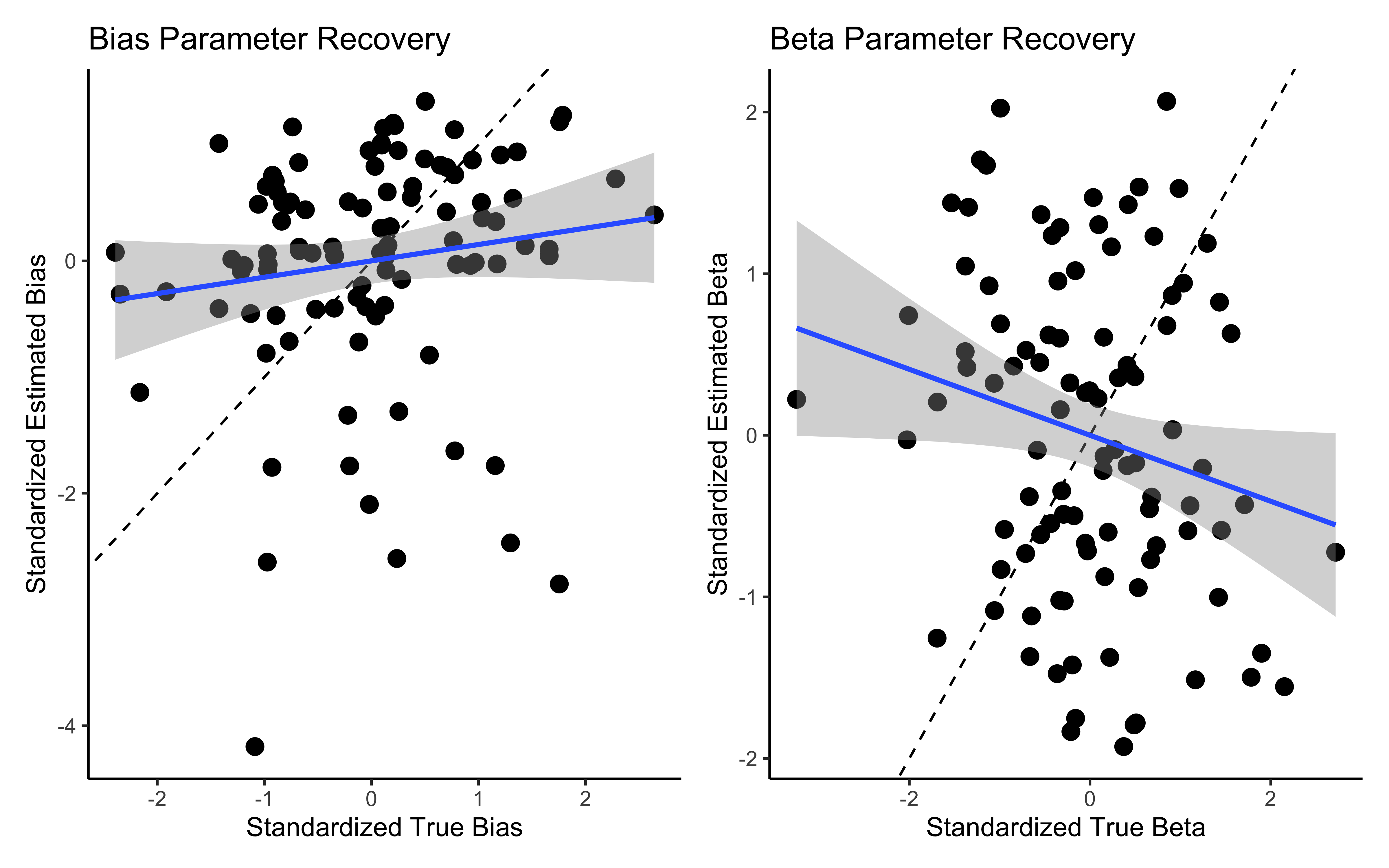

p1 <- ggplot(comparison_data, aes(true_bias, est_bias)) +

geom_point(size = 3) +

geom_abline(intercept = 0, slope = 1, linetype = "dashed") +

geom_smooth(method = lm) +

ylim(-0.2, 1.1) +

xlim(-0.2, 1.1) +

labs(title = "Bias Parameter Recovery",

x = "True Bias",

y = "Estimated Bias") +

theme_classic()

p2 <- ggplot(comparison_data, aes(true_beta, est_beta)) +

geom_point(size = 3) +

geom_abline(intercept = 0, slope = 1, linetype = "dashed") +

ylim(0.6, 2.4) +

xlim(0.6, 2.4) +

labs(title = "Beta Parameter Recovery",

x = "True Beta",

y = "Estimated Beta") +

theme_classic()

# Display parameter recovery plots

p1 + p2

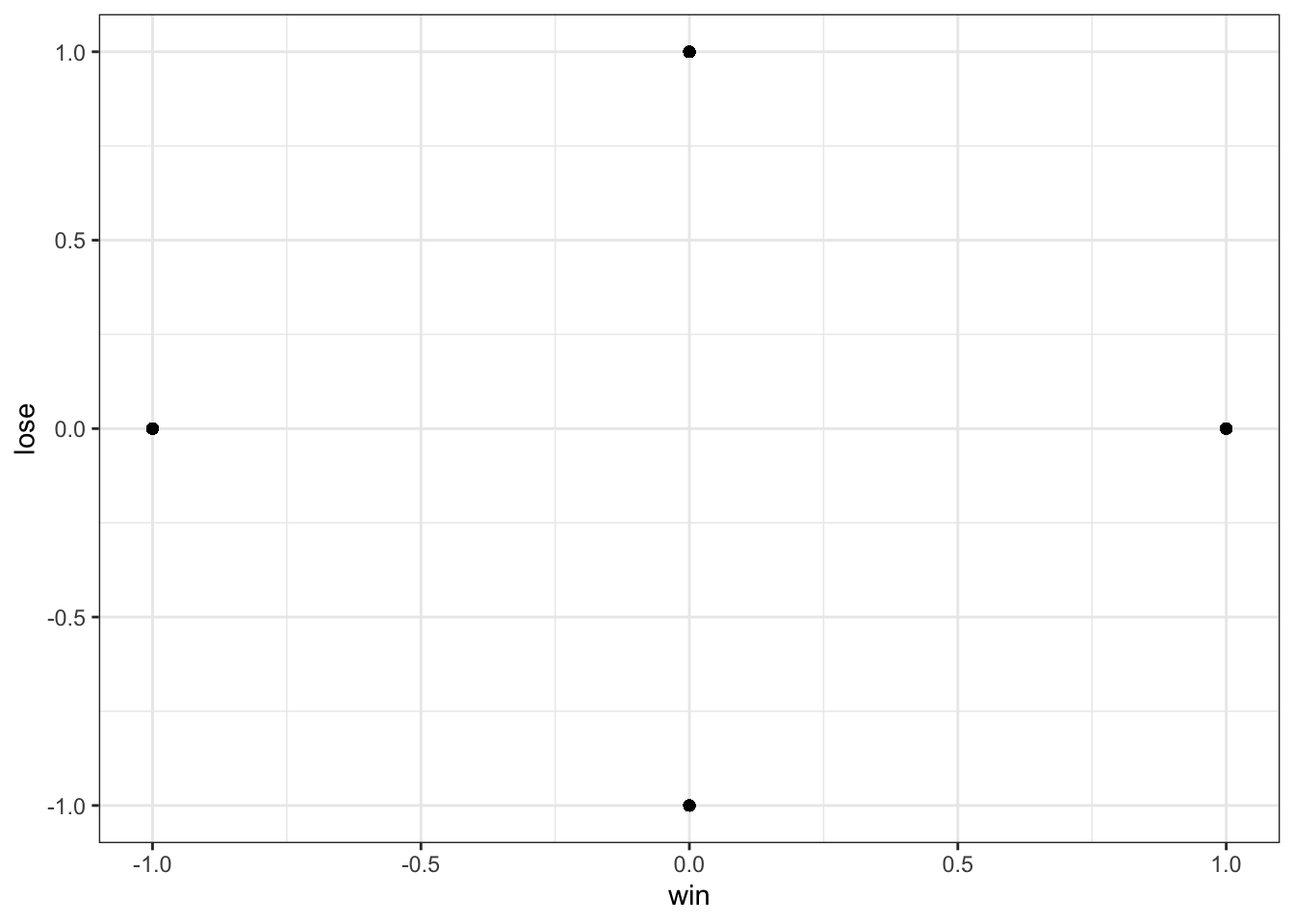

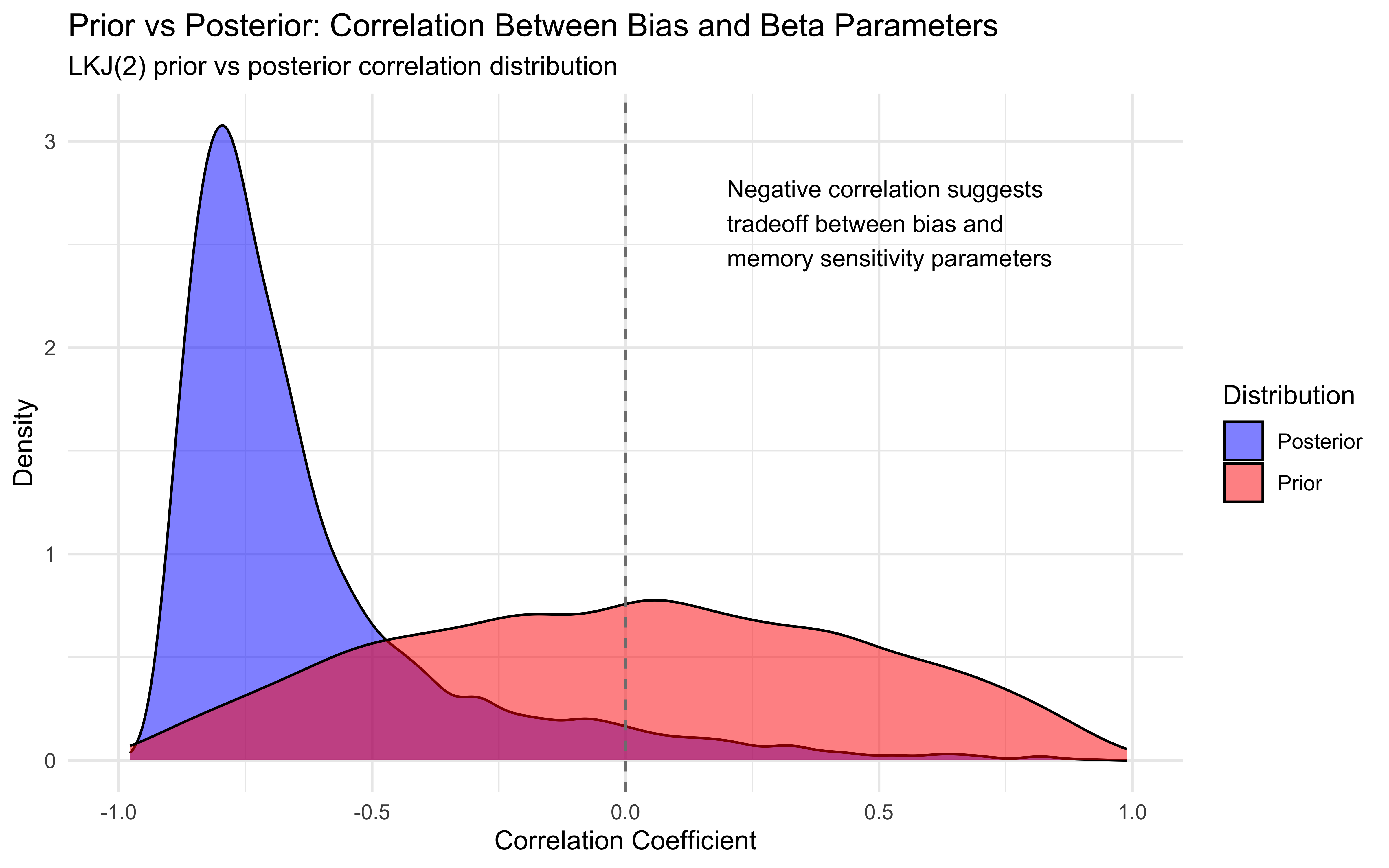

7.12.2 Diagnosing the issue with bias and beta

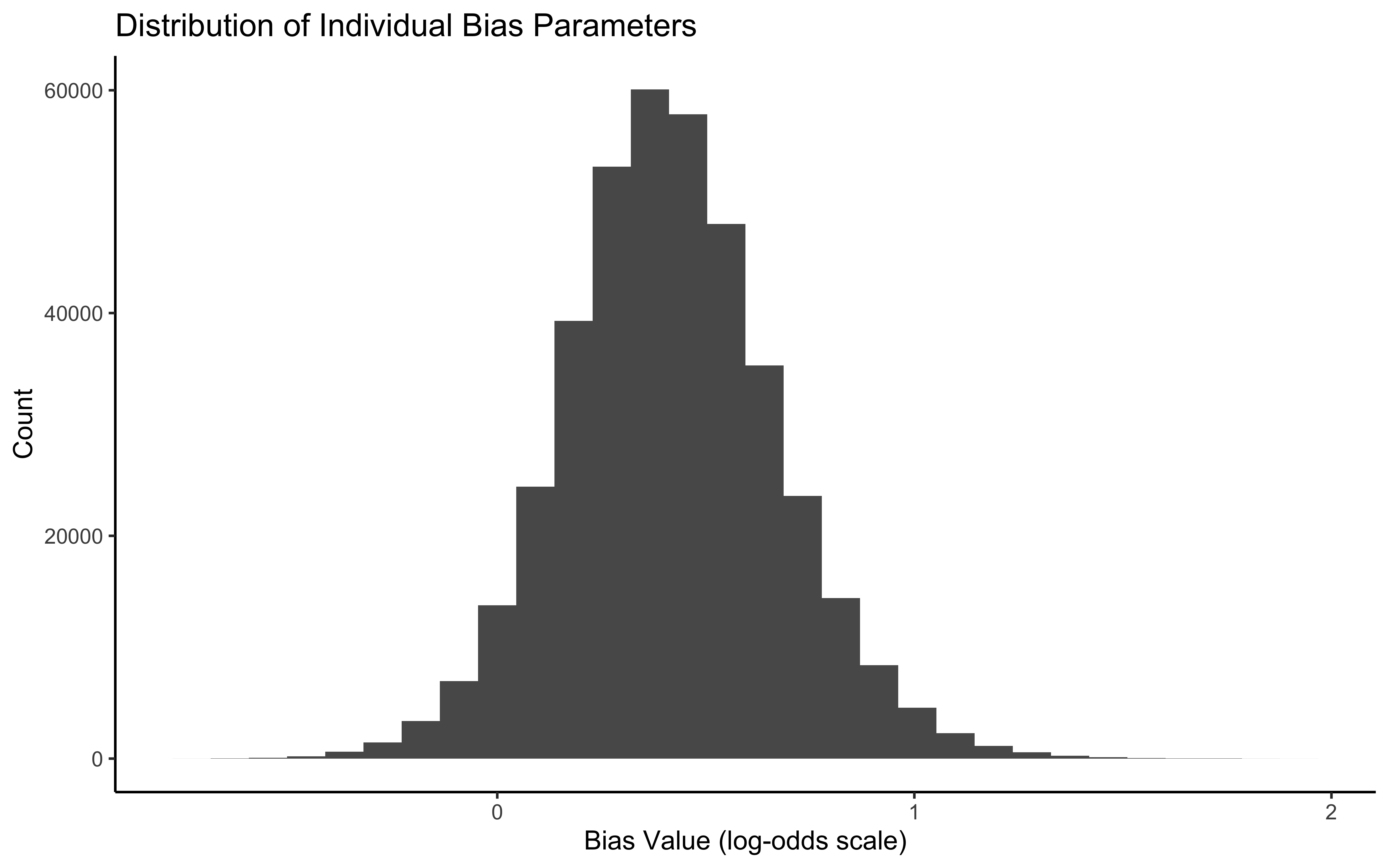

# First, let's examine the individual bias parameters distribution

bias_params <- draws_df %>%

dplyr::select(starts_with("bias[")) %>%

pivot_longer(everything(), names_to = "parameter", values_to = "value")

# Plot distribution of all individual bias parameters

ggplot(bias_params, aes(x = value)) +

geom_histogram(bins = 30) +

labs(title = "Distribution of Individual Bias Parameters",

x = "Bias Value (log-odds scale)",

y = "Count") +

theme_classic()

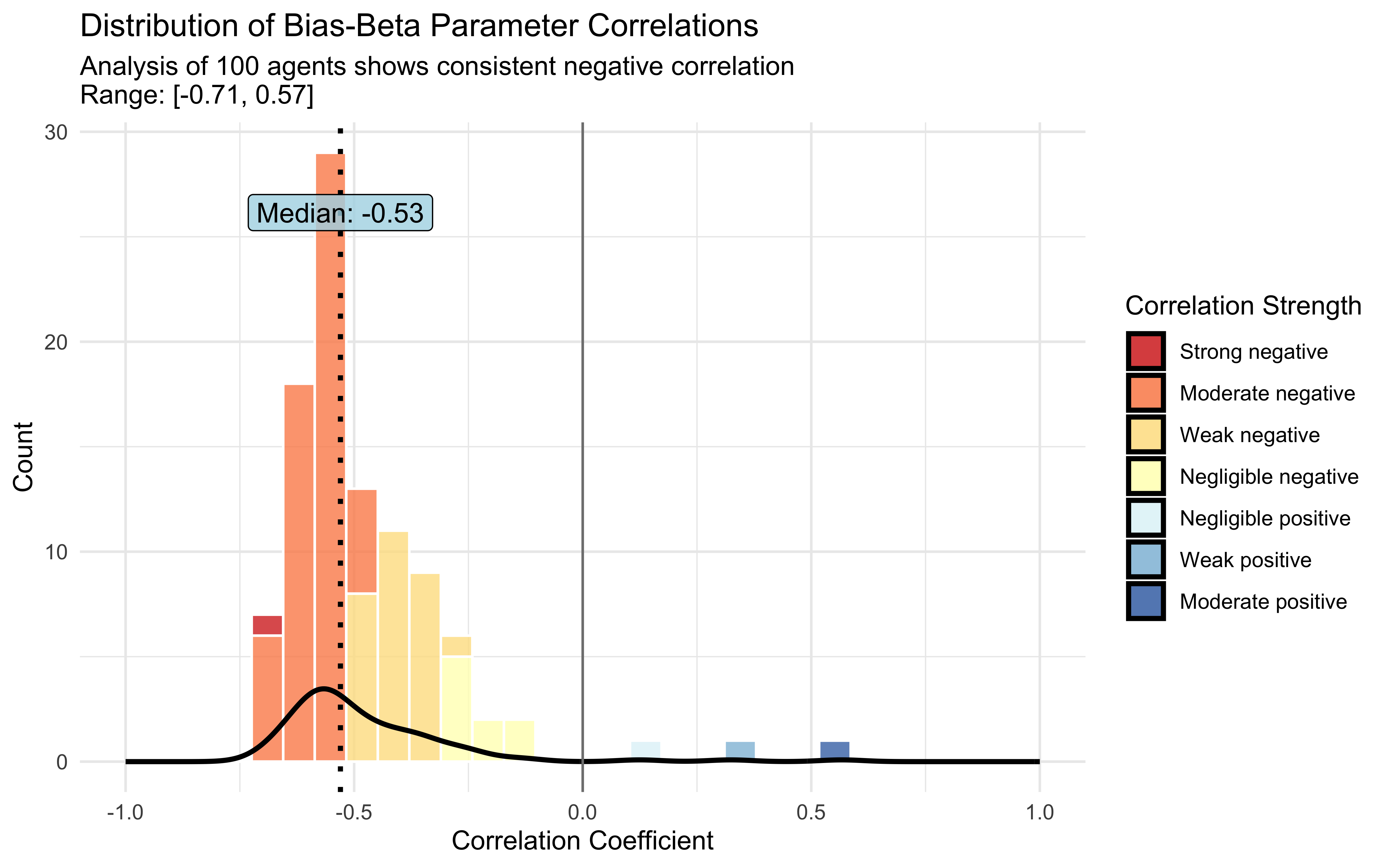

# Check for correlation between bias and beta parameters

# within each agent's posterior draws (computed for every agent)

cors <- NULL

for (i in seq_len(agents)) {

cors[i] <- (cor(draws_df[[paste0("bias[", i, "]")]],

draws_df[[paste0("beta[", i, "]")]]))

}

corr_data <- tibble(

agent = seq_along(cors),

correlation = cors

) %>%

filter(!is.na(correlation))

# Calculate summary statistics

mean_cor <- mean(corr_data$correlation)

median_cor <- median(corr_data$correlation)

min_cor <- min(corr_data$correlation)

max_cor <- max(corr_data$correlation)

# Create correlation strength categories

corr_data <- corr_data %>%

mutate(

strength = case_when(

correlation <= -0.7 ~ "Strong negative",

correlation <= -0.5 ~ "Moderate negative",

correlation <= -0.3 ~ "Weak negative",

correlation < 0 ~ "Negligible negative",

correlation >= 0 & correlation < 0.3 ~ "Negligible positive",

correlation >= 0.3 & correlation < 0.5 ~ "Weak positive",

correlation >= 0.5 & correlation < 0.7 ~ "Moderate positive",

TRUE ~ "Strong positive"

),

strength = factor(strength, levels = c(

"Strong negative", "Moderate negative", "Weak negative",

"Negligible negative", "Negligible positive",

"Weak positive", "Moderate positive", "Strong positive"

))

)

# Create the plot

ggplot(corr_data, aes(x = correlation)) +

# Histogram colored by correlation strength

geom_histogram(aes(fill = strength), bins = 30, alpha = 0.8, color = "white") +

# Density curve

geom_density(color = "black", linewidth = 1, alpha = 0.3) +

# Reference lines

geom_vline(xintercept = median_cor, linewidth = 1, linetype = "dotted", color = "black") +

geom_vline(xintercept = 0, linewidth = 0.5, color = "gray50") +

# Annotations

annotate("label", x = median_cor, y = Inf, label = paste("Median:", round(median_cor, 2)),

vjust = 3, size = 4, fill = "lightblue", alpha = 0.8) +

# Colors for correlation strength

scale_fill_manual(values = c(

"Strong negative" = "#d73027",

"Moderate negative" = "#fc8d59",

"Weak negative" = "#fee090",

"Negligible negative" = "#ffffbf",

"Negligible positive" = "#e0f3f8",

"Weak positive" = "#91bfdb",

"Moderate positive" = "#4575b4",

"Strong positive" = "#313695"

)) +

# Titles and labels

labs(

title = "Distribution of Bias-Beta Parameter Correlations",

subtitle = paste0(

"Analysis of ", nrow(corr_data), " agents shows consistent negative correlation\n",

"Range: [", round(min_cor, 2), ", ", round(max_cor, 2), "]"

),

x = "Correlation Coefficient",

y = "Count",

fill = "Correlation Strength"

) +

# Set axis limits and theme

xlim(-1, 1) +

theme_minimal()
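One common source of a negative correlation between an intercept and a slope is a predictor whose mean lies away from zero. The sketch below illustrates this with an ordinary maximum-likelihood logistic regression as a stand-in for the posterior. It is a possible explanation under stated assumptions, not a confirmed diagnosis of the model above: the predictor `x` is a hypothetical stand-in for logit(memory), and the generating values 0.4 and 1.2 are arbitrary.

```r
set.seed(42)   # arbitrary seed
n <- 2000

# Hypothetical predictor standing in for logit(memory), with mean well above zero
x <- rnorm(n, mean = 1, sd = 0.5)

# Simulated choices with assumed bias = 0.4 and beta = 1.2
y <- rbinom(n, 1, plogis(0.4 + 1.2 * x))

# Same model fitted with the raw and with the mean-centered predictor
fit_raw      <- glm(y ~ x, family = binomial)
fit_centered <- glm(y ~ I(x - mean(x)), family = binomial)

# Correlation between the intercept and slope estimates
cor_raw      <- cov2cor(vcov(fit_raw))[1, 2]
cor_centered <- cov2cor(vcov(fit_centered))[1, 2]

c(raw = cor_raw, centered = cor_centered)
```

With the raw predictor, a higher slope can be compensated by a lower intercept, so the two estimates trade off. Centering the predictor typically brings the correlation much closer to zero, which suggests checking the average value of logit(memory) in the data when interpreting the bias and beta correlations.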

7.12.3 Multilevel memory with non-centered parameterization

When implementing multilevel models, we sometimes encounter sampling efficiency issues, especially when group-level variance parameters are small or data are limited. In these cases the plausible range of the individual parameters depends strongly on the group-level SD, which creates a “funnel” in the posterior distribution that is difficult for the sampler to navigate efficiently.

Non-centered parameterization addresses this by reparameterizing individual parameters as standardized deviations from the group mean:

Instead of: θᵢ ~ Normal(μ, σ)

We use: θᵢ = μ + σ · zᵢ, where zᵢ ~ Normal(0, 1)

This is conceptually similar to when we z-score variables in regression models.

This approach separates the sampling of the standardized individual parameters (zᵢ) from the group-level parameters (μ and σ), improving sampling efficiency. The transformation between these parameterizations is invertible, so the models are equivalent, but the non-centered version often performs better computationally.

In our code, we implement this by:

- Sampling standardized individual parameters (biasID_z, betaID_z)

- Multiplying by group SD and adding group mean to get individual parameters
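The equivalence of the two parameterizations can be checked by simulation. The sketch below draws from both forms and compares them. The values of `mu` and `sigma` are hypothetical, and the example only demonstrates that the two forms describe the same distribution; it says nothing about sampler efficiency.

```r
set.seed(7)      # arbitrary seed
mu      <- 0.4   # hypothetical group mean
sigma   <- 0.25  # hypothetical group SD
n_draws <- 1e5

# Centered form: draw theta directly from Normal(mu, sigma)
theta_centered <- rnorm(n_draws, mu, sigma)

# Non-centered form: draw a standardized deviation, then shift and scale it
z <- rnorm(n_draws)
theta_noncentered <- mu + sigma * z

# Both forms give (approximately) the same mean and SD
rbind(
  centered     = c(mean = mean(theta_centered),    sd = sd(theta_centered)),
  non_centered = c(mean = mean(theta_noncentered), sd = sd(theta_noncentered))
)

# The transformation is invertible: z can be recovered from theta
z_back <- (theta_noncentered - mu) / sigma
all.equal(z, z_back)
```

In the Stan model the same shift and scale appears as `biasID = biasM + biasID_z * biasSD`, with the standard normal prior placed on `biasID_z` instead of on `biasID`.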

# Stan model for multilevel memory agent with non-centered parameterization

stan_model_nc <- "

// Multilevel Memory Agent Model (Non-Centered Parameterization)

//

functions{

real normal_lb_rng(real mu, real sigma, real lb) {

real p = normal_cdf(lb | mu, sigma); // cdf for bounds

real u = uniform_rng(p, 1);

return (sigma * inv_Phi(u)) + mu; // inverse cdf for value

}

}

// The input data for the model

data {

int<lower = 1> trials; // Number of trials per agent

int<lower = 1> agents; // Number of agents

array[trials, agents] int h; // Memory agent choices

array[trials, agents] int other; // Opponent (random agent) choices

}

// Parameters to be estimated

parameters {

// Population-level parameters

real biasM; // Mean of baseline bias

real<lower = 0> biasSD; // SD of baseline bias

real betaM; // Mean of memory sensitivity

real<lower = 0> betaSD; // SD of memory sensitivity

// Standardized individual parameters (non-centered parameterization)

vector[agents] biasID_z; // Standardized individual bias parameters

vector[agents] betaID_z; // Standardized individual beta parameters

}

// Transformed parameters (derived quantities)

transformed parameters {

// Memory state for each agent and trial

array[trials, agents] real memory;

// Individual parameters (constructed from non-centered parameterization)

vector[agents] biasID;

vector[agents] betaID;

// Calculate memory states based on opponent's choices

for (agent in 1:agents){

for (trial in 1:trials){

// Initial memory state (no prior information)

if (trial == 1) {

memory[trial, agent] = 0.5;

}

// Update memory based on opponent's choices

if (trial < trials){

// Simple averaging memory update

memory[trial + 1, agent] = memory[trial, agent] +

((other[trial, agent] - memory[trial, agent]) / trial);

// Handle edge cases to avoid numerical issues

if (memory[trial + 1, agent] == 0){memory[trial + 1, agent] = 0.01;}

if (memory[trial + 1, agent] == 1){memory[trial + 1, agent] = 0.99;}

}

}

}

// Construct individual parameters from non-centered parameterization

biasID = biasM + biasID_z * biasSD;

betaID = betaM + betaID_z * betaSD;

}

// Model definition

model {

// Population-level priors

target += normal_lpdf(biasM | 0, 1);

target += normal_lpdf(biasSD | 0, .3) - normal_lccdf(0 | 0, .3); // Half-normal

target += normal_lpdf(betaM | 0, .3);

target += normal_lpdf(betaSD | 0, .3) - normal_lccdf(0 | 0, .3); // Half-normal

// Standardized individual parameters (non-centered parameterization)

target += std_normal_lpdf(biasID_z); // Standard normal prior for z-scores

target += std_normal_lpdf(betaID_z); // Standard normal prior for z-scores

// Likelihood

for (agent in 1:agents){

for (trial in 1:trials){

target += bernoulli_logit_lpmf(h[trial,agent] |

biasID[agent] + logit(memory[trial, agent]) * betaID[agent]);

}

}

}

// Generated quantities for model checking and predictions

generated quantities{

// Prior samples for checking

real biasM_prior;

real<lower=0> biasSD_prior;

real betaM_prior;

real<lower=0> betaSD_prior;

real bias_prior;

real beta_prior;

// Predictive simulations at fixed values of logit(memory) (individual level)

array[agents] int<lower=0, upper = trials> prior_preds0; // logit(memory) = 0, i.e. memory = 0.5 (memory term drops out)

array[agents] int<lower=0, upper = trials> prior_preds1; // logit(memory) = 1, i.e. memory of about 0.73

array[agents] int<lower=0, upper = trials> prior_preds2; // logit(memory) = 2, i.e. memory of about 0.88

array[agents] int<lower=0, upper = trials> posterior_preds0;

array[agents] int<lower=0, upper = trials> posterior_preds1;

array[agents] int<lower=0, upper = trials> posterior_preds2;

// Generate prior samples

biasM_prior = normal_rng(0,1);

biasSD_prior = normal_lb_rng(0,0.3,0);

betaM_prior = normal_rng(0,0.3); // matches the normal(0, .3) prior on betaM in the model block

betaSD_prior = normal_lb_rng(0,0.3,0);

bias_prior = normal_rng(biasM_prior, biasSD_prior);

beta_prior = normal_rng(betaM_prior, betaSD_prior);

// Generate predictions for each agent

for (agent in 1:agents){

// Prior predictive checks

prior_preds0[agent] = binomial_rng(trials, inv_logit(bias_prior + 0 * beta_prior));

prior_preds1[agent] = binomial_rng(trials, inv_logit(bias_prior + 1 * beta_prior));

prior_preds2[agent] = binomial_rng(trials, inv_logit(bias_prior + 2 * beta_prior));

// Posterior predictive checks

// biasID and betaID already include the population means (biasM, betaM),

// so the means must not be added a second time

posterior_preds0[agent] = binomial_rng(trials,

inv_logit(biasID[agent] + 0 * betaID[agent]));

posterior_preds1[agent] = binomial_rng(trials,

inv_logit(biasID[agent] + 1 * betaID[agent]));

posterior_preds2[agent] = binomial_rng(trials,

inv_logit(biasID[agent] + 2 * betaID[agent]));

}

}

"

# Write the Stan model to a file

write_stan_file(

stan_model_nc,

dir = "stan/",

basename = "W6_MultilevelMemory_nc.stan"

)

# File path for saved model

model_file <- "simmodels/W6_MultilevelMemory_noncentered.RDS"

# Check if we need to rerun the simulation

if (regenerate_simulations || !file.exists(model_file)) {

# Compile the model

file <- file.path("stan/W6_MultilevelMemory_nc.stan")

mod_nc <- cmdstan_model(file,

cpp_options = list(stan_threads = TRUE),

stanc_options = list("O1"))

# Sample from the posterior distribution

samples_mlvl_nc <- mod_nc$sample(

data = data_memory,

seed = 123,

chains = 2,

parallel_chains = 2,

threads_per_chain = 1,

iter_warmup = 2000,

iter_sampling = 2000,

refresh = 500,

max_treedepth = 20,

adapt_delta = 0.99

)

# Save the model results

samples_mlvl_nc$save_object(file = model_file)

cat("Generated new model fit and saved to", model_file, "\n")

} else {

# Load existing results

samples_mlvl_nc <- readRDS(model_file)

cat("Loaded existing model fit from", model_file, "\n")

}

7.12.4 Assessing multilevel memory

# Check if samples_mlvl_nc exists

if (!exists("samples_mlvl_nc")) {

cat("Loading multilevel non-centered model samples...\n")

samples_mlvl_nc <- readRDS("simmodels/W6_MultilevelMemory_noncentered.RDS")

cat("Available parameters:", paste(colnames(as_draws_df(samples_mlvl_nc$draws())), collapse=", "), "\n")

}

# Show summary statistics for key parameters

print(samples_mlvl_nc$summary(c("biasM", "betaM", "biasSD", "betaSD")))## # A tibble: 4 × 10

## variable mean median sd mad q5 q95 rhat ess_bulk ess_tail

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 biasM 0.407 0.403 0.0800 0.0802 0.280 0.542 1.00 1343. 1892.

## 2 betaM 1.16 1.17 0.0695 0.0694 1.05 1.28 1.00 1431. 2057.

## 3 biasSD 0.233 0.233 0.0660 0.0646 0.127 0.338 1.00 723. 830.

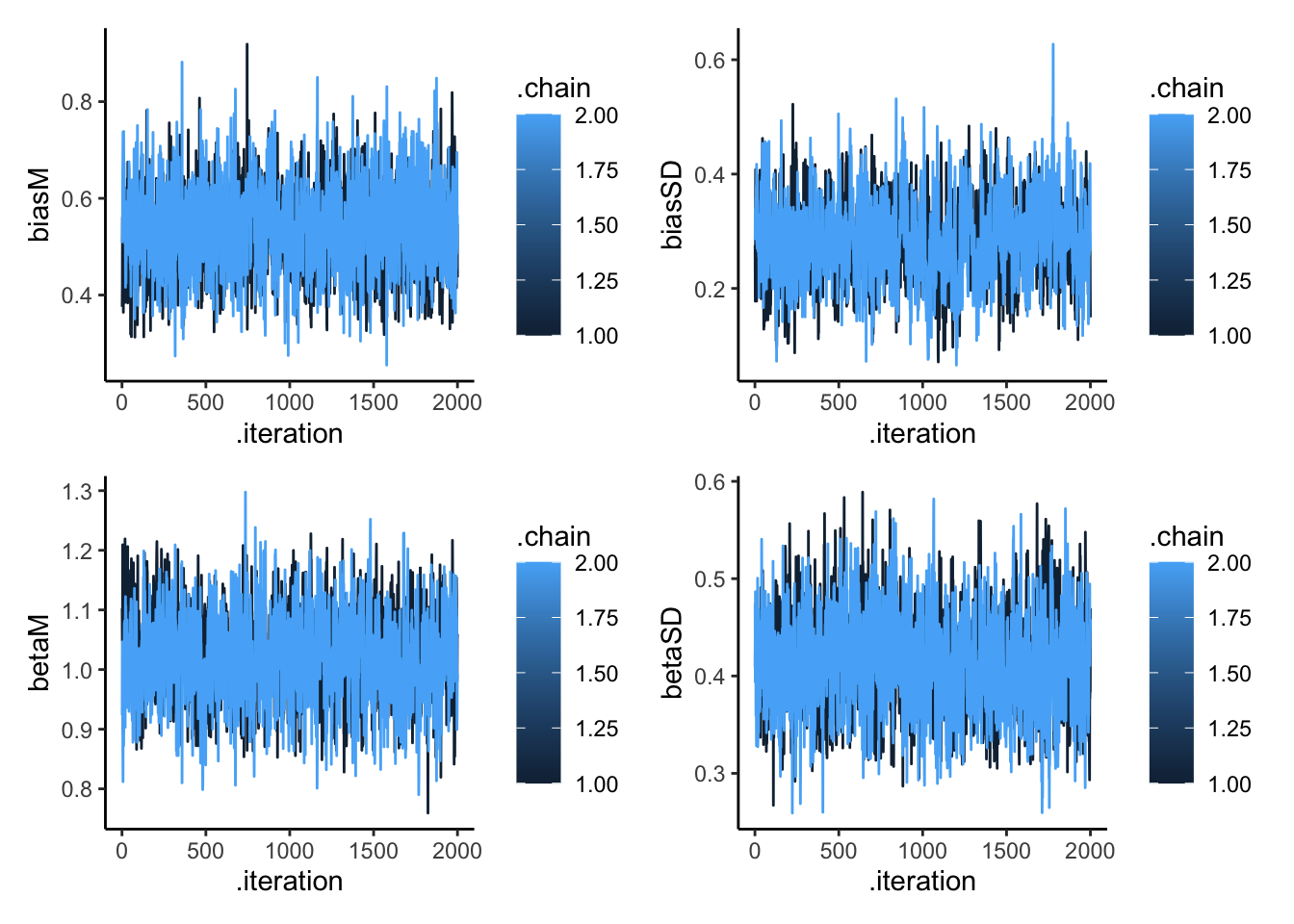

## 4 betaSD 0.379 0.378 0.0468 0.0457 0.306 0.460 1.00 1798. 2762.# Extract posterior draws for analysis

draws_df <- as_draws_df(samples_mlvl_nc$draws())

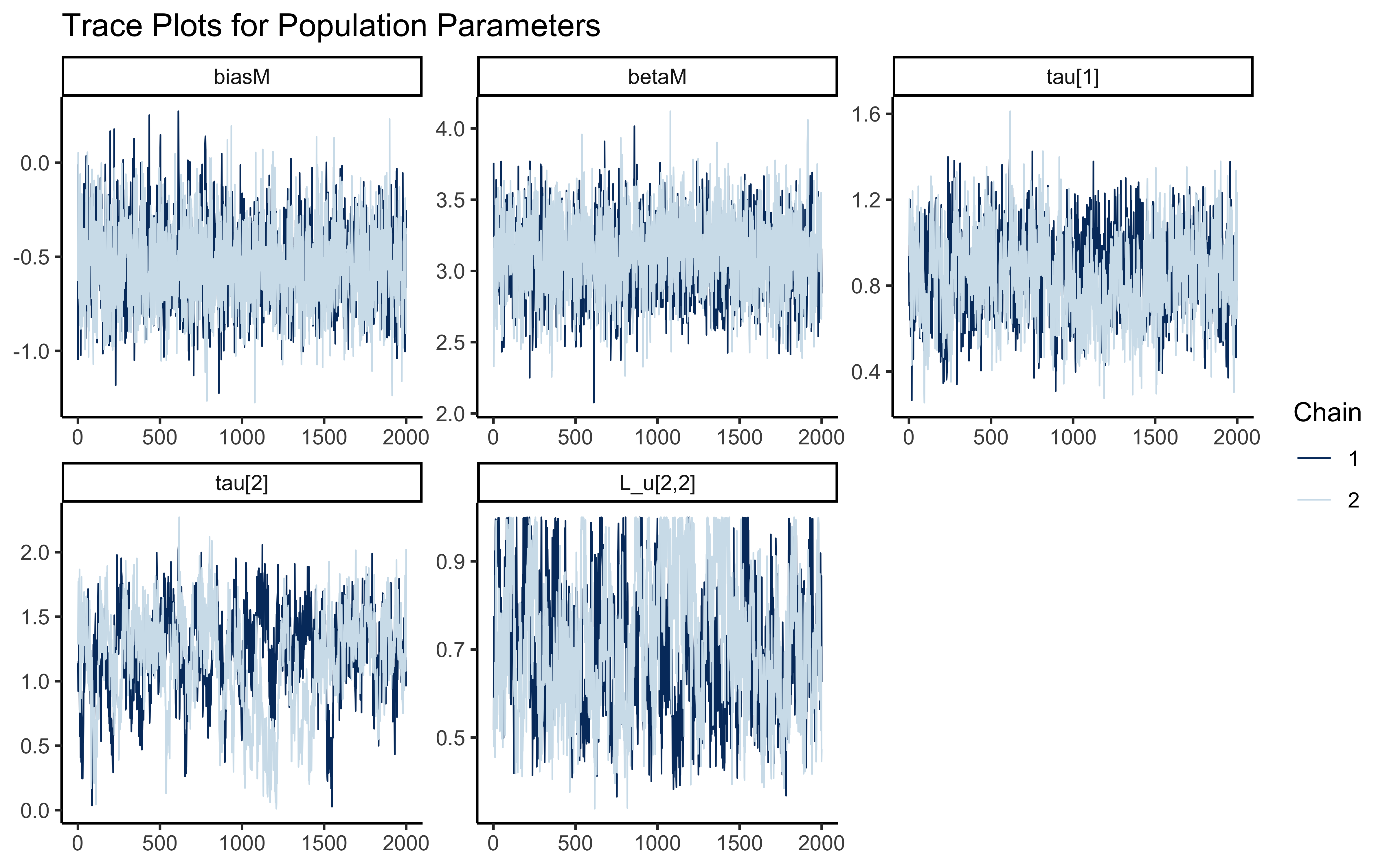

# Create trace plots to check convergence

p1 <- mcmc_trace(draws_df, pars = c("biasM", "biasSD", "betaM", "betaSD")) +

theme_classic() +

ggtitle("Trace Plots for Population Parameters")

# Show trace plots

p1

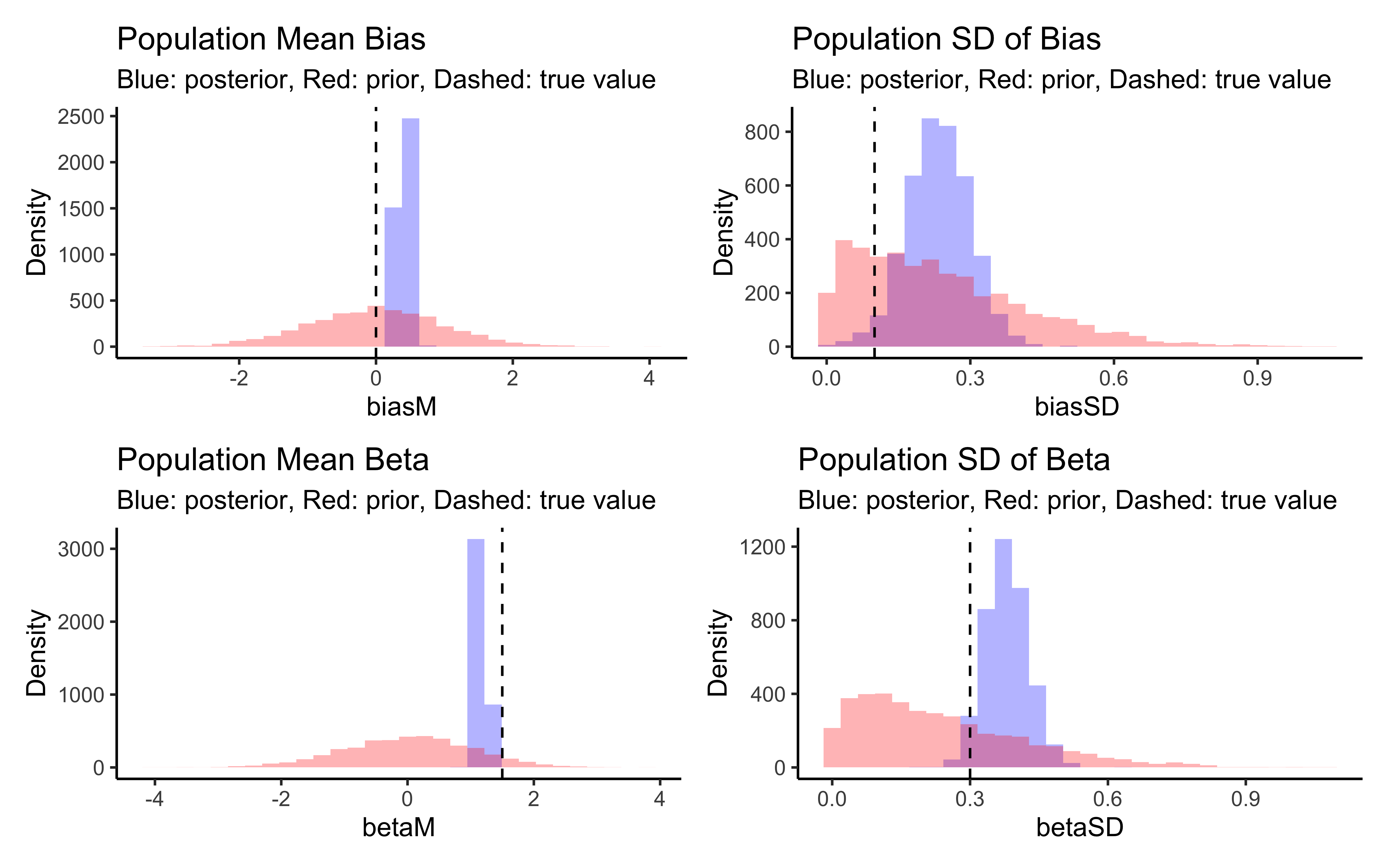

# Create prior-posterior update plots

create_density_plot <- function(param, true_value, title) {

prior_name <- paste0(param, "_prior")

ggplot(draws_df) +

geom_histogram(aes(get(param)), fill = "blue", alpha = 0.3) +

geom_histogram(aes(get(prior_name)), fill = "red", alpha = 0.3) +

geom_vline(xintercept = true_value, linetype = "dashed") +

labs(title = title,

subtitle = "Blue: posterior, Red: prior, Dashed: true value",

x = param, y = "Density") +

theme_classic()

}

# Create individual plots

p_biasM <- create_density_plot("biasM", biasM, "Population Mean Bias")

p_biasSD <- create_density_plot("biasSD", biasSD, "Population SD of Bias")

p_betaM <- create_density_plot("betaM", betaM, "Population Mean Beta")

p_betaSD <- create_density_plot("betaSD", betaSD, "Population SD of Beta")

# Show them in a grid

(p_biasM + p_biasSD) / (p_betaM + p_betaSD)

# Show correlations

p1 <- ggplot(draws_df, aes(biasM, biasSD, group = .chain, color = .chain)) +

geom_point(alpha = 0.1) +

theme_classic()

p2 <- ggplot(draws_df, aes(betaM, betaSD, group = .chain, color = .chain)) +

geom_point(alpha = 0.1) +

theme_classic()

p3 <- ggplot(draws_df, aes(biasM, betaM, group = .chain, color = .chain)) +

geom_point(alpha = 0.1) +

theme_classic()

p4 <- ggplot(draws_df, aes(biasSD, betaSD, group = .chain, color = .chain)) +

geom_point(alpha = 0.1) +

theme_classic()

p1 + p2 + p3 + p4

# Create posterior predictive check plots

p1 <- ggplot(draws_df) +

geom_histogram(aes(`prior_preds0[1]`), fill = "red", alpha = 0.3, bins = 30) +

geom_histogram(aes(`posterior_preds0[1]`), fill = "blue", alpha = 0.3, bins = 30) +

geom_histogram(aes(`posterior_preds1[1]`), fill = "green", alpha = 0.3, bins = 30) +

geom_histogram(aes(`posterior_preds2[1]`), fill = "purple", alpha = 0.3, bins = 30) +

labs(title = "Prior and Posterior Predictive Distributions",

subtitle = "Red: prior, Blue: no memory effect, Green: neutral memory, Purple: strong memory",

x = "Predicted Right Choices (out of 120)",

y = "Count") +

theme_classic()

# Display plots

p1

# Individual-level parameter recovery

# Extract individual parameters for a sample of agents

sample_agents <- sample(1:agents, 100)

sample_data <- d %>%

filter(agent %in% sample_agents, trial == 1) %>%

dplyr::select(agent, bias, beta)

# Extract posterior means for individual agents

bias_means <- c()

beta_means <- c()

for (i in sample_agents) {

bias_means[i] <- mean(draws_df[[paste0("biasID[", i, "]")]])

beta_means[i] <- mean(draws_df[[paste0("betaID[", i, "]")]])

}

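# Create comparison data

# Index by sample_data$agent: filter() returns rows in the order of d,

# not in the random order of sample_agents, so this keeps true and

# estimated values aligned agent by agent

comparison_data <- tibble(

agent = sample_data$agent,

true_bias = sample_data$bias,

est_bias = bias_means[sample_data$agent],

true_beta = sample_data$beta,

est_beta = beta_means[sample_data$agent]

)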

# Plot comparison

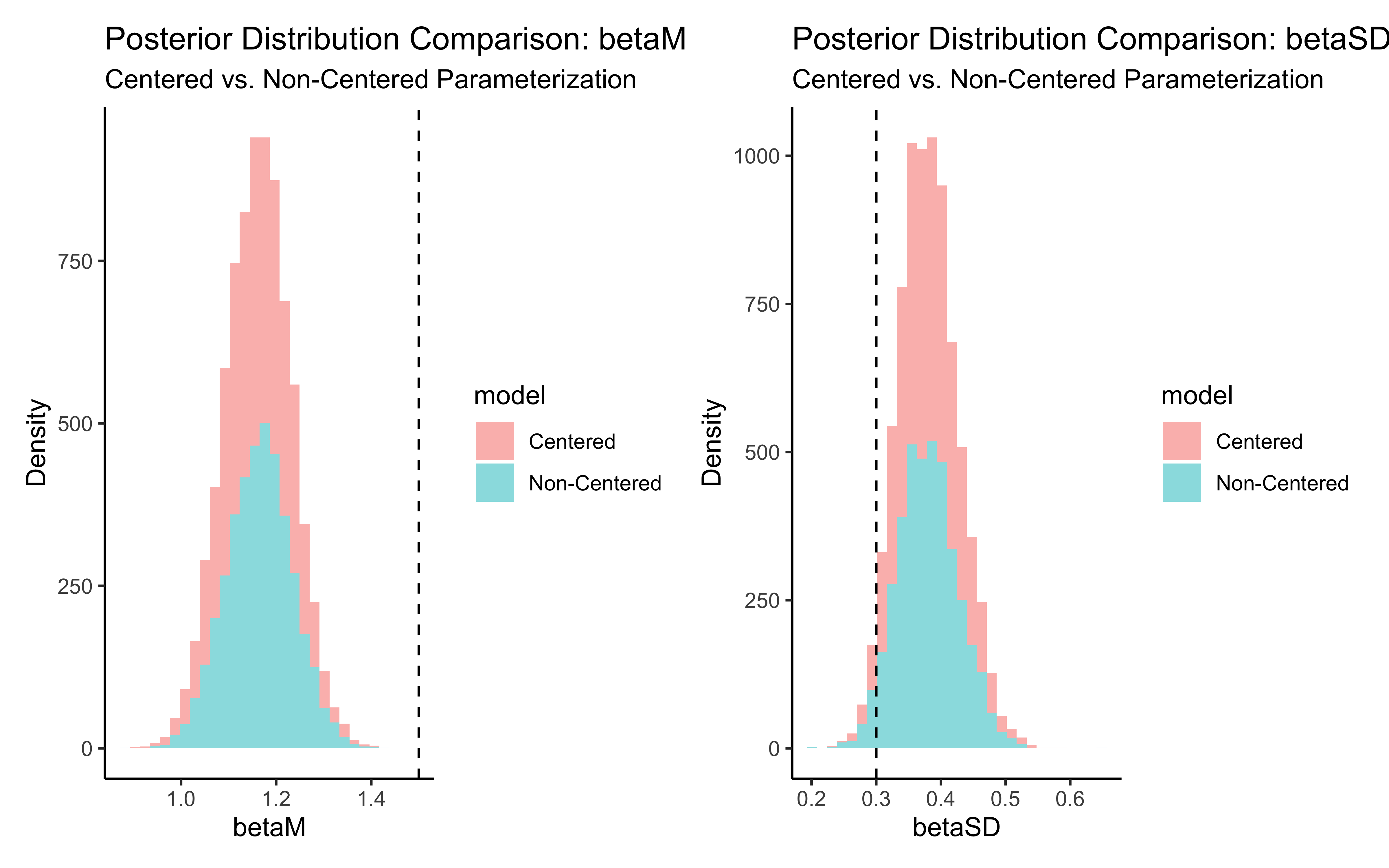

p1 <- ggplot(comparison_data, aes(true_bias, est_bias)) +

geom_point(size = 3) +

geom_abline(intercept = 0, slope = 1, linetype = "dashed") +

geom_smooth(method = lm) +

labs(title = "Bias Parameter Recovery",

x = "True Bias",

y = "Estimated Bias") +

theme_classic()

p2 <- ggplot(comparison_data, aes(true_beta, est_beta)) +

geom_point(size = 3) +

geom_abline(intercept = 0, slope = 1, linetype = "dashed") +

geom_smooth(method = lm) +

labs(title = "Beta Parameter Recovery",

x = "True Beta",

y = "Estimated Beta") +

theme_classic()

# Display parameter recovery plots

p1 + p2
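The recovery plots can be complemented with a few numbers. The sketch below is an illustration only: it simulates hypothetical true values and shrunken, noisy estimates instead of using `comparison_data`, and the helper `recovery_summary()` is a name introduced here, not part of the chapter's code.

```r
# Hypothetical stand-in for comparison_data: simulated true values and
# estimates that are shrunk toward zero and perturbed by noise
set.seed(1)
n_agents <- 100
true_bias <- rnorm(n_agents, mean = 0, sd = 0.3)
est_bias <- 0.7 * true_bias + rnorm(n_agents, mean = 0, sd = 0.1)

# Summarise recovery: correlation, root mean squared error, and the slope
# of estimated on true values (a slope below 1 suggests shrinkage)
recovery_summary <- function(true, est) {
  fit <- lm(est ~ true)
  c(
    correlation = cor(true, est),
    rmse = sqrt(mean((est - true)^2)),
    slope = unname(coef(fit)[2])
  )
}

bias_recovery <- recovery_summary(true_bias, est_bias)
print(round(bias_recovery, 2))
```

With the real fit, one would call `recovery_summary(comparison_data$true_bias, comparison_data$est_bias)` and likewise for beta. A slope below 1 alongside a high correlation is what partial pooling tends to produce: the ordering of agents is preserved while extreme estimates are pulled toward the population mean.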

7.12.5 Multilevel memory with correlation between parameters

stan_model_nc_cor <- "

//

// This Stan model infers agent-level bias and memory sensitivity (beta),

// allowing the two to correlate across agents, from sequences of 1s and 0s

//

functions{

real normal_lb_rng(real mu, real sigma, real lb) {

real p = normal_cdf(lb | mu, sigma); // cdf for bounds

real u = uniform_rng(p, 1);

return (sigma * inv_Phi(u)) + mu; // inverse cdf for value

}

}

// The input (data) for the model.

data {

int<lower = 1> trials;

int<lower = 1> agents;

array[trials, agents] int h;

array[trials, agents] int other;

}

// The parameters accepted by the model.

parameters {

real biasM;

real betaM;

vector<lower = 0>[2] tau;

matrix[2, agents] z_IDs;

cholesky_factor_corr[2] L_u;

}

transformed parameters {

array[trials, agents] real memory;

matrix[agents,2] IDs;

IDs = (diag_pre_multiply(tau, L_u) * z_IDs)';

for (agent in 1:agents){

for (trial in 1:trials){

if (trial == 1) {

memory[trial, agent] = 0.5;

}

if (trial < trials){

memory[trial + 1, agent] = memory[trial, agent] + ((other[trial, agent] - memory[trial, agent]) / trial);

if (memory[trial + 1, agent] == 0){memory[trial + 1, agent] = 0.01;}

if (memory[trial + 1, agent] == 1){memory[trial + 1, agent] = 0.99;}

}

}

}

}

// The model to be estimated.

model {

target += normal_lpdf(biasM | 0, 1);

target += normal_lpdf(tau[1] | 0, .3) -

normal_lccdf(0 | 0, .3);

target += normal_lpdf(betaM | 0, .3);

target += normal_lpdf(tau[2] | 0, .3) -

normal_lccdf(0 | 0, .3);

target += lkj_corr_cholesky_lpdf(L_u | 2);

target += std_normal_lpdf(to_vector(z_IDs));

for (agent in 1:agents){

for (trial in 1:trials){

target += bernoulli_logit_lpmf(h[trial, agent] | biasM + IDs[agent, 1] + memory[trial, agent] * (betaM + IDs[agent, 2]));

}

}

}

generated quantities{

real biasM_prior;

real<lower=0> biasSD_prior;

real betaM_prior;

real<lower=0> betaSD_prior;

real bias_prior;

real beta_prior;

array[agents] int<lower=0, upper = trials> prior_preds0;

array[agents] int<lower=0, upper = trials> prior_preds1;

array[agents] int<lower=0, upper = trials> prior_preds2;

array[agents] int<lower=0, upper = trials> posterior_preds0;

array[agents] int<lower=0, upper = trials> posterior_preds1;

array[agents] int<lower=0, upper = trials> posterior_preds2;

biasM_prior = normal_rng(0,1);

biasSD_prior = normal_lb_rng(0,0.3,0);

betaM_prior = normal_rng(0,0.3); // matches the normal(0, .3) prior on betaM in the model block

betaSD_prior = normal_lb_rng(0,0.3,0);

bias_prior = normal_rng(biasM_prior, biasSD_prior);

beta_prior = normal_rng(betaM_prior, betaSD_prior);

for (i in 1:agents){

prior_preds0[i] = binomial_rng(trials, inv_logit(bias_prior + 0 * beta_prior));

prior_preds1[i] = binomial_rng(trials, inv_logit(bias_prior + 1 * beta_prior));

prior_preds2[i] = binomial_rng(trials, inv_logit(bias_prior + 2 * beta_prior));

posterior_preds0[i] = binomial_rng(trials, inv_logit(biasM + IDs[i,1] + 0 * (betaM + IDs[i,2])));

posterior_preds1[i] = binomial_rng(trials, inv_logit(biasM + IDs[i,1] + 1 * (betaM + IDs[i,2])));

posterior_preds2[i] = binomial_rng(trials, inv_logit(biasM + IDs[i,1] + 2 * (betaM + IDs[i,2])));

}

}

"

write_stan_file(

stan_model_nc_cor,

dir = "stan/",

basename = "W6_MultilevelMemory_nc_cor.stan")
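It may help to write out what the `transformed parameters` block of this model constructs. In the notation below, $\tau$, $L_u$ and $z_i$ correspond to `tau`, `L_u` and the $i$-th column of `z_IDs`; the symbols $u_i$, $\Omega$ and $\rho$ are introduced here for illustration and do not appear in the code.

```latex
% Agent-level offsets (row i of IDs), built from standard normal draws
u_i = \operatorname{diag}(\tau)\, L_u\, z_i, \qquad z_i \sim \mathcal{N}(0, I_2)

% Implied covariance of the offsets
\operatorname{Cov}(u_i)
  = \operatorname{diag}(\tau)\, L_u L_u^{\top} \operatorname{diag}(\tau)
  = \operatorname{diag}(\tau)\, \Omega\, \operatorname{diag}(\tau)

% For two parameters the Cholesky factor of the correlation matrix is
L_u = \begin{pmatrix} 1 & 0 \\ \rho & \sqrt{1 - \rho^2} \end{pmatrix},
\qquad
\Omega = L_u L_u^{\top} = \begin{pmatrix} 1 & \rho \\ \rho & 1 \end{pmatrix}

% Choice model for agent i on trial t
\operatorname{logit} P(h_{t,i} = 1)
  = \text{biasM} + u_{i,1} + \text{memory}_{t,i}\,(\text{betaM} + u_{i,2})
```

Read this way, `tau[1]` and `tau[2]` are the population standard deviations of the bias and beta offsets, the correlation between them is the element `L_u[2,1]`, and `L_u[2,2]` equals $\sqrt{1 - \rho^2}$, which is close to 1 when the correlation is weak.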

model_file <- "simmodels/W6_MultilevelMemory_noncentered_cor.RDS"

# Check if we need to rerun the simulation

if (regenerate_simulations || !file.exists(model_file)) {

# Compile the model

file <- file.path("stan/W6_MultilevelMemory_nc_cor.stan")

mod <- cmdstan_model(file, cpp_options = list(stan_threads = TRUE),

stanc_options = list("O1"))

# Sample from the posterior distribution

samples_mlvl_nc_cor <- mod$sample(

data = data_memory,

seed = 123,

chains = 2,

parallel_chains = 2,

threads_per_chain = 1,

iter_warmup = 2000,

iter_sampling = 2000,

refresh = 500,

max_treedepth = 20,

adapt_delta = 0.99

)

# Save the model results

samples_mlvl_nc_cor$save_object(file = model_file)

cat("Generated new model fit and saved to", model_file, "\n")

} else {

# Load existing results

samples_mlvl_nc_cor <- readRDS(model_file)

cat("Loaded existing model fit from", model_file, "\n")

}

7.12.6 Assessing multilevel memory

# Check if samples_mlvl_nc_cor exists

if (!exists("samples_mlvl_nc_cor")) {

cat("Loading multilevel non centered correlated model samples...\n")

samples_mlvl_nc_cor <- readRDS("simmodels/W6_MultilevelMemory_noncentered_cor.RDS")

cat("Available parameters:", paste(colnames(as_draws_df(samples_mlvl_nc_cor$draws())), collapse = ", "), "\n")

}

# Show summary statistics for key parameters

print(samples_mlvl_nc_cor$summary(c("biasM", "betaM", "tau[1]", "tau[2]", "L_u[2,2]")))

## # A tibble: 5 × 10

## variable mean median sd mad q5 q95 rhat ess_bulk ess_tail

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 biasM -0.536 -0.538 0.217 0.224 -0.885 -0.175 1.00 1812. 2564.

## 2 betaM 3.11 3.11 0.265 0.268 2.66 3.54 1.00 1522. 2250.